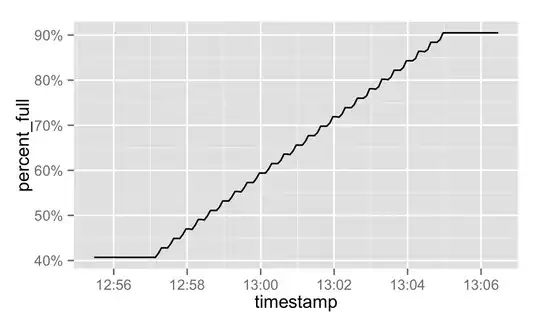

I have an org.apache.solr.hadoop.MapReduceIndexerTool/MorphlineMapper process that fills the local '/' mount.

It runs for a few minutes, the disk fills, Nagios alerts fire, and then I kill the process. Once the process is killed, disk utilization drops back down to its baseline of 40%.

This happens fairly quickly and, since it's a production system, there isn't much time to peruse the filesystem to see which files are new. There are also a couple of NFS mounts that cause du -sh * to hang. We're running RHEL 6.7.

Is there a smart way to figure out what, exactly, is filling the disk? Perhaps a fast way to capture, diff, and aggregate the file sizes from lsof? I imagine this is a fairly common scenario, so there may be a nice awk one-liner that's in every sysadmin's toolkit.
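To make the capture/diff/aggregate idea concrete, here is a minimal sketch rather than a tested tool. Instead of parsing lsof output it reads `/proc/<pid>/fd` directly, which is an assumption about how one might do this on Linux. The function names (`snapshot`, `growth`, `by_directory`) and the command-line arguments are hypothetical. It only sees files that are open at the two snapshot instants, so anything written and closed in between is missed, and it needs to run as root or as the process owner.

```python
from __future__ import print_function
import os
import stat
import sys
import time
from collections import defaultdict


def snapshot(pid):
    """Map each regular file the process holds open to its size in bytes."""
    fd_dir = "/proc/%d/fd" % pid
    sizes = {}
    for fd in os.listdir(fd_dir):
        link = os.path.join(fd_dir, fd)
        try:
            target = os.readlink(link)  # path; ends in " (deleted)" if unlinked
            st = os.stat(link)          # follows the fd, so deleted files still have a size
        except OSError:
            continue                    # fd was closed between listdir and stat
        if stat.S_ISREG(st.st_mode):
            sizes[target] = st.st_size
    return sizes


def growth(before, after):
    """Bytes gained per path between two snapshots (new files count in full)."""
    delta = {}
    for path, size in after.items():
        gained = size - before.get(path, 0)
        if gained > 0:
            delta[path] = gained
    return delta


def by_directory(delta):
    """Sum growth per parent directory, largest first."""
    totals = defaultdict(int)
    for path, gained in delta.items():
        totals[os.path.dirname(path)] += gained
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical usage: python fdgrowth.py <pid> [seconds between snapshots]
    pid = int(sys.argv[1])
    interval = float(sys.argv[2]) if len(sys.argv) > 2 else 10.0
    before = snapshot(pid)
    time.sleep(interval)
    after = snapshot(pid)
    for directory, gained in by_directory(growth(before, after)):
        print("%12d  %s" % (gained, directory))
```

Because the sizes come from the open file descriptors, this also counts files that were deleted while still open, which `du` cannot see and which would explain space being released the moment the process is killed. It never walks the filesystem, so the NFS mounts are not touched. Note that `st_size` is the apparent size, so sparse files may be overstated.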