I have a large job, and I'm experimenting with the topology to see which layout performs best (playing with ntasks, ncores-per-cpu, nodes, etc.). I'm using SLURM as my workload manager.
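One way to keep this kind of experiment systematic is to generate every candidate layout up front and submit each as a separately named job. The sketch below is hypothetical: `job.sh` stands in for the real batch script, the task and CPU counts are arbitrary examples, and the 48-CPU limit is the per-node logical CPU count from the hardware described in this post. It uses only the standard `sbatch` options `--nodes`, `--ntasks-per-node`, `--cpus-per-task` and `--job-name`.

```python
import itertools
import shlex

# Hypothetical limits: 2 nodes, 48 logical CPUs per node (see hardware notes).
MAX_NODES = 2
CPUS_PER_NODE = 48


def sweep(job_script="job.sh"):
    """Yield sbatch command lines for each (nodes, ntasks-per-node,
    cpus-per-task) combination that fits within one node's logical CPUs."""
    for nodes, tasks, cpt in itertools.product(
        range(1, MAX_NODES + 1), (6, 12, 24, 48), (1, 2)
    ):
        if tasks * cpt > CPUS_PER_NODE:
            continue  # this layout would oversubscribe a node
        args = [
            "sbatch",
            f"--nodes={nodes}",
            f"--ntasks-per-node={tasks}",
            f"--cpus-per-task={cpt}",
            # Encode the layout in the job name so runtimes are easy to compare.
            f"--job-name=topo_n{nodes}_t{tasks}_c{cpt}",
            job_script,
        ]
        yield shlex.join(args)


if __name__ == "__main__":
    for cmd in sweep():
        print(cmd)
```

Running it prints one `sbatch` line per layout; the elapsed times of the resulting jobs can then be compared by job name.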

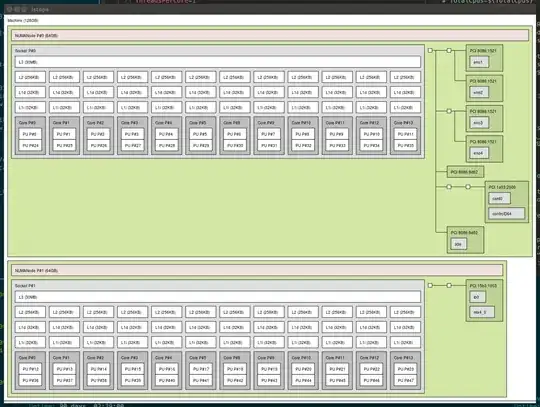

I have two nodes (CentOS 7, managed with Bright 7), each with two Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz processors. Each processor has 12 cores with Hyper-Threading enabled, so each node appears to have 48 cores (2 sockets × 12 cores × 2 threads). The processor topology reported by lstopo is shown in the image above.
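To confirm the 48-versus-24 split on a node without a GUI, the logical CPU and physical core counts can be read from `/proc/cpuinfo`. This is a minimal sketch assuming the usual x86 Linux format, where each `processor` block has `physical id` (socket) and `core id` fields; the function name `count_cpus` is hypothetical. Two hardware threads on the same core share the same (`physical id`, `core id`) pair, so counting unique pairs gives physical cores.

```python
import os


def count_cpus(cpuinfo_text):
    """Return (logical_cpus, physical_cores) parsed from /proc/cpuinfo text.

    Each "processor" entry is one logical CPU (hardware thread); a physical
    core is identified by its unique ("physical id", "core id") pair.
    """
    logical = 0
    cores = set()
    physical_id = core_id = None
    # The extra "" guarantees the final block is closed even without a
    # trailing blank line.
    for line in cpuinfo_text.splitlines() + [""]:
        if not line.strip():
            # A blank line ends one processor block.
            if physical_id is not None and core_id is not None:
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            core_id = value
    return logical, len(cores)


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        logical, physical = count_cpus(f.read())
    print(f"{logical} logical CPUs, {physical} physical cores")
```

On the nodes described here, with Hyper-Threading on, it should report twice as many logical CPUs as physical cores.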

My question: should I disable Hyper-Threading in the BIOS, try to disable it in SLURM, or just treat my system as if it has twice as many cores? Specifying `--threads-per-core=1` doesn't seem to have any effect.
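A direct way to answer the "double the cores or not" question empirically is to run the same job twice: once with one task per hardware thread and once with one task per physical core. The sketch below builds the two `#SBATCH` headers; the helper name `sbatch_header` is hypothetical, and the layout constants are taken from the hardware described in this post. It uses `--hint=nomultithread`, a documented `sbatch` option, instead of `--threads-per-core=1`; recent `sbatch` man pages state that `--hint` cannot be combined with `--threads-per-core`, and whether either actually changes placement depends on the site's Slurm configuration (for example, whether task affinity is enabled).

```python
# Layout of one node as described in this post (2 sockets, 12 cores, 2 threads).
SOCKETS = 2
CORES_PER_SOCKET = 12
THREADS_PER_CORE = 2


def sbatch_header(nodes, use_hyperthreads):
    """Return #SBATCH lines for one task per logical CPU (use_hyperthreads=True)
    or one task per physical core (use_hyperthreads=False)."""
    physical = SOCKETS * CORES_PER_SOCKET
    if use_hyperthreads:
        # Treat every hardware thread as a core: 48 tasks per node.
        options = [f"--ntasks-per-node={physical * THREADS_PER_CORE}"]
    else:
        # One task per physical core; ask Slurm not to use the sibling threads.
        # --hint is not combined with --threads-per-core here.
        options = [f"--ntasks-per-node={physical}", "--hint=nomultithread"]
    return "\n".join(f"#SBATCH {opt}" for opt in [f"--nodes={nodes}"] + options)


if __name__ == "__main__":
    print(sbatch_header(2, use_hyperthreads=True))
    print()
    print(sbatch_header(2, use_hyperthreads=False))
```

Comparing the wall-clock times of the two variants shows whether the extra hardware threads help this particular workload before changing anything in the BIOS.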

My job is a large environmental model with lots of I/O and lots of matrix calculations, and it currently takes on the order of days to run.

I've been reading the SLURM FAQ but am still unsure how to proceed.