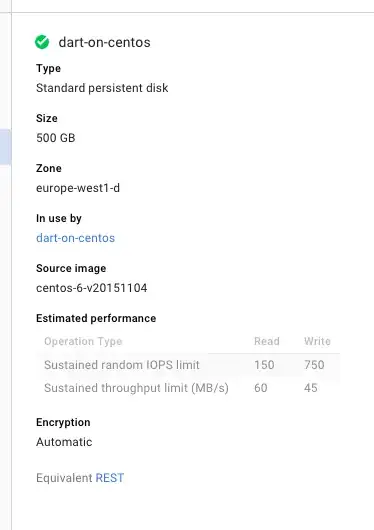

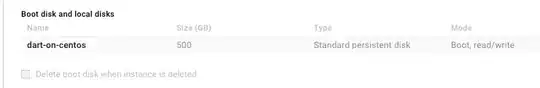

I keep running out of disk space while compiling gcc. Each time I create a larger disk, and after about 5 hours of compiling it runs out of space again. I've now resized the disk 4 times and have restarted the compile step for the 4th time, this time with a 500GB disk.

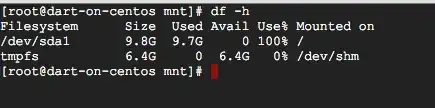

When I ran df -h to see how much space was used, it reported only 9.7GB used, yet that counts as 100%.

I thought there might be another disk, but I only see sda and its single partition:

    ls /dev/sd*
    /dev/sda /dev/sda1

So is my disk actually 500GB and df is just reporting it wrong (in which case compiling gcc really does chow through the whole 500GB), or is Google Cloud's dashboard reporting it wrong, df is right, and compiling gcc is not chowing through 500GB?

Either way, unless I'm supposed to do something to make use of the 500GB (which is counter-intuitive by the way), I'm guessing this is a bug?

(I searched before posting, but I've only found AWS-related issues.)

UPDATE - lsblk explains it:

    lsblk
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda      8:0    0  500G  0 disk
    └─sda1   8:1    0   10G  0 part /
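In other words, the disk really is 500G, but the partition mounted at / is still 10G, so df only ever sees the 10G filesystem. A minimal sketch of how that gap could be checked from a script follows. The helper name `unpartitioned_bytes` is hypothetical, and the sample sizes assume that lsblk's `G` means GiB; the commented-out invocation assumes the util-linux `lsblk` with its `-b` (bytes), `-n` (no header), `-l` (list, no tree characters) and `-o` (columns) options.

```shell
# Hypothetical helper: reads "NAME SIZE TYPE" rows (sizes in bytes) on stdin
# and prints, per disk, how many bytes are not covered by its partitions.
unpartitioned_bytes() {
  awk '
    function report() { if (disk != "") printf "%s %.0f\n", disk, total - used }
    $3 == "disk" { report(); disk = $1; total = $2; used = 0 }
    $3 == "part" { used += $2 }
    END { report() }
  '
}

# On the VM itself this would be fed from lsblk:
#   lsblk -bnlo NAME,SIZE,TYPE /dev/sda | unpartitioned_bytes

# Demo with the sizes from the post (500 GiB disk, 10 GiB partition):
printf 'sda 536870912000 disk\nsda1 10737418240 part\n' | unpartitioned_bytes
```

With the numbers from the post this reports about 490 GiB of sda outside any partition. On many Linux images the usual remedy is to grow the partition and then the filesystem (for example with `growpart` followed by `resize2fs` on ext4), but which tools apply depends on the image and filesystem, which the post does not state.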