I'm working on a project to upgrade our web servers from Windows Server 2008 to Windows Server 2012. I have the 2012 machines built and ready to go, with site code cloned from the current production 2008 machines. During load testing, though, I'm finding that outbound bandwidth on the new servers is much higher than on the old ones. I've combed through everything I can think of that might account for this and have hit a dead end (compression settings, even a head-to-head comparison of applicationHost.config, web.config, machine.config, etc.). The servers run identical code on the same versions of .NET; the only real difference is the OS version.
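For anyone repeating the head-to-head config comparison, here is a minimal sketch of one way to do it, assuming you have already copied each server's config file locally (file names and locations are up to you; nothing here reads from the servers directly). It uses Python's standard `difflib` and strips leading/trailing whitespace and blank lines first, so that re-indented but otherwise identical XML does not show up as a difference.

```python
import difflib
from typing import List


def diff_configs(old_text: str, new_text: str,
                 old_name: str = "2008", new_name: str = "2012") -> List[str]:
    """Return a unified diff of two config files' contents.

    Each line is stripped and blank lines are dropped before comparing, so
    indentation-only differences are ignored. An empty list means the files
    are equivalent under that normalization.
    """
    old_lines = [line.strip() for line in old_text.splitlines() if line.strip()]
    new_lines = [line.strip() for line in new_text.splitlines() if line.strip()]
    return list(difflib.unified_diff(old_lines, new_lines,
                                     fromfile=old_name, tofile=new_name,
                                     lineterm=""))
```

To use it, read both copies of a file (for example with `open(path, encoding="utf-8-sig")`, which also tolerates a byte-order mark) and print each line of `diff_configs(old, new)`. Note that this only catches textual differences; settings inherited from OS-level defaults that never appear in the files will not show up.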

Example: I have one old and one new server under the same load right now, 100 users each routed via the load balancer, so traffic should be about equal.

- 2008 bytes in: avg 90 kbps
- 2012 bytes in: avg 86 kbps

(Pretty close to each other, reflecting the fact that they have the same number of connections, right?)

- 2008 bytes out: avg 64 kbps
- 2012 bytes out: avg 136 kbps

Not a huge deal at this scale, but this is only 100 users. Scaled to the 40-50k users across our server pool, this outbound bandwidth becomes a huge problem. We want to go live with the new servers next week. Help!
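To put rough numbers on that, here is a back-of-the-envelope sketch that scales the 100-user measurements above linearly. The linear-scaling assumption is mine and only a first approximation; the result is in the same unit as the counters (kbps divided by 1000, decimal prefixes). The function name `project_outbound_mbps` is purely illustrative.

```python
def project_outbound_mbps(kbps_out: float, sample_users: int, total_users: int) -> float:
    """Scale outbound bandwidth measured for sample_users up to total_users.

    Assumes bandwidth grows proportionally with user count. Multiplying
    before dividing keeps the arithmetic exact for these integer inputs.
    """
    return kbps_out * total_users / sample_users / 1000  # kbps -> Mbps


# Measurements from the two servers above, each carrying 100 users.
measured_out_kbps = {"2008": 64, "2012": 136}

for label, out_kbps in measured_out_kbps.items():
    for users in (40_000, 50_000):
        mbps = project_outbound_mbps(out_kbps, 100, users)
        print(f"{label}: {users:,} users -> ~{mbps:.1f} Mbps out")
```

Under that assumption, the 2008 servers would push roughly 25.6-32 Mbps of outbound traffic for the whole pool, versus roughly 54.4-68 Mbps for the 2012 servers: about 2.1 times as much, even though inbound traffic is nearly the same.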

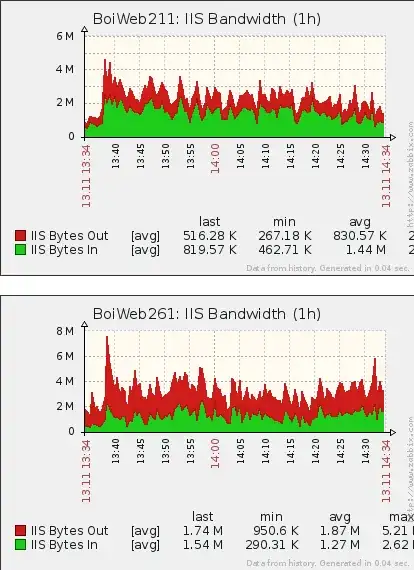

Update: I'm throwing more traffic at both of them. Here's an illustration of how disproportionate the bandwidth is. The goal is to get the bottom graph to look like the top graph.

Update 2: I just cloned an existing 2008 machine and did an in-place upgrade to 2012. Its bandwidth proportions look good, about the same as the 2008 machine's. I still can't find what's different about my freshly built 2012 servers.