I'm not a (very good) back-end developer, so understanding processes and memory is a little above my pay grade.

I'm currently building an app using the MEAN stack. I also have a separate Express server, running on localhost, that acts as a web scraper.

The flow is: my Angular app gathers the user's data -> sends it to the MEAN Express backend -> the Express route sends a POST request to my web scraper, and the scraper does its thing (it uses requestjs to fetch each page, loads the HTML into cheerio, and parses it).
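One common pattern for long-running jobs like this (an assumption about how the scraper could be structured, not a description of how it works today) is to have the scraper's POST handler register a job, start the work in the background, and answer immediately with a job id. Progress is then tracked per job instead of in one shared local variable, which would get mixed up as soon as two users scrape at once. The names `startJob`, `getProgress` and `scrapeOne` are hypothetical; `scrapeOne` stands in for the requestjs + cheerio step, and the Express wiring is left out so the sketch runs on its own.

```typescript
// Hypothetical in-memory job registry: one entry per scrape request,
// rather than a single module-level progress variable shared by everyone.
type JobStatus = "running" | "done" | "failed";

interface Job {
  total: number;     // number of URLs to scrape
  completed: number; // URLs finished so far
  status: JobStatus;
}

const jobs = new Map<string, Job>();
let nextId = 1;

// Called from the POST handler: register the job, start the work in the
// background, and return the id right away instead of awaiting the scrape.
function startJob(urls: string[], scrapeOne: (url: string) => Promise<void>): string {
  const id = String(nextId++);
  const job: Job = { total: urls.length, completed: 0, status: "running" };
  jobs.set(id, job);

  // Fire-and-forget: the async IIFE keeps running after startJob returns.
  (async () => {
    try {
      for (const url of urls) {
        await scrapeOne(url);
        job.completed++;
      }
      job.status = "done";
    } catch {
      job.status = "failed";
    }
  })();

  return id;
}

// Called from the progress GET handler: a cheap Map lookup.
function getProgress(id: string): Job | undefined {
  return jobs.get(id);
}
```

In Express, the POST route would call `startJob` and reply with the id (for example `res.status(202).json({ id })`), and a hypothetical `GET /progress/:id` route would return `getProgress(req.params.id)`.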

The scraping process can take a while (up to 5 minutes), so I want to send progress updates to the browser. Currently I do this:

- Every 5 seconds, the browser sends a `GET` request to my MEAN API asking it to request an update message from my web scraper.
- The MEAN API sends a `GET` request to my web scraper server.
- The web scraper server checks the progress (just a local variable that is used in a function).
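The browser side of the polling loop above can be sketched as follows. It is a minimal, framework-free version: `fetchProgress` is a hypothetical stand-in for the actual `GET` to the MEAN API (Angular's `HttpClient` or `fetch`), and the `Progress` shape is assumed.

```typescript
// Assumed shape of the progress response from the MEAN API.
interface Progress {
  completed: number;
  total: number;
  status: "running" | "done" | "failed";
}

// Polls until the job is no longer running. The next poll is scheduled only
// after the previous response has arrived, so slow responses never pile up.
function pollProgress(
  fetchProgress: () => Promise<Progress>,
  onUpdate: (p: Progress) => void,
  intervalMs = 5000,
): Promise<Progress> {
  return new Promise((resolve, reject) => {
    const tick = async () => {
      try {
        const p = await fetchProgress();
        onUpdate(p);
        if (p.status === "running") {
          setTimeout(tick, intervalMs); // wait, then ask again
        } else {
          resolve(p); // finished or failed: stop polling
        }
      } catch (err) {
        reject(err);
      }
    };
    void tick();
  });
}
```

Re-arming a `setTimeout` after each response, rather than using a fixed `setInterval`, means that when the server is slow the browser simply waits instead of stacking up a backlog of outstanding requests.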

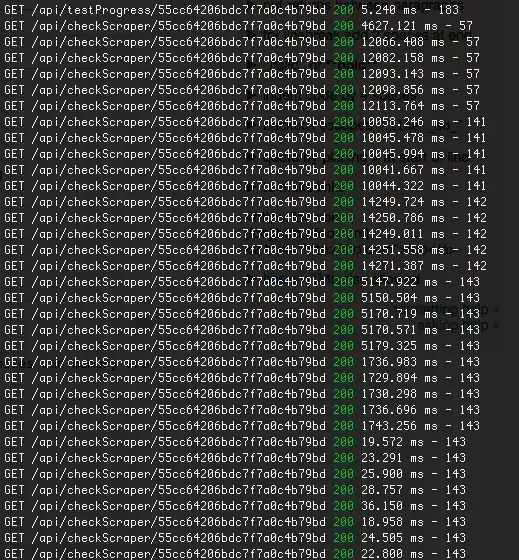

This works, but while the scraper is running the update responses are VERY slow. See below for a log:

It seems my web scraper server is struggling under the load of a single user's request (scraping about 1500 websites). I can only imagine that with 10, 20, or 1000 users the whole thing will just crumble.
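A frequent cause of this symptom (a guess, since the post doesn't show how the 1500 requests are started) is firing all the requests at once, so hundreds of responses arrive together and their synchronous cheerio parsing runs back to back while the progress `GET` waits its turn. A minimal sketch of a concurrency-limited loop that also yields a full event-loop turn between items is below; `mapWithLimit` is a hypothetical helper, and the limit is a value to tune, not a recommendation.

```typescript
// Runs `worker` over every item with at most `limit` calls in flight,
// preserving the order of results.
async function mapWithLimit<T, R>(
  items: T[],
  limit: number,
  worker: (item: T) => Promise<R>,
): Promise<R[]> {
  const results = new Array<R>(items.length);
  let next = 0;

  async function runner(): Promise<void> {
    while (next < items.length) {
      const i = next++; // claim an index; safe because this line runs synchronously
      results[i] = await worker(items[i]);
      // Yield a full event-loop turn (not just a microtask) so incoming
      // requests, such as a progress GET, can be handled between items.
      await new Promise<void>((r) => setTimeout(r, 0));
    }
  }

  // Start `limit` runners (at least one) that pull from the shared index.
  const count = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: count }, () => runner()));
  return results;
}
```

Usage would look like `await mapWithLimit(urls, 10, scrapeOne)`, where `scrapeOne` is the (hypothetical) function that fetches one page and parses it with cheerio.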

Is my flow completely wrong here? I feel like I'm in a bit over my head, but I'd like to learn how to debug where my web scraper is lagging and see what I can do to optimise it!

EDIT: As per the title, is this an issue of not allocating enough memory to my Node/Express server, or something else?
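One way to tell a memory problem apart from a busy event loop (an assumption about the cause, since the post doesn't include heap figures) is to measure how late timers fire while the scraper runs. A minimal, dependency-free probe is sketched below; `startLagProbe` is a hypothetical name, and Node's built-in `perf_hooks.monitorEventLoopDelay` does the same job more precisely.

```typescript
// Schedules a timer every `intervalMs` and records how much later than
// requested it actually fired. Large lag while scraping means the process is
// busy with synchronous work (e.g. parsing), which more memory will not fix.
function startLagProbe(intervalMs = 100) {
  let maxLagMs = 0;
  let stopped = false;
  let handle: ReturnType<typeof setTimeout>;

  const schedule = () => {
    const scheduledAt = Date.now();
    handle = setTimeout(() => {
      const lag = Date.now() - scheduledAt - intervalMs;
      maxLagMs = Math.max(maxLagMs, lag);
      if (!stopped) schedule(); // re-arm for the next measurement
    }, intervalMs);
  };
  schedule();

  return {
    maxLagMs: () => maxLagMs,
    stop: () => {
      stopped = true;
      clearTimeout(handle);
    },
  };
}
```

If the lag is large but `process.memoryUsage().heapUsed` stays well below the heap limit, raising `--max-old-space-size` is unlikely to help; spreading out the parsing work (or moving it to a worker thread) is the more likely fix.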