I am emulating a network with netem. I wrote a script that changes the network delay every 15 seconds, following a linear function from 1 to 50 ms (with a normally distributed variation of 5%). The packet loss is set to 1%.
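A script of this kind could look roughly like the sketch below. It only builds and prints the `tc` commands rather than running them. The interface name `eth0`, the choice of 50 steps, and the reading of "5%" as a jitter equal to 5% of the current delay are assumptions made for illustration, and `tc qdisc change` assumes a netem qdisc is already attached to the interface.

```python
def delay_schedule(start_ms=1.0, end_ms=50.0, steps=50):
    """Return `steps` delay values rising linearly from start_ms to end_ms."""
    if steps < 2:
        raise ValueError("need at least two steps for a linear ramp")
    step = (end_ms - start_ms) / (steps - 1)
    return [start_ms + i * step for i in range(steps)]


def netem_command(delay_ms, dev="eth0", jitter_fraction=0.05, loss_percent=1.0):
    """Build the tc/netem command for one delay value.

    dev, jitter_fraction and loss_percent are illustrative defaults
    (assumptions), not values taken from a real setup.
    """
    jitter_ms = delay_ms * jitter_fraction
    return [
        "tc", "qdisc", "change", "dev", dev, "root", "netem",
        "delay", f"{delay_ms:.2f}ms", f"{jitter_ms:.2f}ms",
        "distribution", "normal",
        "loss", f"{loss_percent:g}%",
    ]


if __name__ == "__main__":
    # Print the commands; a real script would run each one with root
    # privileges and sleep 15 seconds before applying the next.
    for delay in delay_schedule():
        print(" ".join(netem_command(delay)))
```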

Why does the packet loss rate that ping reports between the two machines follow the delay curve? Shouldn't it be constant?

This is the ping command:

sudo ping 192.168.0.1 -i 0.01 -w 1 -n -q

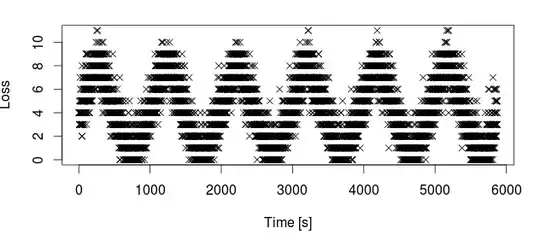

Here is the graph of the packet loss rate over time:

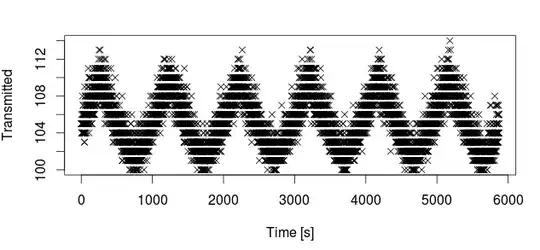

Moreover, even though I set -c 100, the number of transmitted packets is slightly above 100, while the number of received packets is exactly 100. Why? The ping man page says:

-c count : Stop after sending count ECHO_REQUEST packets.

Here is the graph of the number of packets transmitted over time.

EDIT: I have noticed that this phenomenon is still present if I set the packet loss to 0%. So the losses must be caused by the delay introduced by netem. Are the lost packets perhaps the latecomers?
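One way to sanity-check the latecomer hypothesis is a toy model of a deadline-limited ping run. The sketch below is not how ping is implemented. It assumes that a request is sent every 10 ms (`-i 0.01`) until a 1 s deadline (`-w 1`), that every reply arrives exactly `delay_ms` after its request, and that any reply still in flight at the deadline is counted as lost.

```python
def simulated_loss(delay_ms, interval_ms=10, deadline_ms=1000):
    """Fraction of probes whose reply arrives after the deadline.

    Toy model (assumption): no real loss and a fixed round-trip delay,
    so only the probes sent shortly before the deadline go unanswered.
    """
    send_times = range(0, deadline_ms, interval_ms)
    sent = len(send_times)
    received = sum(1 for t in send_times if t + delay_ms <= deadline_ms)
    return (sent - received) / sent


if __name__ == "__main__":
    for delay in (1, 10, 20, 30, 40, 50):
        print(f"delay {delay:2d} ms -> loss {simulated_loss(delay):.0%}")
```

Under these assumptions the reported loss grows with the delay even though no packet is actually dropped, which matches the shape of the observed curve.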