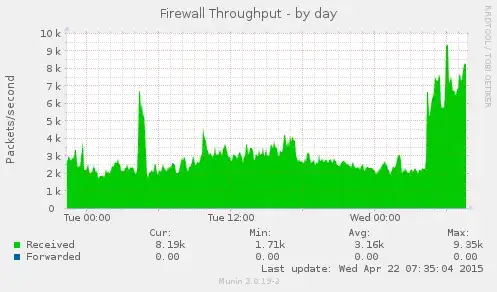

I've just pushed a large change to my backend code and have noticed a massive increase in the load average in the few hours since the push. I looked at Munin to see what the problem might be and noticed that, along with the load average, the firewall throughput had increased hugely too:

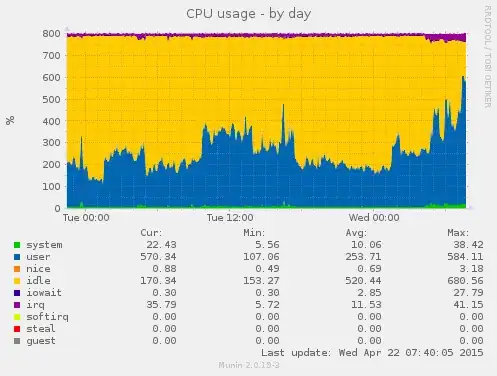

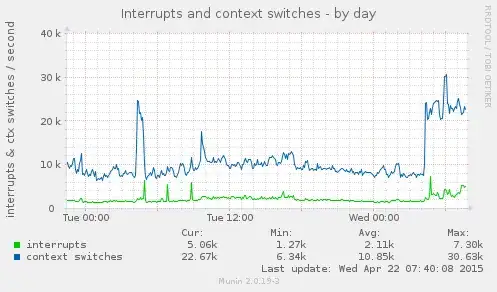

This is along with increases in CPU usage, interrupts and load average, which I've added here for completeness:

Does anyone know what could be going on here? My immediate thought was that the code changes put more load on the database (PostgreSQL), but I can't find a reason for the increase in firewall throughput. The traffic has stayed the same; the only difference is the Python code running under Gunicorn. In htop the highest-CPU process alternates between Gunicorn and Postgres, just as it did before (suggesting that Postgres hasn't suddenly become a CPU hog).
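
To narrow down where the extra packets are flowing, one option is to compare per-interface counters over a short interval. The following is only a rough sketch, assuming a Linux host that exposes `/proc/net/dev` in its usual layout (two header lines, then one line per interface with eight receive fields followed by eight transmit fields). The function names `parse_net_dev`, `delta` and `sample` are made up for illustration.

```python
import time


def parse_net_dev(text):
    """Return {interface: (rx_bytes, rx_packets, tx_bytes, tx_packets)}."""
    counters = {}
    for line in text.splitlines()[2:]:  # skip the two header lines
        if ":" not in line:
            continue
        name, data = line.split(":", 1)
        fields = data.split()
        if len(fields) < 10:
            continue
        # Fields 0-1 are receive bytes/packets, fields 8-9 are transmit bytes/packets.
        counters[name.strip()] = (
            int(fields[0]),
            int(fields[1]),
            int(fields[8]),
            int(fields[9]),
        )
    return counters


def delta(before, after):
    """Per-interface counter growth between two parse_net_dev() snapshots."""
    return {
        iface: tuple(a - b for a, b in zip(after[iface], before[iface]))
        for iface in after
        if iface in before
    }


def sample(interval=10, path="/proc/net/dev"):
    """Read the counters twice, `interval` seconds apart, and return the growth."""
    with open(path) as f:
        before = parse_net_dev(f.read())
    time.sleep(interval)
    with open(path) as f:
        after = parse_net_dev(f.read())
    return delta(before, after)
```

Calling `sample(10)` on the server would show how many bytes and packets each interface (including `lo`) handled in ten seconds, which should indicate whether the increase is on the external interface or elsewhere.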

EDIT: This is the output from `iptables -L -n -v`:

    Chain INPUT (policy ACCEPT 298K packets, 357M bytes)
     pkts bytes target        prot opt in     out     source               destination
     7705  516K fail2ban-ssh  tcp  --  *      *       0.0.0.0/0            0.0.0.0/0            multiport dports 22

    Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)
     pkts bytes target        prot opt in     out     source               destination

    Chain OUTPUT (policy ACCEPT 296K packets, 372M bytes)
     pkts bytes target        prot opt in     out     source               destination

    Chain fail2ban-ssh (1 references)
     pkts bytes target        prot opt in     out     source               destination
       17  1720 REJECT        all  --  *      *       58.218.201.19        0.0.0.0/0            reject-with icmp-port-unreachable
       16  1228 REJECT        all  --  *      *       210.45.250.3         0.0.0.0/0            reject-with icmp-port-unreachable
     7583  505K RETURN        all  --  *      *       0.0.0.0/0            0.0.0.0/0
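
For a rough sense of scale, the chain policy counters above can be turned into plain numbers and an average packet size. This is only a sketch: it assumes the `K`/`M` suffixes printed by iptables are decimal multiples (1,000 and 1,000,000), and the helper names `parse_policy_counters` and `mean_packet_size` are hypothetical.

```python
import re

# Assumption: iptables abbreviates large counters with decimal suffixes.
SUFFIXES = {"": 1, "K": 1000, "M": 1000**2, "G": 1000**3, "T": 1000**4}

# Matches lines such as "Chain INPUT (policy ACCEPT 298K packets, 357M bytes)".
POLICY_RE = re.compile(
    r"^Chain (\S+) \(policy (\S+) (\d+)([KMGT]?) packets, (\d+)([KMGT]?) bytes\)"
)


def parse_policy_counters(output):
    """Return {chain: (packets, bytes)} for every chain that reports a policy."""
    result = {}
    for line in output.splitlines():
        match = POLICY_RE.match(line.strip())
        if match:
            chain, _policy, pkts, pkt_suffix, nbytes, byte_suffix = match.groups()
            result[chain] = (
                int(pkts) * SUFFIXES[pkt_suffix],
                int(nbytes) * SUFFIXES[byte_suffix],
            )
    return result


def mean_packet_size(output):
    """Return {chain: average bytes per packet}, skipping chains with no packets."""
    return {
        chain: nbytes / pkts
        for chain, (pkts, nbytes) in parse_policy_counters(output).items()
        if pkts > 0
    }
```

Fed the output above, this works out to roughly 1.2 KB per packet on both INPUT and OUTPUT, although the abbreviated counters make that only an approximation.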

UPDATE: I rebooted the whole server and the load average climbed back up to around 7, so I guess I can rule out the cache holding stale data after the changes to the DB schema.