We recently upgraded a remote site from a 10/10Mbps fibre link to a 20/20Mbps fibre link (it is fibre to the basement, then VDSL from the basement to the office, roughly 30 meters). There are regular large (multi-gig) file copies between this site and a central site, so the theory was that increasing the link to 20/20 should roughly halve the transfer times.

However, file copies (e.g. using robocopy to copy files in either direction, or Veeam Backup and Recovery's replication) are still capped at 10Mbps.

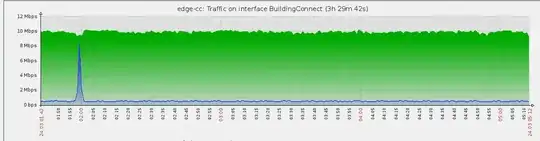

Before upgrade:

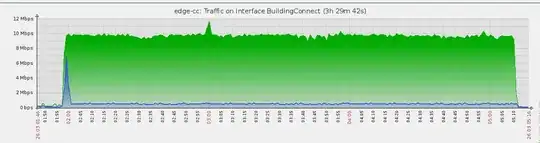

After upgrade (robocopy):

Almost identical (ignore the difference in length of time of the transfer).

The transfers are being done over an IPSec tunnel between a Cisco ASA5520 and a Mikrotik RB2011UiAS-RM.

First thoughts:

- QoS - nope. There are QoS rules, but none that should affect this flow. I disabled all the rules for a few minutes to check anyway, and there was no change.

- Software-defined limits. Most of this traffic is Veeam Backup and Recovery shipping off-site, but there are no limits defined in there. Additionally, I just did a straight `robocopy` and saw exactly the same statistics.

- Hardware not capable. Well, a 5520's published performance figures are 225Mbps of 3DES data, and the Mikrotik doesn't publish numbers but it would be well over 10Mbps. The Mikrotik is at around 25%-33% CPU usage when doing these transfer tests. (Also, doing an HTTP transfer over the IPSec tunnel does hit close to 20Mbps.)

- Latency combined with TCP window size? Well, it's 15ms latency between the sites, so even a worst case 32KB window size of `32/0.015` is a maximum of 2.1MB/sec. Additionally, multiple concurrent transfers still just add up to 10Mbps, which doesn't support this theory.

- Maybe the source and destination are both shit? Well, the source can push 1.6GB/sec sustained sequential reads, so it's not that. The destination can do 200MB/sec sustained sequential writes, so it's not that either.
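To make the window-size arithmetic above easy to check, here is a minimal sketch of the calculation. It assumes the 15ms figure is the round-trip time and uses decimal units; the function name is made up for illustration.

```python
def max_throughput_kb_per_sec(window_kb: float, rtt_seconds: float) -> float:
    """Ceiling for one TCP stream: at most one full window per round trip."""
    return window_kb / rtt_seconds


window_kb = 32        # worst-case window size from the text, in KB
rtt_seconds = 0.015   # assumption: the 15ms figure is the round-trip time

kb_per_sec = max_throughput_kb_per_sec(window_kb, rtt_seconds)
mb_per_sec = kb_per_sec / 1000          # decimal MB per second
mbit_per_sec = kb_per_sec * 8 / 1000    # decimal Mbps

print(f"{mb_per_sec:.1f} MB/sec, about {mbit_per_sec:.0f} Mbps per stream")
```

Under those assumptions a single stream with a 32KB window tops out at roughly 17Mbps, which is above the 10Mbps ceiling being observed.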

This is a very odd situation. I've never seen anything manifest quite in this manner before.

Where else can I look?

On further investigation, I'm confident in pointing to the IPSec tunnel as the problem. I made a contrived example and did some tests directly between two public IP addresses on the sites, and then did the exact same test using the internal IP addresses, and I was able to replicate 20Mbps over the unencrypted internet, and only 10Mbps on the IPSec side.
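For anyone wanting to repeat this kind of side-by-side comparison, the following is a rough sketch of a raw TCP throughput test, not the exact tool I used. The function names, chunk size and port handling are all assumptions for illustration. Random payload is used so that any compression along the path doesn't flatter the result.

```python
import os
import socket
import threading
import time

CHUNK = 64 * 1024  # bytes per send/recv call (arbitrary choice)


def receive_all(server: socket.socket, result: dict) -> None:
    """Accept one connection and count bytes until the sender closes it."""
    conn, _ = server.accept()
    total = 0
    with conn:
        while True:
            data = conn.recv(CHUNK)
            if not data:
                break
            total += len(data)
    result["bytes"] = total


def measure_mbps(host: str, port: int, total_bytes: int) -> float:
    """Send total_bytes to host:port and return the sender-side rate in Mbps."""
    payload = os.urandom(CHUNK)
    sent = 0
    start = time.perf_counter()
    with socket.create_connection((host, port)) as s:
        while sent < total_bytes:
            n = min(CHUNK, total_bytes - sent)
            s.sendall(payload[:n])
            sent += n
    elapsed = time.perf_counter() - start
    return sent * 8 / elapsed / 1_000_000


def loopback_demo(total_bytes: int = 1_000_000) -> int:
    """Sanity check on 127.0.0.1; returns the byte count the receiver saw."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    result: dict = {}
    t = threading.Thread(target=receive_all, args=(server, result))
    t.start()
    measure_mbps("127.0.0.1", port, total_bytes)
    t.join()
    server.close()
    return result["bytes"]
```

The idea would be to run the receiving half at one site and call `measure_mbps` from the other, once against the public IP address and once against the internal (tunnelled) address, with a transfer large enough that the data still sitting in socket buffers when the timer stops is negligible.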

A previous version of this question had a red herring about HTTP. Forget about that; it was a faulty testing mechanism.

As per the suggestion from Xeon, echoed by my ISP when I asked them for support, I have set up a mangle rule to drop the MSS for the IPSec data to 1422, based on this calculation:

1422 (PAYLOAD) + 20 (IPSEC) + 4 (SPI) + 4 (ESP) + 16 (ESP-AES) + 0 (ESP Pad) + 1 (Pad Length) + 1 (Next Header) + 12 (ESP-SHA) = 1480

To fit inside the ISP's 1480 MTU. But alas this has made no effective difference.
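As a sanity check on the sum, here is a small sketch that adds up the byte counts exactly as I listed them. The labels and figures are taken from my calculation above and are not verified against the ESP specification.

```python
# Per-packet byte counts exactly as listed in the calculation above
components = {
    "PAYLOAD": 1422,
    "IPSEC": 20,
    "SPI": 4,
    "ESP": 4,
    "ESP-AES": 16,
    "ESP (Pad)": 0,
    "Pad Length": 1,
    "Next Header": 1,
    "ESP-SHA": 12,
}

ISP_MTU = 1480  # MTU quoted by the ISP

total = sum(components.values())
overhead = total - components["PAYLOAD"]

print(f"total={total} overhead={overhead} fits={total <= ISP_MTU}")
```

One caveat: MSS counts TCP payload only, so if 1422 is used as the MSS, the inner IP and TCP headers (20 bytes each without options) come on top of this total.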

After comparing Wireshark captures, the TCP session now negotiates an MSS of 1380 at both ends (after tweaking a few things and adding a buffer in case my maths sucks. Hint: it probably does). 1380 is also the ASA's default MSS, so it may have been negotiating this the whole time anyway.

I'm seeing some strange data in the tool inside the Mikrotik that I've been using to measure the traffic. It could be nothing. I didn't notice this before as I was using a filtered query, and I only saw this when I removed the filter.