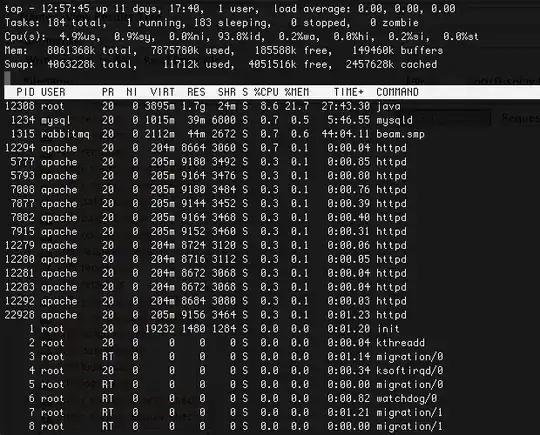

The first thing I would do is reduce the likelihood that any of those processes can take more CPU or disk IO time than the OS. I am going to assume your OS is Linux.

Be sure to back up any config files before editing them.

You may be able to get some hints about the OS's behavior just prior to the crash by looking at sar data.

sar -A | more

Look for a climb in memory or CPU usage leading up to the crash. You can have sar collect samples more often by editing /etc/cron.d/sysstat, assuming sysstat is installed and enabled.
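Since "sar -A" prints every report at once, it can help to pull only the CPU, memory, load and I/O reports for the window just before the crash. Here is a rough sketch; the function name sar_pre_crash is made up for illustration, and it assumes sysstat has been collecting data for the current day.

```shell
# Hypothetical helper: show only the sar reports that usually matter
# before a hang, limited to a time window on today's data.
sar_pre_crash() {
    start="${1:-00:00:00}"   # window start, HH:MM:SS
    end="${2:-23:59:59}"     # window end, HH:MM:SS
    sar -u -s "$start" -e "$end"   # CPU utilization
    sar -r -s "$start" -e "$end"   # memory utilization
    sar -q -s "$start" -e "$end"   # run queue length and load averages
    sar -b -s "$start" -e "$end"   # I/O and transfer rates
}
```

For example, "sar_pre_crash 02:00:00 03:00:00" would cover the hour between 2 and 3 AM.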

For each service account your processes run as, you could add lines like the following to the end of /etc/security/limits.conf.

apache soft priority 19

apache hard priority 19

rabbitmq soft priority 18

rabbitmq hard priority 18

mysql soft priority 10

mysql hard priority 10
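If you would rather script those entries than edit the file by hand, something along these lines should work. It is only a sketch: add_priority_limit is a hypothetical helper name, and it is worth trying it against a scratch copy of the file first.

```shell
# Hypothetical helper: append soft and hard priority limits for one
# service account, skipping any line that is already present.
add_priority_limit() {
    file="$1"; user="$2"; prio="$3"
    for kind in soft hard; do
        line="$user $kind priority $prio"
        # -x matches the whole line, -F treats the pattern as a fixed string
        grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
    done
}
```

After backing up the file, "add_priority_limit /etc/security/limits.conf apache 19" would add the two apache lines shown above, and running it a second time would change nothing.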

Then in each of the init scripts for your daemons, reduce the CPU and IO time allotted to them.

cp -p /etc/rc.d/init.d/some_init_script ~/`date '+%Y%m%d.%H%M'`.some_init_script

vi /etc/rc.d/init.d/some_init_script

Add the following on the second line of the script to reduce CPU and IO time slices:

renice 19 -p $$ > /dev/null 2>&1

ionice -c3 -p $$ > /dev/null 2>&1

Restart each of your services.
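After the restart it is worth confirming that the new priorities actually took effect. A minimal check might look like this; show_priority is a made-up name, and you would pass it the PID of one of your daemons.

```shell
# Hypothetical helper: print the nice value and the I/O scheduling
# class of one process (defaults to the current shell).
show_priority() {
    pid="${1:-$$}"
    ps -o pid=,ni=,comm= -p "$pid"   # PID, nice value, command name
    ionice -p "$pid"                 # I/O class and priority, e.g. "idle"
}
```

If the renice and ionice lines were picked up, the nice column should read 19 and ionice should report the idle class.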

Let's assume that sshd may still become unresponsive. If you install "screen", you can keep vmstat, iotop and other tools running in separate screen sessions. There are cheat sheets on using screen so I will not cover that here.
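Without going into screen itself, one way to get the monitors started is to launch each in its own detached, named session. This is just a sketch; the function name, the session names and the 5 second interval are arbitrary choices of mine.

```shell
# Hypothetical helper: start each monitor in a detached screen session.
start_monitors() {
    screen -dmS vmstat vmstat 5   # memory, swap and CPU every 5 seconds
    screen -dmS iotop iotop -o    # only processes doing I/O (needs root)
    screen -dmS top top           # overall process view
}
# Later: "screen -ls" lists the sessions, "screen -r vmstat" attaches
# to one of them, and Ctrl-a d detaches again.
```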

At this point, even if your services are getting out of control, you should still have the ability to ssh to the server assuming it is not triggering a panic.

You can further restrict the resources allocated to each daemon by pinning it to a specific core or CPU. This can be done with the command "taskset". See "man taskset" for more details on its usage.
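As a rough illustration of pinning an already running daemon, assuming you know its PID (pin_to_cpus is a hypothetical wrapper, not part of taskset):

```shell
# Hypothetical helper: pin a running process to a CPU list, then
# print the affinity that is now in effect.
pin_to_cpus() {
    cpus="$1"; pid="$2"
    taskset -cp "$cpus" "$pid"   # set affinity, e.g. "2" or "2,3" or "0-1"
    taskset -cp "$pid"           # show the current affinity list
}
```

For example, "pin_to_cpus 3 1234" would confine PID 1234 to core 3. To start a command already pinned, "taskset -c 3 some_command" does the same thing at launch.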

[edit] I should also add that this won't help under certain spinlock conditions. If the above does not help, you may have to run your applications in a VM and use a debug kernel or other debugging tools.