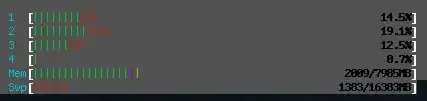

A redis server v2.8.4 is running on an Ubuntu 14.04 VPS with 8 GB RAM and 16 GB of swap space (on SSDs). However, htop shows that redis alone is taking up 22.4 GB of memory!

I do not think the redis database is this large, so why is it taking up so much memory?

Redis version: Redis server v=2.8.4 sha=00000000:0 malloc=jemalloc-3.4.1 bits=64 build=a44a05d76f06a5d9

After restarting redis-server

Update

redis-server eventually crashed after running out of memory: Mem and Swp both hit 100%, and then redis-server was killed along with other services.

From dmesg:

```
[165578.047682] Out of memory: Kill process 10155 (redis-server) score 834 or sacrifice child
[165578.047896] Killed process 10155 (redis-server) total-vm:31038376kB, anon-rss:5636092kB, file-rss:0kB
```
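The kernel's "Killed process" line reports two different sizes: `total-vm` (the whole virtual address space of the process) and `anon-rss` (anonymous memory that was actually resident in RAM at that moment). The kernel's "kB" here means 1024 bytes. The small Python sketch below parses such a line and converts both figures to GiB, so the two can be compared directly. The regex and the function name `parse_oom_kill` are illustrative assumptions, written only against the exact line format shown above.

```python
import re

# Matches a line such as:
# "Killed process 10155 (redis-server) total-vm:31038376kB, anon-rss:5636092kB, file-rss:0kB"
# (assumed format, taken from the dmesg output above)
KILL_RE = re.compile(
    r"Killed process (?P<pid>\d+) \((?P<name>[^)]+)\) "
    r"total-vm:(?P<total_vm>\d+)kB, anon-rss:(?P<anon_rss>\d+)kB, file-rss:(?P<file_rss>\d+)kB"
)

KIB_PER_GIB = 1024 * 1024  # the kernel's "kB" is 1024 bytes


def parse_oom_kill(line: str) -> dict:
    """Return the pid, process name and memory sizes (converted to GiB)."""
    m = KILL_RE.search(line)
    if m is None:
        raise ValueError("not an OOM 'Killed process' line")
    return {
        "pid": int(m["pid"]),
        "name": m["name"],
        "total_vm_gib": int(m["total_vm"]) / KIB_PER_GIB,
        "anon_rss_gib": int(m["anon_rss"]) / KIB_PER_GIB,
        "file_rss_gib": int(m["file_rss"]) / KIB_PER_GIB,
    }


if __name__ == "__main__":
    info = parse_oom_kill(
        "[165578.047896] Killed process 10155 (redis-server) "
        "total-vm:31038376kB, anon-rss:5636092kB, file-rss:0kB"
    )
    print(f"{info['total_vm_gib']:.1f} GiB virtual, {info['anon_rss_gib']:.1f} GiB resident")
```

For the log above this works out to roughly 29.6 GiB of virtual address space but only about 5.4 GiB resident in RAM when the process was killed. The difference is address space that was not resident at that instant (for example, pages that had been swapped out).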

I guess we really should worry about redis's memory usage getting higher over time! How can we troubleshoot this?
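One starting point is the `INFO memory` command (for example `redis-cli info memory`). It prints `key:value` lines under a `# Memory` header, including `used_memory` (bytes allocated by Redis through its allocator) and `used_memory_rss` (resident bytes as seen by the operating system). Comparing these two numbers shows whether the dataset itself is large or whether the process footprint has grown well beyond it. The Python sketch below parses that text output. The helper names and the `SAMPLE` values are hypothetical and were not taken from the server in this question.

```python
def parse_info(text: str) -> dict:
    """Parse Redis INFO output ("key:value" lines, "# Section" headers) into a dict of strings."""
    fields = {}
    for raw in text.splitlines():  # splitlines() also handles the \r\n endings Redis uses
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and section headers such as "# Memory"
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def memory_summary(fields: dict) -> str:
    """Summarise allocator-reported vs OS-reported memory (hypothetical helper)."""
    used = int(fields["used_memory"])     # bytes allocated by Redis
    rss = int(fields["used_memory_rss"])  # bytes resident, as reported by the OS
    ratio = rss / used if used else float("nan")
    return f"used={used / 2**30:.2f} GiB rss={rss / 2**30:.2f} GiB rss/used={ratio:.2f}"


# Hypothetical sample, NOT output from the server in this question.
SAMPLE = """# Memory
used_memory:2147483648
used_memory_human:2.00G
used_memory_rss:3221225472
used_memory_peak:4294967296
mem_fragmentation_ratio:1.50
mem_allocator:jemalloc-3.4.1
"""

if __name__ == "__main__":
    print(memory_summary(parse_info(SAMPLE)))
```

To use it on a real server, save the output of `redis-cli info memory` to a file and pass its contents to `parse_info`. Sampling it periodically would show which of the two figures is growing over time.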