To understand the following answer, some background is required:

Am I performing the tests in a wrong way?

Yes, you're performing the test somewhat incorrectly. The problem is that your test uses a single PERSISTENT connection to send 10 requests. You can easily check this by running the following test, which sends only one request per connection; you won't get any connection resets:

httperf --server=127.0.0.1 --port=80 --uri=/ --num-conns=10 --num-calls=1

Why am I getting this connection resets?

If you look at the nginx documentation, you will find this:

Old worker processes, receiving a command to shut down, stop accepting new connections and continue to service current requests until all such requests are serviced. After that, the old worker processes exit.

This is true, but the documentation doesn't mention what happens to persistent connections. I found the answer in an old mailing list post. After the currently running request is served, nginx initiates the close of the persistent connection by sending [FIN, ACK] to the client.

To check this, I used Wireshark and configured the server with one simple worker that sleeps for 5 seconds on each request and then replies. I used the following command to send the requests:

httperf --server=127.0.0.1 --port=80 --uri=/ --num-conns=1 --num-calls=2

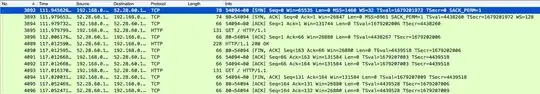

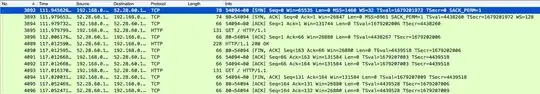

After issuing the command above, I reloaded nginx (while it was handling the first request). Here are the packets captured by Wireshark:

- 3892-3894 - usual TCP connection establishment.

- 3895 - client sent first request.

- 3896 - server acknowledges 3895.

- here nginx reload was executed.

- 4089 - server sent response.

- 4090 - server sent close connection signal.

- 4091 - client acknowledges 4089.

- 4092 - client acknowledges 4090.

- 4093 - client sent second request (WTF?)

- 4094 - client sent close connection signal.

- 4095 - server acknowledges 4093.

- 4096 - server acknowledges 4094.

It's okay that the server didn't send any response to the second request. According to the description of TCP connection termination:

The side that has terminated can no longer send any data into the connection, but the other side can. The terminating side should continue reading the data until the other side terminates as well.
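The half-close behaviour described in that quote can be reproduced locally. Here is a minimal sketch using plain Python sockets rather than nginx and httperf; the function name `half_close_demo` and the payload strings are made up for illustration. The "server" sends one reply and then shuts down only its sending direction, which puts a FIN on the wire much like packet 4090, while the "client" is still able to send afterwards and the server is still able to read it.

```python
import socket
import threading


def half_close_demo():
    """Return (bytes the client read before the server's FIN,
    bytes the server read after sending its FIN)."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]
    result = {}

    def server():
        conn, _ = listener.accept()
        with conn:
            conn.sendall(b"response 1")
            # Close only the sending direction: this sends a FIN,
            # but the socket can still be read from.
            conn.shutdown(socket.SHUT_WR)
            chunks = []
            while True:
                data = conn.recv(1024)
                if not data:  # client closed its direction too
                    break
                chunks.append(data)
            result["server_read"] = b"".join(chunks)

    thread = threading.Thread(target=server)
    thread.start()

    with socket.create_connection(("127.0.0.1", port)) as client:
        received = []
        while True:
            data = client.recv(1024)
            if not data:  # empty read: the server's FIN has arrived
                break
            received.append(data)
        # The server will not send anything more, but the
        # client-to-server direction is still open.
        client.sendall(b"request 2")
        client.shutdown(socket.SHUT_WR)

    thread.join()
    listener.close()
    return b"".join(received), result["server_read"]


if __name__ == "__main__":
    print(half_close_demo())
```

The server in this sketch reads the late data and throws it away, which matches what the capture shows: the second request is acknowledged at the TCP level but never answered.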

The next question is why 4093 happened after the client received the close connection signal from the server.

Probably this is the answer:

I would say that the POST happens at the same time as the FIN, i.e. the client sent the POST because its TCP stack did not process the FIN from the server yet. Note that packet capturing is done before the data are processed by the system.

I can't comment on this, since I'm not an expert in networking. Maybe somebody else can give a more insightful answer as to why the second request was sent.

UPD: The previously linked question is not relevant. I asked a separate question about the problem.

Is there a solution to this problem?

As was mentioned in the mailing list:

HTTP/1.1 clients are required to handle keepalive connection close, so this shouldn't be a problem.

I think it should be handled on the client side: if the connection is closed by the server, the client should open a new connection and retry the request.
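As a rough illustration of that client-side handling, here is a minimal sketch built on Python's standard `http.client`. The class name `RetryingClient`, the `max_retries` parameter and the `reconnects` counter are hypothetical and exist only for this example. It also assumes the request is idempotent (a plain GET), since blindly resending a non-idempotent request could apply it twice.

```python
import http.client


class RetryingClient:
    """Keep-alive HTTP client that reopens the connection and retries
    when the server has closed the persistent connection."""

    # Errors that typically mean "the peer closed or reset the connection".
    CLOSED_ERRORS = (
        http.client.RemoteDisconnected,
        ConnectionResetError,
        ConnectionAbortedError,
        BrokenPipeError,
    )

    def __init__(self, host, port, max_retries=1):
        self.host = host
        self.port = port
        self.max_retries = max_retries
        self.conn = None
        self.reconnects = 0  # how many times a dead connection was dropped

    def get(self, path):
        for attempt in range(self.max_retries + 1):
            if self.conn is None:
                self.conn = http.client.HTTPConnection(
                    self.host, self.port, timeout=10
                )
            try:
                self.conn.request("GET", path)
                response = self.conn.getresponse()
                return response.status, response.read()
            except self.CLOSED_ERRORS:
                # The server closed the keep-alive connection (for example
                # after a reload): drop it and try again on a fresh one.
                self.conn.close()
                self.conn = None
                self.reconnects += 1
                if attempt == self.max_retries:
                    raise
```

Usage would look like `client = RetryingClient("127.0.0.1", 80)` followed by repeated `client.get("/")` calls; a reload between two calls should then cost one reconnect instead of a failed request.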

I actually need a load balancer which I can dynamically add and remove servers from it, any better solutions which fits my problem?

I don't know about other servers, so I can't advise here.

As long as your clients can handle connection close properly, there shouldn't be any reason preventing you from using nginx.