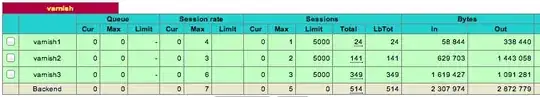

I'm attempting to use HAProxy 1.4.22 with `balance uri` and `hash-type consistent` to load balance across 3 Varnish cache backends. My understanding is that this will never produce a perfect balance between servers, but it should be better than the results I'm seeing.

The relevant part of my HAProxy config looks like this:

    backend varnish
        # hash balancing
        balance uri
        hash-type consistent
        server varnish1 10.0.0.1:80 check observe layer7 maxconn 5000 id 1 weight 75
        server varnish2 10.0.0.2:80 check observe layer7 maxconn 5000 id 2 weight 50
        server varnish3 10.0.0.3:80 check observe layer7 maxconn 5000 id 3 weight 50

I've been testing this myself by pointing my own hosts file at the new proxy server. I even tried re-routing the popular homepage to a separate backend that's balanced round-robin to get that outlier off the hash-balanced backend, and that seems to work fine. I boosted varnish1 to a weight of 75 as a test, but it didn't seem to help. My load is still being balanced very disproportionately, and I don't understand why.
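For reference, the homepage re-routing is along these lines. This is a minimal sketch rather than my exact config: the frontend name `www`, the ACL name `is_homepage`, and the backend name `varnish_rr` are placeholders, the `bind` address is assumed, and the server options are trimmed down.

```
frontend www
    bind :80
    mode http
    # Exact match on the request path, so only the homepage is caught
    acl is_homepage path /
    use_backend varnish_rr if is_homepage
    # Everything else goes to the URI-hashed backend shown above
    default_backend varnish

backend varnish_rr
    mode http
    # Plain round-robin, so the hot homepage is spread across all caches
    balance roundrobin
    server varnish1 10.0.0.1:80 check
    server varnish2 10.0.0.2:80 check
    server varnish3 10.0.0.3:80 check
```

The trade-off is that the homepage ends up cached on all three Varnish servers, which is fine for a single hot URL.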

One interesting tidbit is that if I reverse the IDs, the higher ID will ALWAYS get the lion's share of the traffic. Why would the ID affect balancing?

Tweaking weights is all well and good, but as my site's traffic patterns change (we are a news site, and the most popular post can change rapidly), I don't want to have to constantly tweak weights. I understand it'll never be in perfect balance, but I was expecting better results than one server with a lower weight getting 25 times more connections than another server with a higher weight.

My goal has been to reduce DB and app server load by reducing duplication at the cache level, which is what HAProxy URI balancing is recommended for. But if it's going to be this far out of balance, it won't work for me at all.

Any advice?