Recently I switched to NGINX/PHP-FPM to run my forums.

The majority of the time the site runs beautifully: it's seriously quick and I'm really happy with it. It's on a 13-core, 30+ GB memory instance on AWS, so it has ample resources (it was on 8 cores and 16 GB before, with Apache).

So, what happens is, the majority of the time we have 6 or 7 PHP-FPM processes and all is well with the world:

      PID USER     PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
    27676 apache   20   0  499m  34m  19m R 49.2  0.1  0:06.33  php-fpm
    27669 apache   20   0  508m  48m  24m R 48.2  0.1  0:10.84  php-fpm
    27661 apache   20   0  534m  75m  26m R 45.9  0.2  0:16.18  php-fpm
    27671 apache   20   0  531m  69m  21m R 43.9  0.2  0:09.85  php-fpm
    27672 apache   20   0  501m  41m  23m R 32.9  0.1  0:09.18  php-fpm
    27702 apache   20   0  508m  40m  16m R 23.6  0.1  0:00.94  php-fpm

Well, kinda well. They use a lot of CPU, but there are only a few of them, so it's kinda OK.

Then, seemingly out of nowhere, a bunch of processes get spawned (last time we had 52), each one using 8% CPU. You don't need to be good at this to know that 52 * 8% (over 400% in total) is A LOT.
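To put that number in perspective, here is a minimal sketch of the arithmetic. It assumes `top` is in its default mode, where a process's %CPU is measured against a single core, so 100% means one core fully busy.

```python
# Figures taken from the post: 52 php-fpm workers at 8% CPU each.
workers = 52
cpu_percent_per_worker = 8.0

# Assumption: per-process %CPU is relative to ONE core (top's default mode),
# so dividing the total by 100 gives the number of cores kept fully busy.
total_cpu_percent = workers * cpu_percent_per_worker
cores_equivalent = total_cpu_percent / 100

print(f"total: {total_cpu_percent:.0f}% (about {cores_equivalent:.2f} cores fully busy)")
# prints: total: 416% (about 4.16 cores fully busy)
```

Whether that is "too much" depends on how many cores the instance really exposes to the OS.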

I currently have max_children set to 40 (it was 50):

    pm.max_children = 40
    pm.start_servers = 4
    pm.min_spare_servers = 2
    pm.max_spare_servers = 6
    pm.max_requests = 100

The memory limit in php.ini is 128 MB.
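As a rough sanity check on memory, the sketch below multiplies `pm.max_children` by the php.ini memory limit. This is only an upper bound on script memory under the assumption that every child hits the limit at the same moment. It is not a measurement, and the real per-process footprint (shared libraries, opcode cache, and so on) will differ.

```python
def worst_case_memory_mb(max_children: int, memory_limit_mb: int) -> int:
    """Upper bound on PHP script memory if every child hits memory_limit at once."""
    return max_children * memory_limit_mb


MEMORY_LIMIT_MB = 128  # memory limit from php.ini, as stated in the post

# 40 is the current pm.max_children, 50 is the previous value.
for max_children in (40, 50):
    total = worst_case_memory_mb(max_children, MEMORY_LIMIT_MB)
    print(f"max_children={max_children}: up to {total} MB ({total / 1024:.2f} GB)")
# prints:
# max_children=40: up to 5120 MB (5.00 GB)
# max_children=50: up to 6400 MB (6.25 GB)
```

Both figures are well below the 30+ GB on the instance, which suggests memory is not the limiting factor for these settings.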

So, I understand why I get so many processes; that's fine, I have them configured that way. What I'm curious about is: if each process uses 8% CPU, is that too much? And are my processes maybe staying alive too long?

    USER     PID   %CPU %MEM    VSZ   RSS TTY STAT START TIME COMMAND
    root     26575  0.0  0.0 499572  4944 ?   Ss   18:23 0:01 php-fpm: master process (/etc/php-fpm.conf)
    apache   28161 16.1  0.1 516644 47588 ?   S    19:06 0:08 php-fpm: pool www
    apache   28164 18.0  0.1 525044 59644 ?   S    19:06 0:07 php-fpm: pool www
    apache   28166 18.6  0.1 513152 41388 ?   R    19:06 0:06 php-fpm: pool www
    apache   28167 23.2  0.1 515520 47092 ?   S    19:06 0:07 php-fpm: pool www
    apache   28168 15.2  0.1 515804 49320 ?   S    19:06 0:04 php-fpm: pool www
    apache   28171 17.3  0.1 514484 43752 ?   S    19:06 0:04 php-fpm: pool www

As I write this, it's 7:08 PM, so the child processes have been running for 2 minutes and have probably served a lot of requests in that time (there are 700 people on the forums at the moment).
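To summarize the listing rather than eyeball it, here is a small sketch that parses the six pool-worker rows above and totals their CPU and resident memory. The function name `summarize` is made up for illustration. Note that `ps` reports %CPU as CPU time divided by the time the process has been running, so these values are lifetime averages and not directly comparable with the instantaneous figures from `top`.

```python
# The six "pool www" rows from the ps output above, copied verbatim.
PS_OUTPUT = """\
apache 28161 16.1 0.1 516644 47588 ? S 19:06 0:08 php-fpm: pool www
apache 28164 18.0 0.1 525044 59644 ? S 19:06 0:07 php-fpm: pool www
apache 28166 18.6 0.1 513152 41388 ? R 19:06 0:06 php-fpm: pool www
apache 28167 23.2 0.1 515520 47092 ? S 19:06 0:07 php-fpm: pool www
apache 28168 15.2 0.1 515804 49320 ? S 19:06 0:04 php-fpm: pool www
apache 28171 17.3 0.1 514484 43752 ? S 19:06 0:04 php-fpm: pool www
"""


def summarize(ps_text: str) -> dict:
    """Total %CPU and mean RSS for rows in `ps aux` column order."""
    # Split into at most 11 fields so the COMMAND column keeps its spaces.
    rows = [line.split(None, 10) for line in ps_text.splitlines() if line.strip()]
    cpu = [float(row[2]) for row in rows]    # %CPU column
    rss_kb = [int(row[5]) for row in rows]   # RSS column, in KiB
    return {
        "workers": len(rows),
        "total_cpu_percent": sum(cpu),
        "mean_rss_mb": sum(rss_kb) / len(rss_kb) / 1024,
    }


stats = summarize(PS_OUTPUT)
print(f"{stats['workers']} workers, "
      f"{stats['total_cpu_percent']:.1f}% CPU in total, "
      f"{stats['mean_rss_mb']:.1f} MB mean RSS")
# prints: 6 workers, 108.4% CPU in total, 47.0 MB mean RSS
```

At roughly 47 MB resident per worker, the processes sit well under the 128 MB limit.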

So, I'm super keen to hear advice/criticism/opinions. I've had so much downtime lately that I'm verging on setting up Apache again, but I'd love to stick with this.

Thanks in advance.

EDIT

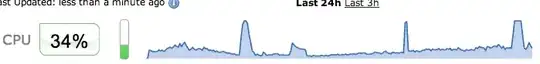

This is the Bitnami graph showing the spikes and how quickly they occur (it covers 24 hours).

EDIT #2

nginx.conf can be found here.

EDIT #3

I bumped my numbers up. It's looking good, but it still makes me a little nervous.

    pm.max_children = 100
    pm.start_servers = 25
    pm.min_spare_servers = 25
    pm.max_spare_servers = 50
    pm.max_requests = 500
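For the dynamic process manager these values have to be consistent with each other. The sketch below checks the relationships as I understand them from the PHP-FPM documentation: spare-server bounds must not exceed `pm.max_children`, and `pm.start_servers` must lie between the two spare-server values. The helper `check_dynamic_pool` is hypothetical and not part of PHP-FPM.

```python
def check_dynamic_pool(max_children: int, start_servers: int,
                       min_spare: int, max_spare: int) -> list:
    """Return a list of human-readable problems; empty means consistent."""
    problems = []
    if min_spare > max_spare:
        problems.append("pm.min_spare_servers is greater than pm.max_spare_servers")
    if max_spare > max_children:
        problems.append("pm.max_spare_servers is greater than pm.max_children")
    if not (min_spare <= start_servers <= max_spare):
        problems.append("pm.start_servers is outside the min/max spare range")
    return problems


# Settings from EDIT #3.
print(check_dynamic_pool(max_children=100, start_servers=25,
                         min_spare=25, max_spare=50))
# prints: []

# A deliberately inconsistent example (made-up values) to show a failure.
print(check_dynamic_pool(max_children=40, start_servers=10,
                         min_spare=2, max_spare=6))
# prints: ['pm.start_servers is outside the min/max spare range']
```

Both the original settings and the bumped ones pass these checks, so the configuration itself is internally consistent.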

EDIT #4

So, I've had a few more downtimes, and I've set up Splunk and New Relic to help me monitor what is going on. It seems that there is no CPU wait time (0.0%wa) and I still have free memory.

    top - 17:30:37 up 10 days, 19:20, 2 users, load average: 24.61, 37.34, 25.68
    Tasks: 151 total, 20 running, 131 sleeping, 0 stopped, 0 zombie
    Cpu0 : 71.8%us, 27.9%sy, 0.0%ni, 0.0%id, 0.0%wa, 0.0%hi, 0.3%si, 0.0%st
    Cpu1 : 73.7%us, 26.3%sy, 0.0%ni, 0.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st
    Cpu2 : 70.8%us, 29.2%sy, 0.0%ni, 0.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st
    Cpu3 : 69.3%us, 30.7%sy, 0.0%ni, 0.0%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st
    Mem: 35062648k total, 28747980k used, 6314668k free, 438032k buffers
    Swap: 0k total, 0k used, 0k free, 16527768k cached
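Two numbers in this output are easier to read after a little arithmetic: the load average relative to the number of CPUs, and the memory in use once buffers and page cache are excluded. The sketch below assumes the four `Cpu` lines shown are all the CPUs the OS sees. The post does not confirm that (it mentions 13 cores earlier), so treat `cpus_shown` as an assumption.

```python
# Values copied from the top output above.
load_1min = 24.61
cpus_shown = 4           # Cpu0..Cpu3; ASSUMPTION that no CPU lines were cut off
mem_used_kb = 28747980
buffers_kb = 438032
cached_kb = 16527768

# A load per CPU well above 1 means runnable tasks are queueing for CPU time.
load_per_cpu = load_1min / cpus_shown

# Buffers and page cache are reclaimable, so subtract them from "used".
app_used_kb = mem_used_kb - buffers_kb - cached_kb

print(f"load per CPU: {load_per_cpu:.1f}")
print(f"memory used by processes: {app_used_kb / 1024 / 1024:.1f} GB")
# prints:
# load per CPU: 6.2
# memory used by processes: 11.2 GB
```

Together with 0.0%id on every CPU line, this points at CPU saturation (about 70% user, 30% system) rather than memory pressure or I/O wait.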

Watching Splunk, it doesn't seem like it's traffic. I do get hammered by Googlebot a lot and I was suspicious of it, but I've found nothing concrete.
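One way to make the Googlebot suspicion more concrete would be to count its requests per minute in the nginx access log and compare the busiest minutes with the spike times. The sketch below assumes the default "combined" log format. The function `requests_per_minute` is hypothetical, and the sample lines are invented purely to show the shape of the input; they are not real traffic from this site.

```python
import re
from collections import Counter

# Matches the timestamp and the final quoted field (user agent) of a
# "combined" format line. ASSUMPTION: the log uses that default format.
LINE_RE = re.compile(r'\[(?P<ts>[^\]]+)\] "[^"]*" \d+ \S+ "[^"]*" "(?P<ua>[^"]*)"')


def requests_per_minute(lines, agent_substring="Googlebot"):
    """Count requests per minute whose user agent contains agent_substring."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match and agent_substring in match.group("ua"):
            # "10/Oct/2013:17:30:05 +0000" -> "10/Oct/2013:17:30"
            counts[match.group("ts")[:17]] += 1
    return counts


# Invented sample lines (documentation-range IPs, made-up date and paths).
SAMPLE_LOG = [
    '192.0.2.10 - - [10/Oct/2013:17:30:05 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '192.0.2.10 - - [10/Oct/2013:17:30:41 +0000] "GET /b HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    '203.0.113.7 - - [10/Oct/2013:17:30:50 +0000] "GET /c HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    '192.0.2.10 - - [10/Oct/2013:17:31:02 +0000] "GET /d HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
]

counts = requests_per_minute(SAMPLE_LOG)
for minute, hits in sorted(counts.items()):
    print(minute, hits)
```

In real use the lines would come from the access log file itself (its path depends on the nginx configuration), for example by passing an open file object instead of `SAMPLE_LOG`.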