There's a low probability of complete chassis failure...

You'll likely encounter issues in your facility before sustaining a full failure of a blade enclosure.

My experience is primarily with HP C7000 and HP C3000 blade enclosures. I've also managed Dell and Supermicro blade solutions. Vendor matters a bit. But in summary, the HP gear has been stellar, Dell has been fine, and Supermicro was lacking in quality, resiliency and was just poorly-designed. I've never experienced failures on the HP and Dell side. The Supermicro did have serious outages, forcing us to abandon the platform. On the HP's and Dells, I've never encountered a full chassis failure.

- I've had thermal events. The air-conditioning failed at a co-location facility sending temperatures to 115°F/46°C for 10 hours.

- Power surges and line failures: Losing one side of an A/B feed. Individual power supply failures. There are usually six power supplies in my blade setups, so there's ample warning and redundancy.

- Individual blade server failures. One server's issues do not affect the others in the enclosure.

- An in-chassis fire...

I've seen a variety of environments and have had the benefit of installing in ideal data center conditions, as well as some rougher locations. On the HP C7000 and C3000 side, the main thing to consider is that the chassis is entirely modular. The components are designed minimize the impact of a component failure affecting the entire unit.

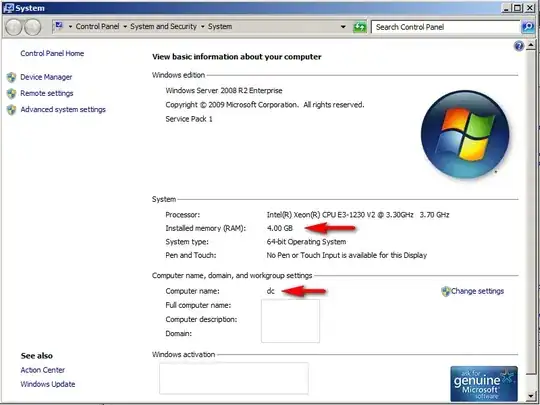

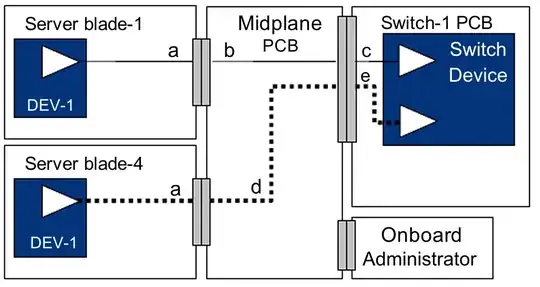

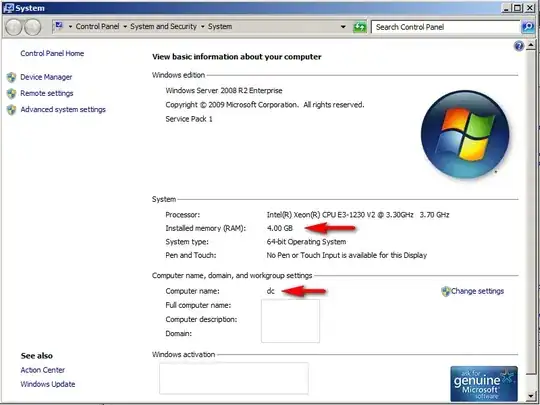

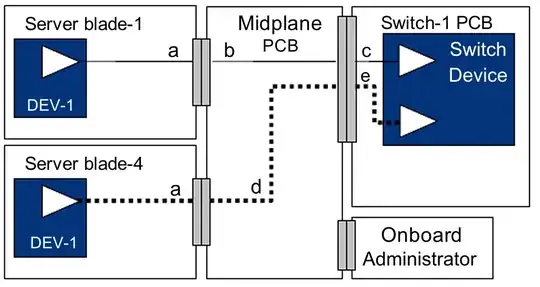

Think of it like this... The main C7000 chassis is comprised of front, (passive) midplane and backplane assemblies. The structural enclosure simply holds the front and rear components together and supports the systems' weight. Nearly every part can be replaced... believe me, I've disassembled many. The main redundancies are in fan/cooling, power and networking an management. The management processors (HP's Onboard Administrator) can be paired for redundancy, however the servers can run without them.

Fully-populated enclosure - front view. The six power supplies at the bottom run the full depth of the chassis and connect to a modular power backplane assembly at the rear of the enclosure. Power supply modes are configurable: e.g. 3+3 or n+1. So the enclosure definitely has power redundancy.

Fully-populated enclosure - rear view. The Virtual Connect networking modules in the rear have an internal cross-connect, so I can lose one side or the other and still maintain network connectivity to the servers. There are six hot-swappable power supplies and ten hot-swappable fans.

Empty enclosure - front view. Note that there's really nothing to this part of the enclosure. All connections are passed-through to the modular midplane.

Midplane assembly removed. Note the six power feeds for the midplane assembly at the bottom.

Midplane assembly. This is where the magic happens. Note the 16 separate downplane connections: one for each of the blade servers. I've had individual server sockets/bays fail without killing the entire enclosure or affecting the other servers.

Power supply backplane(s). 3ø unit below standard single-phase module. I changed power distribution at my data center and simply swapped the power supply backplane to deal with the new method of power delivery

Chassis connector damage. This particular enclosure was dropped during assembly, breaking the pins off of a ribbon connector. This went unnoticed for days, resulting in the running blade chassis catching FIRE...

Here are the charred remains of the midplane ribbon cable. This controlled some of the chassis temperature and environment monitoring. The blade servers within continued to run without incident. The affected parts were replaced at my leisure during scheduled downtime, and all was well.