What is going on here?! I am baffled.

```
serveradmin@FILESERVER:/Volumes/MercuryInternal/test$ sudo dd if=/dev/zero of=/Volumes/MercuryInternal/test/test.fs bs=4096k count=10000
10000+0 records in
10000+0 records out
41943040000 bytes (42 GB) copied, 57.0948 s, 735 MB/s

serveradmin@FILESERVER:/Volumes/MercuryInternal/test$ sudo dd if=/Volumes/MercuryInternal/test/test.fs of=/dev/null bs=4096k count=10000
10000+0 records in
10000+0 records out
41943040000 bytes (42 GB) copied, 116.189 s, 361 MB/s
```
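dd's figures are simply bytes copied divided by elapsed time, in decimal megabytes (10^6 bytes). Reproducing them from the transcript makes the gap explicit: the same 41,943,040,000 bytes took roughly twice as long to read back as to write. A minimal sketch; the function name `dd_rate_mb_s` is illustrative only.

```python
BLOCK_SIZE = 4096 * 1024   # bs=4096k: 4096 KiB per block
COUNT = 10000              # count=10000

def dd_rate_mb_s(total_bytes: int, seconds: float) -> float:
    """Throughput as dd prints it: decimal MB (10**6 bytes) per second."""
    return total_bytes / seconds / 1e6

total = BLOCK_SIZE * COUNT
write_rate = dd_rate_mb_s(total, 57.0948)   # elapsed time of the write run
read_rate = dd_rate_mb_s(total, 116.189)    # elapsed time of the read run

print(total)              # 41943040000
print(round(write_rate))  # 735
print(round(read_rate))   # 361
```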

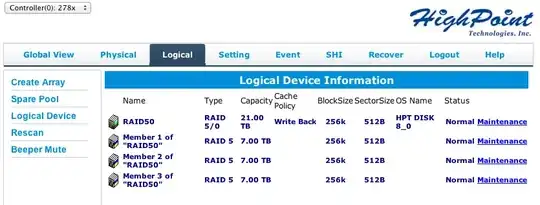

Of note: my RAID 50 is three RAID 5 sets of 8 disks each. This might not be the best configuration for speed.
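As a sanity check on the layout: three 8-disk RAID 5 sets leave 21 disks' worth of data capacity, which matches the 19.1 TiB that gdisk reports for /dev/sdb. The sketch below does that arithmetic. The per-disk read rate is a hypothetical round number, not a measured or datasheet figure; it only shows the rough scale a 21-data-disk stripe could reach if sequential reads scaled perfectly, which real arrays rarely do.

```python
SETS = 3                        # RAID 5 sets, striped together as RAID 0
DISKS_PER_SET = 8
DISK_BYTES = 1_000_000_000_000  # nominal decimal "1 TB"; real drives are slightly larger
SECTORS = 41020686336           # disk size gdisk reports for /dev/sdb
SECTOR_BYTES = 512
ASSUMED_DISK_MB_S = 100         # HYPOTHETICAL per-disk sequential read rate, not measured

def raid50_data_disks(sets: int, disks_per_set: int) -> int:
    """Each RAID 5 set gives up one disk's worth of capacity to parity."""
    return sets * (disks_per_set - 1)

data_disks = raid50_data_disks(SETS, DISKS_PER_SET)
nominal_tib = data_disks * DISK_BYTES / 2**40
reported_tib = SECTORS * SECTOR_BYTES / 2**40
ideal_read_mb_s = data_disks * ASSUMED_DISK_MB_S

print(data_disks)              # 21
print(round(nominal_tib, 1))   # 19.1
print(round(reported_tib, 1))  # 19.1
print(ideal_read_mb_s)         # 2100
```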

OS: Ubuntu 12.04.1 x64

Hardware RAID: RocketRAID 2782 (24-port controller)

Hard drives: Seagate Barracuda ES.2 1 TB

Drivers: v1.1 open-source Linux drivers

That is 24 x 1 TB drives, partitioned with parted and formatted as ext4. The I/O scheduler was noop; I changed it to deadline, with no noticeable performance benefit or cost.
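To confirm which I/O scheduler is actually in effect, Linux exposes it per device in `/sys/block/<device>/queue/scheduler`, with the active one in square brackets (for example `noop [deadline] cfq`). A small sketch that parses that file; `read_scheduler` and the default device name `sdb` are assumptions for this setup.

```python
from pathlib import Path

def active_scheduler(text: str) -> str:
    """Return the bracketed entry, e.g. 'noop [deadline] cfq' -> 'deadline'."""
    for name in text.split():
        if name.startswith("[") and name.endswith("]"):
            return name[1:-1]
    raise ValueError(f"no active scheduler in {text!r}")

def read_scheduler(device: str = "sdb") -> str:
    # The kernel lists the available schedulers here and brackets the active one.
    path = Path("/sys/block") / device / "queue" / "scheduler"
    return active_scheduler(path.read_text())

if __name__ == "__main__":
    print(active_scheduler("noop [deadline] cfq"))  # deadline
```

The scheduler can be switched at runtime by writing a name into the same file as root, e.g. `echo deadline | sudo tee /sys/block/sdb/queue/scheduler`; the change does not survive a reboot.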

```
serveradmin@FILESERVER:/Volumes/MercuryInternal/test$ sudo gdisk -l /dev/sdb
GPT fdisk (gdisk) version 0.8.1

Partition table scan:
  MBR: protective
  BSD: not present
  APM: not present
  GPT: present

Found valid GPT with protective MBR; using GPT.
Disk /dev/sdb: 41020686336 sectors, 19.1 TiB
Logical sector size: 512 bytes
Disk identifier (GUID): 95045EC6-6EAF-4072-9969-AC46A32E38C8
Partition table holds up to 128 entries
First usable sector is 34, last usable sector is 41020686302
Partitions will be aligned on 2048-sector boundaries
Total free space is 5062589 sectors (2.4 GiB)

Number  Start (sector)    End (sector)  Size       Code  Name
   1            2048     41015625727   19.1 TiB    0700  primary
```
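A quick check that the partition is aligned: partition 1 starts at sector 2048, i.e. 1 MiB into the 512-byte-sector disk, and the RAID block size given elsewhere in this post is 256K. The sketch only verifies that the partition start falls on a 256 KiB boundary; it assumes the controller presents its 256K blocks starting at sector 0 of /dev/sdb with no extra offset.

```python
SECTOR = 512           # logical sector size reported by gdisk
PART_START = 2048      # first sector of partition 1
CHUNK = 256 * 1024     # 256K RAID block size (assumed to mean 256 KiB)

start_bytes = PART_START * SECTOR
print(start_bytes)               # 1048576 (1 MiB)
print(start_bytes % CHUNK == 0)  # True: the partition begins on a block boundary
```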

To me this should be working fine. I can't think of anything that would cause this other than a fundamental driver problem. I can't get much (if any) higher than 361 MB/s. Is this hitting the SATA 2 link speed? It shouldn't be, given that this is a PCIe 2.0 card. Or maybe it is some caching quirk: I do have write-back caching enabled.
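One way to answer the SATA 2 question is to compare link budgets. SATA 2 and PCIe 2.0 both use 8b/10b encoding, so a 3 Gb/s SATA 2 link carries about 300 MB/s of data and each 5 GT/s PCIe 2.0 lane about 500 MB/s per direction. The sketch below assumes the card sits in an x8 slot and that each of the 24 drives has its own link to the controller; check both against the card's documentation.

```python
def link_mb_s(gbit_per_s: float, lanes: int = 1) -> float:
    """Payload MB/s for a link using 8b/10b encoding (10 line bits carry 1 byte)."""
    bytes_per_s = gbit_per_s * 1e9 / 10
    return bytes_per_s / 1e6 * lanes

sata2_per_drive = link_mb_s(3.0)        # SATA 2: 3 Gb/s per drive link
pcie2_x8 = link_mb_s(5.0, lanes=8)      # PCIe 2.0, 5 GT/s per lane; x8 is an ASSUMPTION
all_drive_links = sata2_per_drive * 24  # ASSUMES one dedicated link per drive

print(sata2_per_drive)  # 300.0
print(pcie2_x8)         # 4000.0
print(all_drive_links)  # 7200.0
```

Under those assumptions neither the per-drive links nor the host bus should cap the array at 361 MB/s; the closeness of that figure to a single SATA 2 link may be a coincidence.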

Does anyone have any suggestions? Tests for me to perform? Or if you require more information, I am happy to provide it!

This is a video file server for editing machines, so we prefer fast reads over fast writes. I was just expecting more from RAID 50 across 24 drives...

EDIT: (hdparm results)

```
serveradmin@FILESERVER:/Volumes/MercuryInternal$ sudo hdparm -Tt /dev/sdb

/dev/sdb:
 Timing cached reads:   17458 MB in  2.00 seconds = 8735.50 MB/sec
 Timing buffered disk reads: 884 MB in  3.00 seconds = 294.32 MB/sec
```

EDIT2: (config details)

Also, I am using a RAID block size of 256K. I was told a larger block size is better for large files (in my case, large video files).
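With a 256K RAID block size, ext4 can be told about the array geometry through the `stride` and `stripe_width` extended options of `mkfs.ext4` (`mke2fs -E`). A sketch of the arithmetic, assuming the default 4 KiB ext4 block size and counting all 21 data disks. For RAID 50 it is debatable whether the stripe width should span all three sets (21 data disks) or a single set (7), depending on how the controller lays out stripes. The printed command is for illustration only: running it reformats the partition and destroys its data.

```python
FS_BLOCK = 4096        # ext4 block size in bytes (ASSUMED default 4 KiB)
CHUNK = 256 * 1024     # 256K RAID block size
DATA_DISKS = 21        # 3 sets x (8 - 1); use 7 if counting a single RAID 5 set

stride = CHUNK // FS_BLOCK          # filesystem blocks per RAID block
stripe_width = stride * DATA_DISKS  # filesystem blocks per full data stripe

print(stride)        # 64
print(stripe_width)  # 1344
print(f"mkfs.ext4 -E stride={stride},stripe_width={stripe_width} /dev/sdb1")
```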

EDIT3: (Bonnie++ Results. Would love some guidance with this!)