So, for various reasons, I've ended up with a single 45TB Linux logical volume with no partition table, formatted directly as NTFS and holding 28TB of data (the filesystem itself is 28TB).

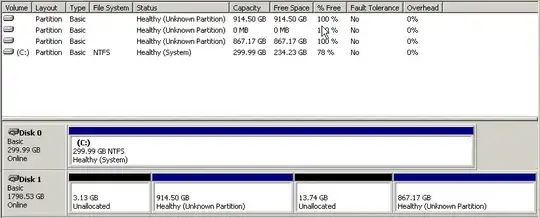

The filesystem was created in Linux and mounts fine in Linux. The problem comes when I try to mount it within a KVM-based Windows VM on the same box. Windows does not see a 28TB filesystem, but a 1.8TB disk containing a few randomly sized, unusable partitions.

I presume this is because Windows is trying to read the first sector of the real NTFS filesystem (its boot sector) as a partition table.
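To check that presumption, something like the following could be used. It is only a sketch: it decodes the first 512 bytes of the volume both ways, as an NTFS boot sector (OEM ID `NTFS    ` at offset 3) and as an MBR (four 16-byte entries at offset 446, `55 AA` signature at offset 510). The function names are my own, and reading the device needs root.

```python
import struct

SECTOR_SIZE = 512


def read_first_sector(path: str) -> bytes:
    """Read sector 0 of a block device or image file (read-only)."""
    with open(path, "rb") as dev:
        return dev.read(SECTOR_SIZE)


def inspect_first_sector(sector: bytes) -> dict:
    """Decode sector 0 as an NTFS boot sector and as an MBR at the same time."""
    if len(sector) < SECTOR_SIZE:
        raise ValueError("need at least 512 bytes")
    info = {
        # NTFS boot sectors carry the OEM ID "NTFS" plus four spaces at offset 3.
        "ntfs_oem_id": sector[3:11] == b"NTFS    ",
        # NTFS boot sectors and MBRs both end in the bytes 55 AA.
        "boot_signature": sector[510:512] == b"\x55\xaa",
        "mbr_entries": [],
    }
    # An MBR reader treats bytes 446..509 as four 16-byte partition entries.
    for i in range(4):
        entry = sector[446 + 16 * i : 462 + 16 * i]
        part_type = entry[4]
        # Little-endian 32-bit start LBA and sector count at entry offsets 8 and 12.
        start_lba, num_sectors = struct.unpack_from("<II", entry, 8)
        info["mbr_entries"].append((part_type, start_lba, num_sectors))
    return info
```

Calling `inspect_first_sector(read_first_sector("/dev/mapper/chandos--dh-data"))` should then show whether the sector carries both the NTFS OEM ID and the boot signature, and which (meaningless) partition entries an MBR parser would pull out of the boot code.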

I can see a few possible solutions to this problem, but can't work out how to actually execute any of them:

- Have Windows read an unpartitioned disk (single volume) as a filesystem?

- Somehow generate a partition table on this logical volume without destroying the data held within the filesystem itself?

- Somehow fake a partition table that points at the LVM volume, and export that to the KVM guest (running under libvirt)?
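For the last option, my rough idea is a device-mapper `linear` target that concatenates a small header device, the existing volume, and a small footer device, so a GPT could live entirely outside the NTFS data. The sketch below only generates the table text. `/dev/loop0`, `/dev/loop1`, the 2048-sector padding and the sector count are all hypothetical placeholders, not values from my system, and I haven't tested any of this.

```python
def linear_concat_table(segments):
    """Build device-mapper 'linear' table lines that concatenate devices.

    segments: list of (device_path, length_in_sectors), in the order the
    devices should appear on the combined virtual disk.
    """
    lines = []
    start = 0
    for device, length in segments:
        if length <= 0:
            raise ValueError(f"segment {device} must have a positive length")
        # Line format: <logical start> <length> linear <device> <offset in device>
        lines.append(f"{start} {length} linear {device} 0")
        start += length
    return lines


# Hypothetical values, all in 512-byte sectors.
HEADER_SECTORS = 2048        # 1 MiB: protective MBR + primary GPT need 34 sectors
FOOTER_SECTORS = 2048        # backup GPT needs 33 sectors
LV_SECTORS = 93_750_000_000  # placeholder; real value from `blockdev --getsz`

table = linear_concat_table([
    ("/dev/loop0", HEADER_SECTORS),                # hypothetical loop device
    ("/dev/mapper/chandos--dh-data", LV_SECTORS),  # the existing NTFS volume
    ("/dev/loop1", FOOTER_SECTORS),                # hypothetical loop device
])
print("\n".join(table))
```

As far as I understand, that output could be piped into `dmsetup create <name>`, and a GPT with a single partition starting at sector 2048 and spanning `LV_SECTORS` sectors would then line up exactly with the existing filesystem. The combined device would be what gets passed to the guest. What I can't work out is whether this is sane in practice.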

The current "partition table" as reported by parted is:

    Model: Linux device-mapper (linear) (dm)
    Disk /dev/mapper/chandos--dh-data: 48.0TB
    Sector size (logical/physical): 512B/512B
    Partition Table: loop

    Number  Start  End     Size    File system  Flags
     1      0.00B  48.0TB  48.0TB  ntfs