Switches mainly have two forwarding strategies available to them:

- receive the frame completely into the buffer, evaluate the destination address, then send the frame from the buffer to the destination

- receive the frame's header into the buffer, evaluate the destination address and make a forwarding decision, then start sending the frame to the destination while the rest of it is still coming in

The first is generally referred to as store-and-forward, the second as cut-through.
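To make the difference concrete, here is a minimal timing sketch of the two strategies. It assumes one ingress and one egress port at the same line rate, empty queues and a constant forwarding-decision time. The function names and the `decision_ns` parameter are hypothetical, and the 14-byte default for cut-through is the figure used further down in this answer.

```python
def serialization_ns(num_bytes: int, rate_bps: int) -> float:
    """Time in ns to receive num_bytes at rate_bps bits per second."""
    return num_bytes * 8 * 10**9 / rate_bps


def egress_start_ns(strategy: str, frame_bytes: int, rate_bps: int,
                    decision_ns: float, needed_bytes: int = 14) -> float:
    """Earliest time (ns after the first ingress byte) the first egress byte can leave.

    Hypothetical model: one ingress and one egress port at the same rate,
    empty queues and a constant forwarding-decision time (decision_ns).
    """
    if strategy == "store-and-forward":
        # The whole frame must be in the buffer before the decision is made.
        buffered = frame_bytes
    elif strategy == "cut-through":
        # Only the bytes up to the destination address must be in the buffer.
        buffered = min(needed_bytes, frame_bytes)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return serialization_ns(buffered, rate_bps) + decision_ns


if __name__ == "__main__":
    for frame in (64, 1518):
        for strategy in ("store-and-forward", "cut-through"):
            t = egress_start_ns(strategy, frame, 10**9, decision_ns=100)
            print(f"{frame:5d} bytes, {strategy:17s}: {t:8.1f} ns")
```

In this model the store-and-forward delay grows with frame size (612 ns for 64 bytes versus 12244 ns for 1518 bytes at 1 Gbit/s with a 100 ns decision time), while the cut-through delay stays at 212 ns regardless of frame size.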

As you have already noted, there are many possible definitions of "latency" in each of these scenarios, but two are commonly used and have even found their way into RFC 1242 (section 3.8):

- First-in-first-out (FIFO) latency, or the time between the reception and the sending of the first byte of a particular frame (the RFC's definition for bit-forwarding devices)

- Last-in-first-out (LIFO) latency, or the time between the reception of the last byte of a frame and the sending of its first byte (the RFC's definition for store-and-forward devices)
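The two definitions differ only in which ingress timestamp is subtracted. A minimal sketch, assuming a tester records three per-frame timestamps in nanoseconds; the class and field names are hypothetical and not taken from any test tool:

```python
from dataclasses import dataclass


@dataclass
class FrameTimestamps:
    """Hypothetical per-frame timestamps in ns, measured at the switch ports."""
    first_in: float   # first byte of the frame received on the ingress port
    last_in: float    # last byte of the frame received on the ingress port
    first_out: float  # first byte of the frame sent on the egress port


def fifo_latency(ts: FrameTimestamps) -> float:
    """First-in-first-out: first byte received -> first byte sent."""
    return ts.first_out - ts.first_in


def lifo_latency(ts: FrameTimestamps) -> float:
    """Last-in-first-out: last byte received -> first byte sent."""
    return ts.first_out - ts.last_in


# Illustrative numbers for a 1518-byte frame at 1 Gbit/s (about 12144 ns on the wire)
cut_through = FrameTimestamps(first_in=0, last_in=12144, first_out=300)
store_and_forward = FrameTimestamps(first_in=0, last_in=12144, first_out=12444)

print(fifo_latency(cut_through), lifo_latency(cut_through))              # 300 -11844
print(fifo_latency(store_and_forward), lifo_latency(store_and_forward))  # 12444 300
```

For a cut-through switch the LIFO value turns negative as soon as the frame takes longer to arrive than the switch needs to start sending it, which makes FIFO the more natural figure to quote for cut-through devices.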

There is also the last-out-last-received method of measuring end-to-end latency, implicitly defined in RFC 2544 section 26.2, but it is unlikely to appear in vendors' data sheets.

A 2012 whitepaper from Juniper titled "Latency: Not All Numbers Are Measured The Same" (now only available from third parties, as it has since been removed from Juniper's site) and a number of other sources suggest that cut-through latency is in fact first-in-first-out latency.

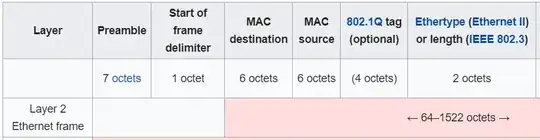

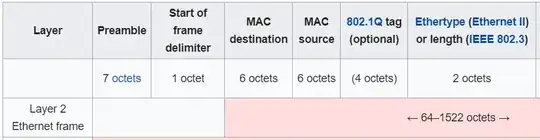

Let's run the numbers. To make a forwarding decision, the switch has to receive at least the destination MAC address of the Ethernet frame. Given the layout of the Ethernet header, this means receiving at least the first 14 bytes (112 bits) of the frame.

At a rate of 10^9 bits per second, this takes 112 ns, leaving 188 ns for the forwarding decision within a 300 ns latency figure.
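The same arithmetic can be parameterized to sanity-check other quoted figures. A small sketch, assuming (as above) that the data-sheet number is first-in-first-out latency and that 14 bytes must arrive before the decision can be made; `decision_budget_ns` is a hypothetical helper:

```python
def decision_budget_ns(latency_ns: float, rate_bps: int, needed_bytes: int = 14) -> float:
    """Time left for the forwarding decision within a FIFO latency figure.

    Assumes the switch cannot decide before needed_bytes have been received.
    A negative result means the figure cannot be FIFO latency under this model.
    """
    receive_ns = needed_bytes * 8 * 10**9 / rate_bps
    return latency_ns - receive_ns


print(decision_budget_ns(300, 10**9))              # 188.0
print(round(decision_budget_ns(300, 10**10), 1))   # 288.8
```

At 10 Gbit/s the 14 bytes arrive in 11.2 ns, so header reception takes a much smaller share of the same 300 ns figure.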

So, for the Gigabit interface of your FM4224, the figure looks sane assuming first-in-first-out latency measurement. But Intel could obviously have chosen its own definition for its numbers; you would need to ask a sufficiently savvy representative for a definitive statement.