I've been thinking about building a website which could potentially get a lot of traffic. It would be a research site of sorts with wiki capabilities. So users would be able to edit information on the site. However, 99% of users would probably never edit anything and would simply be searching / browsing the site. So they could theoretically do most things from a readonly copy of the database.

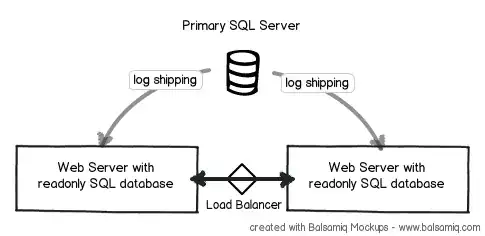

With that in mind, I was thinking that putting together something like this would work well...

So there would be 3 physical servers in the same datacenter:

- The "Primary SQL Server" would receive any UPDATE, INSERT, or DELETE operations.

- The two web servers would have readonly copies of this database, which would be kept up to date with log shipping. These servers would be queried heavily as users browse the site and would also serve up ASP.NET pages through IIS.

I like that this model improves both scalability and reliability at the same time. If either web server goes down there'd be no interruption of service. If the Primary SQL Server goes down, certain functions of the site would be disabled but the site would still be up in a readonly state, so to speak. Then last of all, the load balancer would distribute load between the two web servers, which is nice.

But I have a couple questions about this:

- Is log shipping a good method to accomplish this?

- What is the minimum version of SQL Server required to do this? For example, would it be possible to run SQL Server Web edition on the Primary SQL Server and SQL Express on the web servers?

Thanks for your help.