I recorded the complete installation up to the point of failure, in case you have a question about a step that I have not explained below: http://www.youtube.com/watch?v=BVe5vja3keo

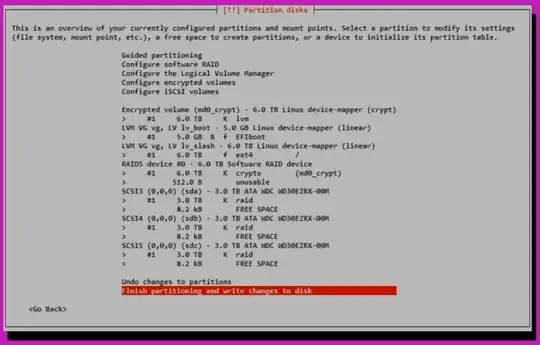

During partitioning I created a software RAID 5 volume spanning three identical disks. On that volume I created an encrypted volume, and inside the encrypted volume I set up LVM with a single volume group containing two logical volumes: one for `/boot` and one for `/` (the rest).
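For readers who think in commands rather than installer screens, the layering described above (RAID 5, then encryption, then LVM) corresponds roughly to the sketch below. It is only an illustration: the layout was actually created in the installer's partitioner, LUKS is assumed as the encryption format, and every device name, mapping name, volume group name, and the `/boot` size are placeholders, not values from the installation. The script is a dry run that prints the commands instead of executing them.

```shell
#!/bin/sh
# Dry run: prints the commands for the layout instead of executing them.
# All names and sizes below are illustrative assumptions.
DISKS="/dev/sda1 /dev/sdb1 /dev/sdc1"   # one partition per disk (assumed)
MD=/dev/md0                             # RAID 5 array (assumed name)
CRYPT=cryptroot                         # encrypted mapping name (assumed)
VG=vg0                                  # volume group name (assumed)

plan_layout() {
    # 1. Software RAID 5 across the three disks
    echo "mdadm --create $MD --level=5 --raid-devices=3 $DISKS"
    # 2. Encrypted volume on top of the array (LUKS assumed)
    echo "cryptsetup luksFormat $MD"
    echo "cryptsetup open $MD $CRYPT"
    # 3. LVM inside the encrypted volume: one volume group, two logical volumes
    echo "pvcreate /dev/mapper/$CRYPT"
    echo "vgcreate $VG /dev/mapper/$CRYPT"
    echo "lvcreate -L 512M -n boot $VG"     # /boot; size is an assumption
    echo "lvcreate -l 100%FREE -n root $VG" # / takes the rest
}

plan_layout
```

The point of the sketch is the ordering: `/boot` sits at the bottom of three layers, so the boot loader has to read through RAID 5, the encryption, and LVM before it can reach the kernel.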

When it is time to install GRUB to the MBR, I get the error `Executing grub-install /dev/sda failed. This is a fatal error.`

After that I completed the installation without installing a boot loader.

I would greatly appreciate it if someone could help me out!

- I do want redundancy for `/boot`, so placing it outside the RAID 5 volume is not an option.
- I have tried placing a `/boot` partition directly inside the RAID 5 volume, and that doesn't work automatically either.
- If possible, I'd like `/boot` inside the LVM; if that is not possible, having it inside the RAID volume would be sufficient.
- I know that software RAID is suboptimal for performance and that hardware RAID is preferred. However, my budget does not allow for one, and redundancy and encryption are my primary concerns.