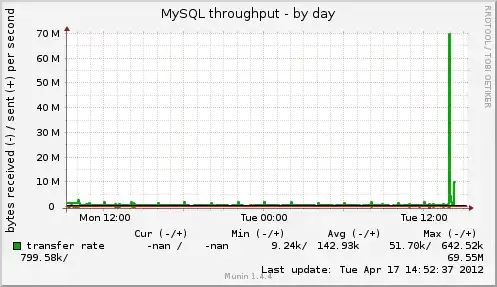

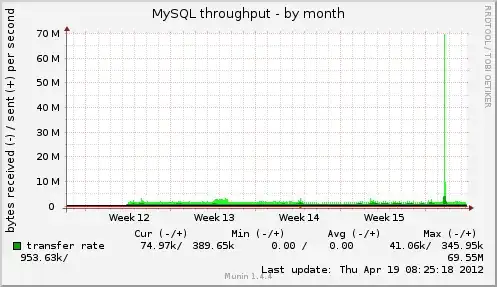

Recently one of our servers ran out of memory and crashed. After reviewing the Munin graphs, it appears that the only metric (other than memory usage) that peaked just before the crash was MySQL throughput. However, we expected to see a corresponding increase in the number of MySQL queries, and the graphs show no such increase.

The throughput graph also shows that MySQL throughput reached an abnormally high value, far above anything recorded before.

We're completely in the dark as to how we should proceed, hence the question below:

How can we perform a "postmortem" investigation of a MySQL throughput increase?