Lately we have been having a lot of problems with our mysql server, from websites being really slow or even unable to load them at all. The server is a dedicated server that only runs our mysql database. i have been running some test using a profiler (JetProfiler) and tool to stress test (loadUI).

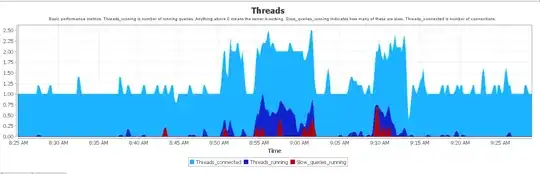

If I use loadUI to connect with 50 simultaneous connections to one of our websites that runs a resently big query it will already make the website be unable to load. One of the things that makes me worried is that when I look at Jetprofile it always shows a Treads_connected of 1.00 and it seems that when it hits around 2.00 that I'm unable to connect.

The 3 big peaks are when I run a test with loadUI, first one was 15 simultaneous connections wich made it still able for me to load the website but just really slow, the second one was 40 simultaneous connections which already made it impossible to load and the third one was with 100 connection which also didn't make it load anymore.

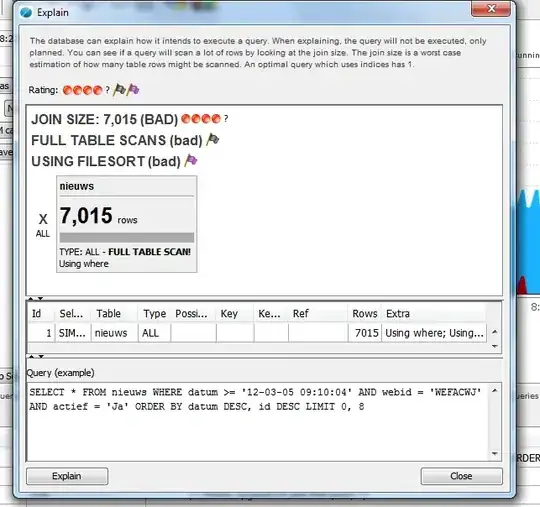

Another thing that worries me is that in JetProfiler it says all the queries that get used are full table scans, could this maybe be the problem? The website I run as a test runs 3 queries, one for a menu that outputs around 1000 rows, one for the adds that has around 560 rows and a big one to get posts that has around 7000 rows (see screenshot bellow)

I also have monitored the cpu of the server and there seems to be no problem there, even when I make a lot of connections with loadui the cpu stays low.

I can't seem to figure out what is the main cause of the websites being unable to load when there is a high amount of traffic, if anyone has other suggestions for testing or something that might cause the problem please let me know.