We are running TeamCity on Windows Server 2008 as a build server. The build server is a guest hosted on VMware ESXi 5. (I have very little VMware experience, so my terminology might be wrong.)

When we start a build, we more often than not experience extremely poor performance. The build server guest has been assigned 4 CPUs with no upper limit and no other guest systems are very busy.

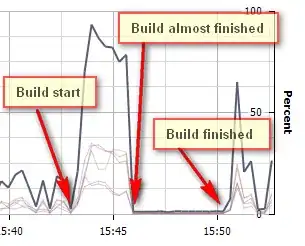

What we have observed using vSphere Client is that after a while the guest's CPU usage drops from about 4600 MHz to about 50 MHz. When the build stops, the CPU usage goes back to its normal semi-idle level.

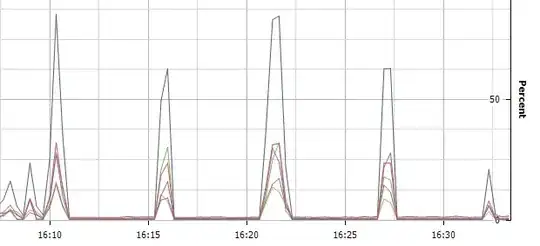

Another interesting observation is that while the build server is running at about 50 MHz, it gets a burst of CPU every six minutes (see graph).

Yet another observation is that the guest's system clock loses time in proportion to the missing CPU cycles (it runs slow by a factor of about 100 during the low-CPU periods).
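As a rough sanity check on that factor, here is the arithmetic using the approximate figures read off the vSphere graph (4600 MHz and 50 MHz). The function name is made up for illustration and the inputs are only eyeballed values, so treat the result as an order-of-magnitude estimate:

```python
def slowdown_factor(normal_mhz: float, throttled_mhz: float) -> float:
    """Ratio between the normal and the throttled CPU rate (hypothetical helper)."""
    return normal_mhz / throttled_mhz


# Approximate values read from the vSphere Client graph (assumed, not exact).
factor = slowdown_factor(4600, 50)
print(f"CPU slowdown factor: {factor:.0f}x")  # prints "CPU slowdown factor: 92x"
```

A ratio of roughly 92 matches the clock drift of about a factor of 100 that we see in the guest.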

EDIT: Added chart with host specs.