There are many ways to solve this, but the best solution depends on your limitations, environment, types of sites, etc. For example:

- Is a single server not able to handle all sites due to the size of the content on each site, or because of the load/traffic generated?

- Is the content stored as files, or in a database?

- Are the sites running an application that requires shared state? Is the shared state kept in memory or on another server or cluster of servers?

- Does each site require its own deployment of the application, or does the application support multi-tenancy? (Basically, can it look at what hostname was requested and use that to pick the site? This is how most multi-client hosted CMS/e-commerce applications work.)

- Can a single site grow so big that it can't be handled by a single server?
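To make the multi-tenancy question concrete, here is a minimal sketch of what "look at the hostname and use that as the site" can mean. The `TENANTS` table, the hostnames, and `resolve_tenant` are all made up for illustration; a real application would more likely keep this mapping in its database, and this sketch does not handle bracketed IPv6 hosts.

```python
# Hypothetical tenant table: hostname -> tenant ID.
# In a real app this mapping would probably live in the database.
TENANTS = {
    "shop.example.com": "tenant_shop",
    "blog.example.org": "tenant_blog",
}


def resolve_tenant(host_header):
    """Map the Host header of a request to a tenant ID, or None if unknown."""
    # Drop an optional port ("shop.example.com:8080") and normalise case.
    hostname = host_header.split(":", 1)[0].strip().lower()
    # Treat "www.shop.example.com" the same as "shop.example.com".
    if hostname.startswith("www."):
        hostname = hostname[len("www."):]
    return TENANTS.get(hostname)
```

Every request goes through something like `resolve_tenant(request_host)` first, and the resulting tenant ID is then used to scope all database queries for that request.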

I will assume you have a "typical" web application, with the application hosted in a web server, session state stored locally in memory (the default for many platforms), and a database. I will also assume that content is stored in the database, and that your application supports multi-tenancy.

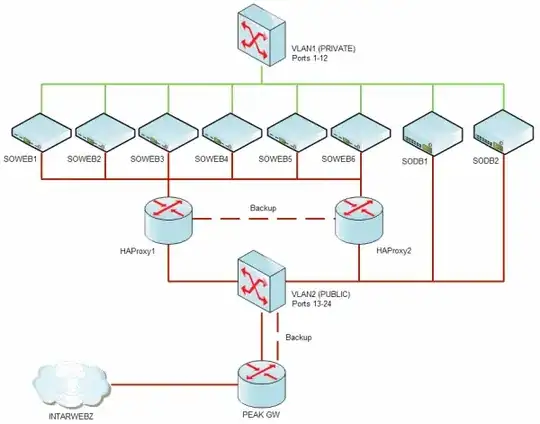

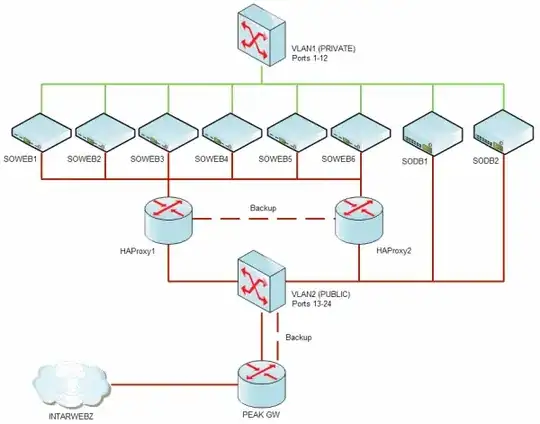

The "typical" way to set this up would be to have multiple tiers:

- Database tier (very beefy fail-over pair, master/slave systems, or cluster, depending on your DB platform)

- Session state tier (may be stored in the DB tier, a separate DB, IIS session state server, memcached, or something similar)

- Application tier (your app server -- IIS)

- Load balancer tier (dedicated hardware, nginx, HAProxy, etc.)

There are several key points to this:

- Any application server is able to handle a request for any "site" (because it's all the same application, and they all connect to the same database)

- Your session state must be shared and available to all app servers

- Your database tier must be able to handle the load of simultaneous access from all app servers. Most people employ lots of caching at various points in order to make this work.

- If you are aiming for high availability, you need to have N+1 capacity: e.g., if your peak load requires 10 app servers, then you should have at least 11.

- Many times in a big site, people will offload their static content (images, CSS, etc.) to another server or even a CDN like Akamai or Amazon CloudFront.
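The N+1 rule from the list above is simple arithmetic, but it is easy to get wrong when the peak does not divide evenly. A rough sketch, where `servers_needed` and the per-server throughput figures are assumptions for illustration (you would measure the real numbers with load testing):

```python
import math


def servers_needed(peak_rps, rps_per_server, spares=1):
    """Servers required to carry peak load, plus spare capacity (N + spares)."""
    if rps_per_server <= 0:
        raise ValueError("rps_per_server must be positive")
    # Round up: 9.5 servers' worth of load still needs 10 machines.
    base = math.ceil(peak_rps / rps_per_server)
    return base + spares
```

With a peak of 1000 requests/second and servers that each handle 100, `servers_needed(1000, 100)` gives 11, matching the "10 app servers, so have at least 11" example.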

The setup is pretty flexible. You can add a LOT of app servers. You can independently change the setup of any tier. You have no single point of failure (e.g., you can tolerate losing a server or two without anyone noticing).

Even if your app doesn't support multi-tenancy, you have a couple of options:

- Deploy every site to every server (note: this requires that you have a way of also keeping all files synchronized for installs/updates, and that you don't store any content locally)

- Deploy every site to every server, but store the content on a centralized (and redundant) NAS or SAN

- Deploy sites selectively, and then use your load balancer to direct traffic to the appropriate server based on the hostname.
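For the last option, the load balancer needs a hostname-to-servers map. In practice you would configure this in nginx, HAProxy, or your hardware balancer rather than write it yourself, but the logic looks roughly like this. `HostRouter`, the hostnames, and the backend addresses are all hypothetical, and round-robin is just one possible balancing policy.

```python
import itertools


class HostRouter:
    """Pick a backend for a request based on its Host header."""

    def __init__(self, pools):
        # pools: dict of hostname -> list of backend addresses that
        # actually have that site deployed. Empty pools are skipped.
        self._cycles = {
            host.lower(): itertools.cycle(backends)
            for host, backends in pools.items()
            if backends
        }

    def pick_backend(self, host_header):
        # Drop an optional port and normalise case before the lookup.
        hostname = host_header.split(":", 1)[0].strip().lower()
        cycle = self._cycles.get(hostname)
        if cycle is None:
            return None  # No server hosts this site.
        # Round-robin across the servers that host this site.
        return next(cycle)
```

The trade-off compared to "every site on every server" is that each site is now limited to the capacity (and redundancy) of its own pool, so a busy site needs at least two servers in its pool to survive a failure.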

Take a look at StackExchange's setup, which is actually quite close to what I just described: