I've got a server running on a Linode with Ubuntu 10.04 LTS, Nginx 0.7.65, MySQL 5.1.41 and PHP 5.3.2 with PHP-FPM.

There is a WordPress blog on it, updated to WordPress 3.2.1 recently.

I have made no changes to the server (except updating WordPress) and while it was running fine, a couple of days ago I started having downtimes.

I tried to solve the problem, and checking the error_log I saw many timeouts and messages that seemed to be related to timeouts. The server is currently logging this kind of errors:

2011/07/14 10:37:35 [warn] 2539#0: *104 an upstream response is buffered to a temporary file /var/lib/nginx/fastcgi/2/00/0000000002 while reading upstream, client: 217.12.16.51, server: www.example.com, request: "GET /page/2/ HTTP/1.0", upstream: "fastcgi://127.0.0.1:9000", host: "www.example.com", referrer: "http://www.example.com/"

2011/07/14 10:40:24 [error] 2539#0: *231 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 46.24.245.181, server: www.example.com, request: "GET / HTTP/1.1", upstream: "fastcgi://127.0.0.1:9000", host: "www.example.com", referrer: "http://www.google.es/search?sourceid=chrome&ie=UTF-8&q=example"

and even saw this previous serverfault discussion with a possible solution: to edit /etc/php/etc/php-fpm.conf and change

request_terminate_timeout=30s

instead of

;request_terminate_timeout= 0

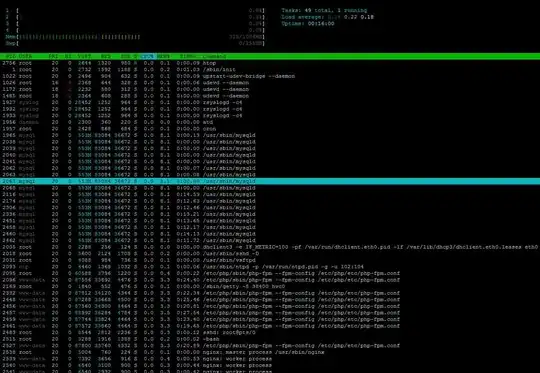

The server worked for some hours, and then broke again. I edited the file again to leave it as it was, and restarted again php-fpm (service php-fpm restart) but no luck: the server worked for a few minutes and back to the problem over and over. The strange thing is, although the services are running, htop shows there is no CPU load (see image) and I really don't know how to solve the problem.

The config files are on pastebin

The /etc/nginx/nginx.conf is here

The /etc/nginx/sites-available/www.example.com is here