I'm not sure which consumer board to choose for the following configuration.

I'm planning to build one or more "Beowulf-like" clusters (starting with one for testing). Each cluster consists of four boxes (commodity Socket-1156 + i7/875K + 2x2GB 1333) in a tetrahedral Gbit-LAN topology (direct back-to-back X-link connections).

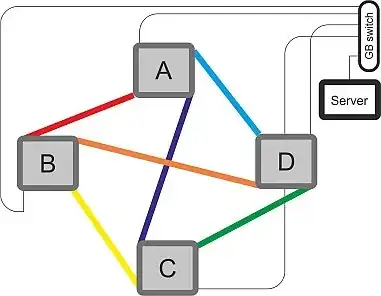

In the image above, each box (named A, B, C or D) has four Gbit NICs: one pointing to the upstream Gbit switch (thin line) and three for connections to the remaining boxes (each color denotes one subnet between two NICs).
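To make the wiring concrete, here is a minimal sketch of the six back-to-back links with one small subnet per link. The `10.0.0.0/24` base range, the `/30` size and the function name `plan_links` are only my assumptions for illustration, not the addressing actually in use:

```python
from itertools import combinations
import ipaddress

BOXES = ["A", "B", "C", "D"]

def plan_links(boxes, base="10.0.0.0/24"):
    """Assign one /30 subnet to every pair of boxes (hypothetical addressing)."""
    subnets = ipaddress.ip_network(base).subnets(new_prefix=30)
    plan = []
    for (left, right), net in zip(combinations(boxes, 2), subnets):
        # A /30 network has exactly two usable host addresses, one per NIC.
        left_ip, right_ip = net.hosts()
        plan.append((left, right, str(net), str(left_ip), str(right_ip)))
    return plan

if __name__ == "__main__":
    for left, right, net, left_ip, right_ip in plan_links(BOXES):
        print(f"{left} <-> {right}  subnet {net}  {left}={left_ip}  {right}={right_ip}")
```

Four boxes give six pairs, so each box ends up with three direct links, plus the fourth NIC towards the switch.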

This is meant to be a reliable €2.5K (probably cheaper) "32-node" compute server running 64-bit Linux and OpenMPI. The server starts numerical simulations on the nodes through OpenMPI, and the nodes communicate through their back-to-back connections.

The problem: I have already tested a similar setup on a "trigonal" cluster (three nodes, each with two additional PCIe NICs plus the onboard Gbit NIC). It worked successfully on one board type (Gigabyte P55A-UD3R).

Another board I tested (Gigabyte P55A-UD4) failed reproducibly after a few minutes under full network load (but not when running in single-node mode).

For the above setup, I'd like to use a board that is able to bear the brunt of four simultaneous Gbit links. From my trigonal setup I know that each NIC transfers about 50-80 MB/s at any given time (measured with iftop).
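As a rough back-of-the-envelope check, the per-NIC figures can be scaled to four links. This assumes the fourth link carries a load similar to the ones measured in the trigonal setup, which is untested, and it does not model any shared chipset uplink:

```python
# Theoretical line rate of one Gbit link in one direction: 1000 Mbit/s / 8 = 125 MB/s.
GBIT_LINE_RATE_MB_S = 1000 / 8

def aggregate_load(per_nic_mb_s, nics=4):
    """Total one-direction throughput if every NIC carries the same load."""
    return per_nic_mb_s * nics

low = aggregate_load(50)                       # lower end seen in iftop
high = aggregate_load(80)                      # upper end seen in iftop
ceiling = aggregate_load(GBIT_LINE_RATE_MB_S)  # all four links at line rate

print(f"expected aggregate: {low}-{high} MB/s, theoretical ceiling: {ceiling} MB/s")
```

So the board would have to move roughly 200-320 MB/s of network traffic per direction, with 500 MB/s as the upper bound if all four links ran at line rate.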

- Would the tetrahedral topology (as shown above) be possible at all?

- Should I choose boards with two onboard Gbit NICs (expensive)?

- Can PCIe on consumer boards sustain 4 simultaneous Gbit lines?

- Is a bunch of cheap (passive) PCIe-NICs ok?

- Has anybody done something similar and can give recommendations?

Thanks & Regards

rbo