Let's say a server is connected to the internet through a limited-bandwidth link, and more than one user tries to download a file from that server simultaneously.

If we ignore any bandwidth limitation on the user side, how is the server-side bandwidth allocated among the users? If 2 concurrent users download the same file, is the bandwidth divided evenly, so that each user gets half of it?

I've tried the following setup:

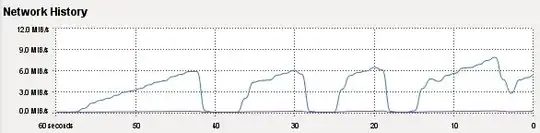

I connected 2 client PCs running Windows XP to a switch. The switch is connected to a server PC through a link with a fixed bandwidth of 2 Mbps. Then I ran iperf on all 3 PCs at the same time: the client PCs ran iperf in client mode, and the server PC ran iperf in server mode.

Both client PCs sent data to the server PC at the same time.

I found that the server PC received ~500 kbps from client PC1 and ~1450 kbps from client PC2.
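To put numbers on the imbalance, here is a quick sketch of the arithmetic in Python. It assumes the 2 Mbps link is exactly 2000 kbps and uses the approximate iperf readings above; the function name `share_report` is just something I made up for illustration.

```python
# Approximate iperf readings from the test above, in kbps.
measured_kbps = {"PC1": 500, "PC2": 1450}

# Assumption: the 2 Mbps link is treated as exactly 2000 kbps.
link_kbps = 2000


def share_report(rates, link):
    """Compare each client's measured rate with an even split of the link."""
    total = sum(rates.values())   # combined throughput seen by the server
    even = link / len(rates)      # what each client would get if split evenly
    report = {}
    for name, rate in rates.items():
        report[name] = {
            "share_of_total": rate / total,      # fraction of the combined throughput
            "ratio_to_even_split": rate / even,  # 1.0 would mean an exactly even share
        }
    return total, even, report


total, even, report = share_report(measured_kbps, link_kbps)
print(f"combined: {total} kbps, even split: {even:.0f} kbps per client")
for name, stats in report.items():
    print(f"{name}: {stats['share_of_total']:.1%} of combined, "
          f"{stats['ratio_to_even_split']:.2f}x the even share")
```

So the two streams together use about 1950 kbps, which is close to the full link, but PC1 gets roughly a quarter of it and PC2 roughly three quarters, instead of about 1000 kbps each.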

Both client PCs are connected to the switch with 1 Gbps Ethernet connections. Both use the same type of cable and the same OS, and the iperf settings are also the same.

I don't understand why there is such a big difference between the bandwidth allocated to client PC1 and client PC2. How is bandwidth allocated to concurrent users who access the server at the same time?

Thanks.