I have installed a Kubernetes cluster with a single node, which acts as both the master and the worker node. Now I have run into a problem:

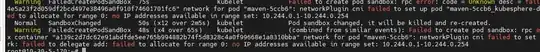

When the number of pods exceeds 255, Kubernetes fails to deploy new ones. Checking the node shows that there are no available IP addresses:

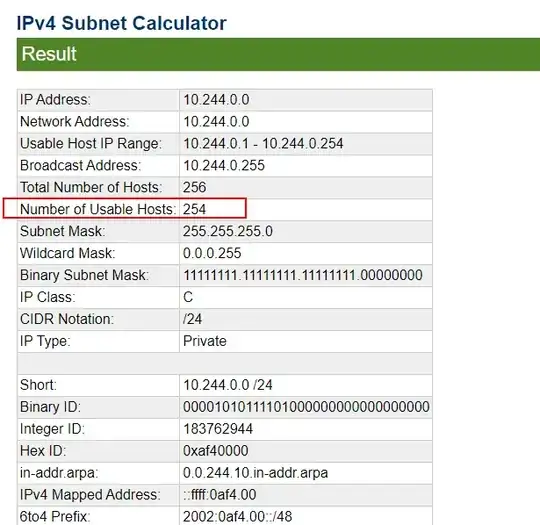

After checking the configuration, I found that this is caused by the node's podCIDR, which is set to 10.244.0.0/24.

A /24 subnet provides only 254 usable addresses, which causes the problem.
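To double-check the arithmetic, here is a minimal sketch using Python's standard `ipaddress` module. The helper name `usable_hosts` is my own, and the /23 range is only a hypothetical comparison, not something configured on my cluster. It also assumes the usual IPv4 convention of reserving the network and broadcast addresses; the number of IPs a CNI plugin actually hands out to pods may differ slightly.

```python
import ipaddress


def usable_hosts(cidr: str) -> int:
    """Count usable host addresses in an IPv4 CIDR block.

    Assumes a prefix shorter than /31, where the network and
    broadcast addresses are reserved and cannot be assigned.
    """
    network = ipaddress.ip_network(cidr)
    return network.num_addresses - 2


# The podCIDR currently assigned to my node.
print(usable_hosts("10.244.0.0/24"))

# Hypothetical wider range, for comparison only.
print(usable_hosts("10.244.0.0/23"))
```

So each extra bit in the host part of the range roughly doubles the number of pod IPs available on the node.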

I searched a lot, and every answer says I need to delete the old node and use kubeadm init to create a new one. But I only have one node, so if I delete it, my data will be lost as well.

Is there a way to update the existing node's podCIDR without recreating the node? Thanks in advance!