As far as I am aware, there is no real alternative to tools that scan the complete file system when you need to find the current disk hogs. Directory metadata simply doesn't hold enough information about a directory's children and grandchildren, so every sub-directory and file has to be scanned individually to build a disk usage tally.

du avoidance strategies

Check open file descriptors

A common first assumption is that a single large file being written is the culprit. In that case you can restrict your search to files that are currently open and only check the size of those.

For a detailed approach using lsof see: https://unix.stackexchange.com/q/382693/48232

Something similar would be du -sL /proc/*/fd/* |sort -n and then following the most interesting file descriptor links:

du -sL /proc/*/fd/* |sort -n

...

6344 /proc/873/fd/11

6344 /proc/873/fd/12

6344 /proc/873/fd/13

20140 /proc/359/fd/62

2028890 /proc/876/fd/6

ls -l /proc/876/fd/6

lrwx------. 1 root root 64 Sep 8 10:09 /proc/876/fd/6 -> /var/log/httpd/example.net_access_log
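The two steps above (size every open descriptor, then resolve the interesting links) can be combined. This is a minimal sketch, assuming Linux with /proc mounted and a stat that supports -L and -c (GNU coreutils or busybox); largest_open_files is a hypothetical helper name of mine, not an existing tool. Run it as root to see other users' processes.

```shell
#!/bin/sh
# Sketch: list the largest regular files currently held open by any process.
# largest_open_files is a hypothetical helper name, not part of any package.
largest_open_files() {
    count="${1:-10}"
    for fd in /proc/[0-9]*/fd/*; do
        # Follow the descriptor link; this also works for files that were
        # deleted but are still held open. Skip sockets, pipes and devices.
        [ -f "$fd" ] || continue
        size=$(stat -L -c %s "$fd" 2>/dev/null) || continue
        target=$(readlink "$fd" 2>/dev/null) || continue
        printf '%s\t%s\t%s\n' "$size" "$fd" "$target"
    done | sort -n | tail -n "$count"
}

# Apparent size in bytes, descriptor, target path; largest entries last.
largest_open_files 10
```

A file that is open on several descriptors shows up once per descriptor, just like in the du output above. Note that this reports the apparent size in bytes, whereas du reports allocated blocks, so sparse files can look bigger here.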

iotop

iotop and similar I/O monitoring tools let you identify the process(es) generating the most I/O. Once you have the PID you can run ls -l /proc/[PID]/fd/* and see exactly where the process is writing to. This lets you identify currently active processes that may be writing many smaller files rather than a single large file.
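As a rough sketch of that second step, the following prints size and path for every file a given PID has open. It assumes Linux with /proc and a stat that supports -L and -c; open_files_of is a hypothetical helper name. It lists all open paths, not only those opened for writing.

```shell
#!/bin/sh
# Sketch: show the files one process has open, given its PID.
# open_files_of is a hypothetical helper name.
open_files_of() {
    pid="$1"
    for fd in "/proc/$pid/fd"/*; do
        target=$(readlink "$fd" 2>/dev/null) || continue
        case "$target" in
            /*) ;;          # a path in the file system
            *) continue ;;  # socket:[...], pipe:[...], anon_inode:...
        esac
        size=$(stat -L -c %s "$fd" 2>/dev/null) || size='?'
        printf '%s\t%s\n' "$size" "$target"
    done | sort -u | sort -n
}

# Example: inspect the current shell itself.
open_files_of "$$"
```

Running it twice a few seconds apart and comparing the sizes is a cheap way to see which files are actually growing.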

Quota

Not fashionable anymore, and your mileage may vary, but enabling quotas on file systems ahead of time has several potential advantages.

- set hard- and soft-quota limits and the offending run-away user/process will be halted before completely filling up the file-system and impeding other users/processes

- even with unlimited quota set, disk quota reporting is a quick and easy way to determine which user or group IDs are the disk hogs.
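For the reporting part, a sketch along these lines ranks users by block usage. It assumes the Linux quota tools are installed and quotas are already enabled, and it assumes the usual repquota layout in which each user line has a two-character status flag such as -- or +- in the second column; top_quota_users is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: rank users by block usage from "repquota" output read on stdin.
# top_quota_users is a hypothetical helper name. The column layout
# (name, two-character status flags, blocks used, ...) is an assumption
# about repquota's report format.
top_quota_users() {
    awk '$2 ~ /^[-+][-+]$/ { print $3, $1 }' | sort -rn | head -n "${1:-10}"
}

# Usage (needs root and quotas enabled on the file system):
#   repquota -a | top_quota_users 5
```

Because the quota accounting is maintained by the kernel as files are written, this report does not need to walk the directory tree.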

Partitioning

Although a PITA in other regards, setting users/applications up with their own volume/partition/file system(s), rather than using only a single root file system, allows you to run the quick df, which tells you which file system is filling up and limits the amount of searching you need to do.
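With separate file systems, a first triage can be as small as the sketch below. rank_by_capacity is a hypothetical helper name; it reads POSIX-format df -P output and assumes mount points without spaces.

```shell
#!/bin/sh
# Sketch: rank mounted file systems by how full they are.
# rank_by_capacity is a hypothetical helper; it reads "df -P" output on stdin.
rank_by_capacity() {
    # Skip the header line, then print "capacity mount-point", fullest first.
    # Assumes mount points do not contain spaces.
    awk 'NR > 1 { print $5, $6 }' | sort -rn
}

df -P | rank_by_capacity
```

Only the file system at the top of that list then needs a du scan.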

Quick(er) disk usage insights

Rather than running du --max-depth=2 <some-filesystem> | sort -n or similar (which, because sort has to read all of its input before it can print anything, only shows results after the complete file system has been scanned) I typically run

du --max-depth=2 <some-filesystem> | tee /tmp/du.txt

in one window and in another:

watch -n 1 "sort -nr < /tmp/du.txt"

which has the advantage that you get to see some immediate progress.
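If you only have one terminal, the same idea works with du in the background and an occasional snapshot of the partial results. A small sketch: snapshot_du is a hypothetical helper name, the paths are examples, and the -x flag (stay on one file system) is my addition rather than part of the commands above.

```shell
#!/bin/sh
# Sketch: print the N largest entries collected so far in a du output file
# that may still be growing. snapshot_du is a hypothetical helper name.
snapshot_du() {
    sort -nr "$1" | head -n "${2:-20}"
}

# Usage (paths are examples):
#   du -x --max-depth=2 /var > /tmp/du.txt 2>/dev/null &
#   snapshot_du /tmp/du.txt 10
```

Keep in mind that du prints a directory only after it has finished all of its children, so early snapshots are dominated by small, deep directories.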

ncdu

For me the du command is often useful enough. It is always installed and more expedient than, for example, the venerable ncdu, which first needs to build its disk usage cache before presenting you with the admittedly nice and useful navigation TUI that allows you to drill down to the largest directories.

duc

Debian and Ubuntu offer duc as an optional package. It still requires an initial full scan of a directory tree / file system, but it persists the resulting disk usage cache. duc can also update that existing cache, which should be quicker on subsequent runs.

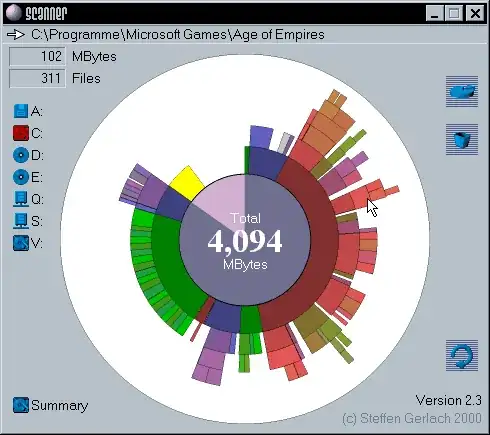

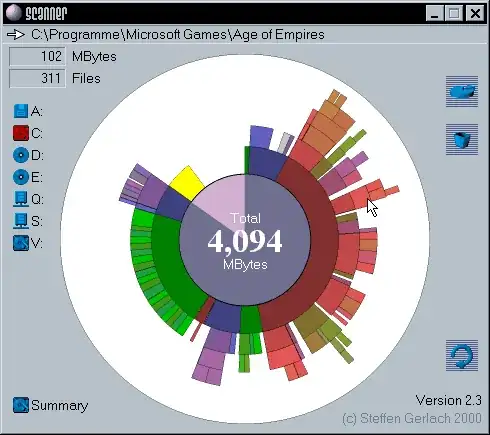

The TUI is similar to ncdu, but the full GUI offers some really nice usage diagrams. Demo: https://duc.zevv.nl/demo.cgi?x=338&y=181&path=/
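A typical duc session might look like the sketch below. It assumes duc is installed and uses its default database location; duc_report is a hypothetical wrapper name and /var is only an example path.

```shell
#!/bin/sh
# Sketch: build or refresh a duc index, then list the top level of the tree.
# duc_report is a hypothetical wrapper name; the default path is an example.
duc_report() {
    dir="${1:-/var}"
    if ! command -v duc >/dev/null 2>&1; then
        echo "duc is not installed" >&2
        return 127
    fi
    # Scans the tree and stores the result in duc's database.
    duc index "$dir" || return
    # Lists the indexed entries with a size bar next to each one.
    duc ls -Fg "$dir"
}

# Usage:
#   duc_report /var
# For interactive browsing of the same index:
#   duc ui /var     (terminal interface)
#   duc gui /var    (graphical interface)
```

The listing and browsing commands only read the stored index, so they return immediately even for large trees.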