I have a service which is registered with two target groups: alb and wwwalb.

The alb target group is for internal requests, and the wwwalb target group is for external requests.

When I deploy my service, it starts up as it should and starts accepting requests. Looking at the access log, I can see that both the alb and the wwwalb probe the service. Since the service runs in 3 zones, I see 3 requests per load balancer (one from each zone), 6 in total.

    - - - [19/Jun/2022:20:45:28 +0200] "GET /api/system/status HTTP/1.1" 204 -
    - - - [19/Jun/2022:20:45:28 +0200] "GET /api/system/status HTTP/1.1" 204 -
    - - - [19/Jun/2022:20:45:28 +0200] "GET /api/system/status HTTP/1.1" 204 -
    - - - [19/Jun/2022:20:45:30 +0200] "GET /api/system/status HTTP/1.1" 204 -
    - - - [19/Jun/2022:20:45:30 +0200] "GET /api/system/status HTTP/1.1" 204 -
    - - - [19/Jun/2022:20:45:30 +0200] "GET /api/system/status HTTP/1.1" 204 -

Despite this, the service is eventually taken down because the target groups believe the service is unhealthy. In fact, they never seem to consider the service healthy at all.

An API call to check on the target group tells me the following:

    {
        "TargetHealthDescriptions": [
            {
                "Target": {
                    "Id": "10.1.143.94",
                    "Port": 8182,
                    "AvailabilityZone": "eu-north-1b"
                },
                "HealthCheckPort": "8182",
                "TargetHealth": {
                    "State": "unhealthy",
                    "Reason": "Target.FailedHealthChecks",
                    "Description": "Health checks failed"
                }
            }
        ]
    }
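For reference, output of this shape is what the ELBv2 `DescribeTargetHealth` operation returns. A minimal Python sketch of fetching and summarizing it with boto3 could look like the following. The helper names are made up for illustration, the target group ARN is a placeholder to be filled in, and credentials and region are assumed to come from the usual boto3 configuration.

```python
def summarize_target_health(response):
    """Flatten a DescribeTargetHealth response into one dict per target."""
    rows = []
    for desc in response.get("TargetHealthDescriptions", []):
        target = desc.get("Target", {})
        health = desc.get("TargetHealth", {})
        rows.append({
            "id": target.get("Id"),
            "port": target.get("Port"),
            "zone": target.get("AvailabilityZone"),
            "state": health.get("State"),
            "reason": health.get("Reason"),
            "description": health.get("Description"),
        })
    return rows


def fetch_target_health(target_group_arn):
    """Call DescribeTargetHealth for one target group (ARN supplied by the caller)."""
    # boto3 is imported lazily so the summarizing helper can be used without it.
    import boto3

    client = boto3.client("elbv2")
    return client.describe_target_health(TargetGroupArn=target_group_arn)


# Hypothetical usage, with a real ARN substituted for the placeholder:
#   for row in summarize_target_health(fetch_target_health("<target-group-arn>")):
#       print(row)
```

Running this against both the alb and the wwwalb target groups would show whether the two groups report the same state and reason for the same target.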

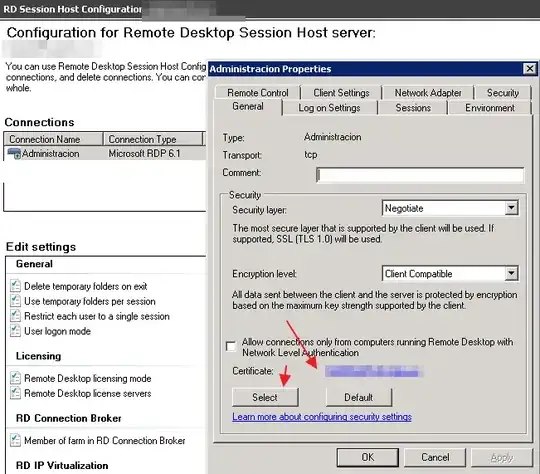

I've been looking at target group metrics, load balancer configurations for a while now - but I simply cannot find anything about the setup which could explain this behaviour. The health check settings seems fine to me as well :
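The same health check settings can also be read through the API, which makes it easier to compare them side by side with what the access log shows (for instance the 204 responses above). The following is only a sketch under assumptions: the helper names are hypothetical, the ARN is a placeholder, and the matcher parsing covers just the plain forms of the HTTP success codes setting (a single code, a comma-separated list, or a range). Whether the success codes are actually the problem here is not known.

```python
HEALTH_CHECK_KEYS = [
    "HealthCheckProtocol",
    "HealthCheckPort",
    "HealthCheckPath",
    "HealthCheckIntervalSeconds",
    "HealthCheckTimeoutSeconds",
    "HealthyThresholdCount",
    "UnhealthyThresholdCount",
]


def health_check_settings(target_group):
    """Pick the health check fields out of one DescribeTargetGroups entry."""
    settings = {key: target_group.get(key) for key in HEALTH_CHECK_KEYS}
    # The expected HTTP status codes live under Matcher.HttpCode.
    settings["HttpCode"] = (target_group.get("Matcher") or {}).get("HttpCode")
    return settings


def http_code_matches(matcher, status):
    """Return True if status is covered by a matcher such as "200", "200,202" or "200-299"."""
    for part in matcher.split(","):
        part = part.strip()
        if "-" in part:
            low, high = part.split("-", 1)
            if int(low) <= status <= int(high):
                return True
        elif part and int(part) == status:
            return True
    return False


def fetch_target_group(target_group_arn):
    """Call DescribeTargetGroups for a single target group ARN."""
    # Lazy import so the pure helpers above work without boto3 installed.
    import boto3

    client = boto3.client("elbv2")
    response = client.describe_target_groups(TargetGroupArns=[target_group_arn])
    return response["TargetGroups"][0]


# Hypothetical usage, with a real ARN substituted for the placeholder:
#   settings = health_check_settings(fetch_target_group("<target-group-arn>"))
#   print(settings, http_code_matches(settings["HttpCode"], 204))
```

Comparing the output for the alb and wwwalb target groups would also reveal any difference between their health check configurations.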

I only recently added the wwwalb, so I suspect that having this service in two target groups somehow causes this. Then again, registering a service with two target groups is supported and documented by AWS.

Is there a way to get more details from AWS about what's really causing this issue? Any way of looking into why AWS believes the service is failing?