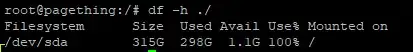

This is driving me crazy! My server ran out of space, so I cleaned it up by deleting some folders, but the percentage of free space didn't go up. This is what I now see:

As you can see, it shows a size of 315 GB, of which 298 GB is in use. So why does it show 100% used? The only reason I have the 1.1 GB free that you can see is that I removed more files and rebooted, even though I had already deleted 15+ GB of files before :/
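For reference, the Size/Used/Avail/Use% figures that `df` prints can be reproduced from `statvfs`. This is a minimal sketch, assuming a Linux host and that the full filesystem is mounted at `/` (adjust the path otherwise); the helper names `figures_from_counts` and `df_figures` are hypothetical. Like GNU `df`, it computes Use% as used / (used + available), rounded up, where "available" excludes the free blocks reserved for root. That is why the percentage can reach 100% while Size is still noticeably larger than Used.

```python
import os


def figures_from_counts(blocks, bfree, bavail, frsize):
    """Turn raw statvfs block counts into df-style figures (bytes, plus Use%)."""
    size = blocks * frsize
    used = (blocks - bfree) * frsize        # blocks allocated to files
    avail = bavail * frsize                 # free blocks usable by non-root users
    reserved = (bfree - bavail) * frsize    # free blocks only root may use
    # GNU df divides by used + avail (not by size) and rounds up.
    denom = used + avail
    use_pct = (used * 100 + denom - 1) // denom if denom else 0
    return {"size": size, "used": used, "avail": avail,
            "reserved": reserved, "use_pct": use_pct}


def df_figures(path="/"):
    """df-style figures for the filesystem that contains `path`."""
    st = os.statvfs(path)
    return figures_from_counts(st.f_blocks, st.f_bfree, st.f_bavail, st.f_frsize)


if __name__ == "__main__":
    gib = 1024 ** 3
    figs = df_figures("/")  # assumption: the full filesystem is mounted at /
    for key in ("size", "used", "avail", "reserved"):
        print(f"{key:>8}: {figs[key] / gib:6.1f} GiB")
    print(f"    Use%: {figs['use_pct']}%")
```

Running it prints each figure in GiB. A large `reserved` value next to a near-zero `avail` is exactly the combination that makes Use% read 100%.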

I've tried quite a few things, such as `lsof +L1`:

```
COMMAND   PID   USER      FD   TYPE DEVICE SIZE/OFF NLINK NODE  NAME
php-fpm7. 726   root      3u   REG  8,0    0        0     605   /tmp/.ZendSem.sRUIJj (deleted)
mysqld    863   mysql     5u   REG  8,0    0        0     2938  /tmp/ibj2MjTy (deleted)
mysqld    863   mysql     6u   REG  8,0    0        0     10445 /tmp/ibgsRaLu (deleted)
mysqld    863   mysql     7u   REG  8,0    0        0     76744 /tmp/ibx2g3Cq (deleted)
mysqld    863   mysql     8u   REG  8,0    0        0     76750 /tmp/ib7D93oi (deleted)
mysqld    863   mysql     12u  REG  8,0    0        0     77541 /tmp/ibSr0xre (deleted)
dovecot   1278  root      139u REG  0,23   0        0     2021  /run/dovecot/login-master-notify6ae65d15ebbecfbf (deleted)
dovecot   1278  root      172u REG  0,23   0        0     2022  /run/dovecot/login-master-notify4b18cb63ddb75aab (deleted)
dovecot   1278  root      177u REG  0,23   0        0     2023  /run/dovecot/login-master-notify05ff81e3cea47ffa (deleted)
cron      2239  root      5u   REG  8,0    0        0     1697  /tmp/#1697 (deleted)
cron      2240  root      5u   REG  8,0    0        0     77563 /tmp/#77563 (deleted)
sh        2243  root      10u  REG  8,0    0        0     1697  /tmp/#1697 (deleted)
sh        2243  root      11u  REG  8,0    0        0     1697  /tmp/#1697 (deleted)
sh        2244  root      10u  REG  8,0    0        0     77563 /tmp/#77563 (deleted)
sh        2244  root      11u  REG  8,0    0        0     77563 /tmp/#77563 (deleted)
imap-logi 2512  dovenull  4u   REG  0,23   0        0     2023  /run/dovecot/login-master-notify05ff81e3cea47ffa (deleted)
imap-logi 3873  dovenull  4u   REG  0,23   0        0     2023  /run/dovecot/login-master-notify05ff81e3cea47ffa (deleted)
pop3-logi 3915  dovenull  4u   REG  0,23   0        0     2021  /run/dovecot/login-master-notify6ae65d15ebbecfbf (deleted)
pop3-logi 3917  dovenull  4u   REG  0,23   0        0     2021  /run/dovecot/login-master-notify6ae65d15ebbecfbf (deleted)
php-fpm7. 4218  fndesk    3u   REG  8,0    0        0     605   /tmp/.ZendSem.sRUIJj (deleted)
php-fpm7. 4268  executive 3u   REG  8,0    0        0     605   /tmp/.ZendSem.sRUIJj (deleted)
```

But I can't see anything in there that is still holding on to the space from the deleted files.
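The check that `lsof +L1` performs (open files whose link count has dropped below 1) can also be done by reading `/proc` directly, which makes it easy to total how much space such files still hold. This is a minimal sketch, assuming Linux and root privileges (other users' `/proc/<pid>/fd` entries are not readable otherwise). `deleted_open_files` and `OpenDeleted` are hypothetical names. It reports `st_size`, the same apparent size `lsof` shows as SIZE/OFF, and it counts a file held open by several processes only once in the total.

```python
import os
import stat
from collections import namedtuple

# One open descriptor that points at an unlinked (deleted) regular file.
OpenDeleted = namedtuple("OpenDeleted", "pid fd target size inode_key")


def deleted_open_files(proc="/proc"):
    """Yield an OpenDeleted for every open regular file with link count 0.

    This mirrors the idea behind `lsof +L1`: such files have been removed
    from the directory tree, but their blocks stay allocated until every
    process holding them open closes the descriptor.
    """
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        fd_dir = os.path.join(proc, pid, "fd")
        try:
            fds = os.listdir(fd_dir)
        except OSError:  # process exited, or permission denied
            continue
        for fd in fds:
            fd_path = os.path.join(fd_dir, fd)
            try:
                st = os.stat(fd_path)  # follows the link to the open file itself
                target = os.readlink(fd_path)
            except OSError:  # descriptor closed while we were looking
                continue
            if stat.S_ISREG(st.st_mode) and st.st_nlink == 0:
                yield OpenDeleted(int(pid), int(fd), target, st.st_size,
                                  (st.st_dev, st.st_ino))


if __name__ == "__main__":
    sizes = {}
    for entry in deleted_open_files():
        print(f"pid={entry.pid} fd={entry.fd} size={entry.size} {entry.target}")
        sizes[entry.inode_key] = entry.size  # same file in several processes counts once
    print(f"total size of deleted-but-open files: {sum(sizes.values())} bytes")
```

If the total is small compared with the space that went missing, deleted-but-open files are not what is consuming it. If you care about allocated rather than apparent size, `st.st_blocks * 512` is the closer figure.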