I run a Python script on a VPS, and the script keeps getting killed.

I already had this problem with a 2 GB RAM server configuration. I then upgraded to 4 GB, which seemed to fix it for 2-3 days, but now it is happening again. When I check the memory graphs, the server's memory load peaks at about 20%, and the script is still killed.

The output of `grep Killed /var/log/syslog` is the following:

May 8 07:42:04 ubuntu kernel: [307104.437195] Out of memory: Killed process 28187 (python3) total-vm:4503732kB, anon-rss:2885740kB, file-rss:0kB, shmem-rss:0kB, UID:0 pgtables:6780kB oom_score_adj:0

May 8 07:50:46 ubuntu kernel: [307627.171853] Out of memory: Killed process 28387 (python3) total-vm:4569972kB, anon-rss:2887600kB, file-rss:0kB, shmem-rss:0kB, UID:0 pgtables:6772kB oom_score_adj:0

May 8 07:55:18 ubuntu kernel: [ 213.775489] Out of memory: Killed process 1944 (python3) total-vm:4927788kB, anon-rss:3209304kB, file-rss:2460kB, shmem-rss:0kB, UID:0 pgtables:7216kB oom_score_adj:0

May 8 08:01:31 ubuntu kernel: [ 586.779207] Out of memory: Killed process 2111 (python3) total-vm:4593116kB, anon-rss:3205464kB, file-rss:0kB, shmem-rss:0kB, UID:0 pgtables:7156kB oom_score_adj:0
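
To make the numbers in these lines easier to read, here is a minimal sketch that parses one of them and converts the sizes to GiB. It assumes the kernel's `kB` means units of 1024 bytes; the regex and the `parse_oom_line` helper are my own illustration, not part of any tool. By this conversion the `anon-rss` values above range from about 2.75 GiB to about 3.06 GiB for the killed process.

```python
import re

# Matches the fields of a kernel "Out of memory: Killed process" line.
OOM_RE = re.compile(
    r"Killed process (?P<pid>\d+) \((?P<name>[^)]+)\) "
    r"total-vm:(?P<total_vm>\d+)kB, anon-rss:(?P<anon_rss>\d+)kB"
)


def parse_oom_line(line):
    """Return pid, process name, and memory sizes in GiB, or None if no match."""
    m = OOM_RE.search(line)
    if m is None:
        return None
    kib_per_gib = 1024 ** 2  # assumption: kernel "kB" are units of 1024 bytes
    return {
        "pid": int(m["pid"]),
        "name": m["name"],
        "total_vm_gib": int(m["total_vm"]) / kib_per_gib,
        "anon_rss_gib": int(m["anon_rss"]) / kib_per_gib,
    }


# First log line from the output above.
sample = (
    "May 8 07:42:04 ubuntu kernel: [307104.437195] Out of memory: "
    "Killed process 28187 (python3) total-vm:4503732kB, anon-rss:2885740kB, "
    "file-rss:0kB, shmem-rss:0kB, UID:0 pgtables:6780kB oom_score_adj:0"
)
info = parse_oom_line(sample)
print(f"{info['name']} pid {info['pid']}: anon-rss {info['anon_rss_gib']:.2f} GiB")
```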

Is there anything I can do?

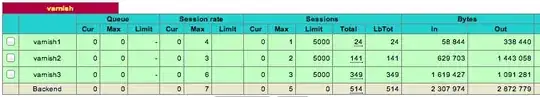

Here is the memory load screenshot. (Yes, there are two spikes at 98% memory, but those occurred in only 2 of about 4 attempts at running the script, meaning that in the other cases it was killed even at 20% memory load.)

By the way, I assume the times in the image correspond to those in the log above, but you have to subtract 2 hours.
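
To cross-check the graph against what the process itself uses, the script could log its own peak memory. This is only a sketch, assuming Linux (where `ru_maxrss` is reported in KiB); `peak_rss_mib` is a hypothetical helper name and the list allocation stands in for the script's real work.

```python
import resource


def peak_rss_mib():
    """Peak resident set size of this process in MiB (ru_maxrss is KiB on Linux)."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss / 1024


data = [0] * 1_000_000  # placeholder for the script's real work
print(f"peak RSS so far: {peak_rss_mib():.1f} MiB")
```

Calling this periodically, or just before the heavy steps, would show whether the process approaches the `anon-rss` values in the kernel log even when the graph does not show it.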