I'm in the midst of migrating an application away from a Win2k16 server, but it seems the Windows server doesn't agree with the plan.

When migrating the data, I realized that performance is incredibly bad, i.e. only 5-7 MBit/s whereas it should be 10x that value.

In order to investigate more, I switched from measuring SMB performance to using iperf3 with which I could reproduce the problem. I've a lot of experience with networking and debugging network issues, but this is one of those scenarios that seemingly defy any logic. In other words, I'm stuck and have no idea what's going on and how to tackle the problem.
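For reference, a minimal sketch of how such iperf3 runs could be scripted so that every path is measured the same way. It assumes `iperf3 -s` is already running on the target and that the JSON report contains `end.sum_sent` and `end.sum_received`, as current iperf3 releases emit for TCP tests. The function names and the host name in the usage comment are illustrative, not part of my actual setup.

```python
import json
import subprocess


def run_iperf3(server: str, seconds: int = 10, reverse: bool = False) -> dict:
    """Run the iperf3 client against `server` and return the parsed JSON report."""
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # reverse mode: the server sends, the client receives
    proc = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(proc.stdout)


def summarize(report: dict) -> dict:
    """Extract sender/receiver throughput in Mbit/s and the retransmit count."""
    end = report["end"]
    sent = end["sum_sent"]
    received = end["sum_received"]
    return {
        "sent_mbit": sent["bits_per_second"] / 1e6,
        "received_mbit": received["bits_per_second"] / 1e6,
        # May be missing, depending on the sender's platform and the direction.
        "retransmits": sent.get("retransmits"),
    }


# Usage (hypothetical target name):
#   print(summarize(run_iperf3("win")))
#   print(summarize(run_iperf3("win", reverse=True)))
```

Running both directions against each target makes it easier to see whether the slowdown depends on which side is sending.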

So I'm looking for any ideas that help to debug, pin down and resolve the issue.

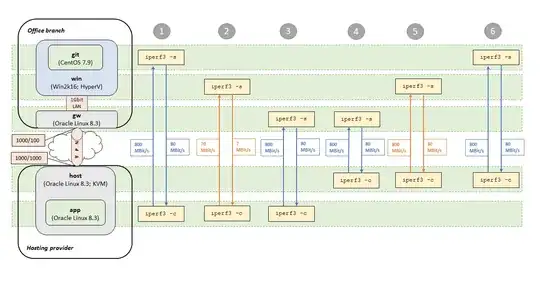

Here's a picture showing the issue:

A VM (app) on the hosting provider side has bad performance connecting to the Hyper-V host (win) in the office (2), whereas the hosting provider host (host) itself works as expected (5). Also the VM has acceptable performance connecting to a Hyper-V VM (git) on the office host (1).

The setup:

- existing branch office:
    - `gw` system connected to Internet and local LAN; wireguard VPN client
    - `win` part of local LAN, running Hyper-V as well
        - 2 phys LAN adapters, combined with NIC teaming (Hyper-V Port balancing)
        - External Hyper-V switch set to be shared with host OS
        - `win` IP address is set on the virtual adapter coming from this host OS sharing
    - `git` Hyper-V VM, also part of the same Hyper-V switch LAN
    - both `win` and `git` do not have any special routes and use `gw` as their default gateway.

- hosting provider:
    - `host` running Linux libvirtd/KVM; wireguard VPN server
    - `app` Linux VM

- networking:
    - branch office LAN: 1 Gbit/s
    - branch office Internet: 1000 Down / 100 Up
    - hosting provider Internet: 1000 Down / 1000 Up
    - `Internet <--> Internet` speed measured approx.: 900/90 MBit/s
    - `VPN <--> Internet <--> Internet <--> VPN` speed measured approx.: 800/80 MBit/s

- IP networking:
    - `192.168.34.0/24` office LAN
    - `172.16.48.0/30` wireguard VPN tunnel
    - `192.168.46.0/30` VM network at hosting provider

- L2 networking:
    - `gw` has trunk links (static) and VLAN tagging for Internet access
    - `gw` has trunk links (static) and VLAN tagging for office LAN access
    - `win` has trunk links (static) with office LAN untagged
    - No VLANs or trunk links on hosting provider side. `host` has 1 phys adapter with Internet access
- To ensure no fragmentation issues, the MTU on the `host` VPN tunnel interface and on the `app` virtual adapter are both set to 1400.
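As a sanity check on the MTU choice, here is a small sketch of the packet-size arithmetic. It assumes a 1500-byte physical MTU on the Internet path (not verified here) and the usual WireGuard data-packet overhead of 32 bytes (16-byte header plus 16-byte authentication tag) on top of UDP and the outer IP header. The helper names are made up for illustration.

```python
IPV4_HEADER = 20
IPV6_HEADER = 40
UDP_HEADER = 8
ICMP_HEADER = 8
TCP_HEADER = 20          # without TCP options
WIREGUARD_OVERHEAD = 32  # 16-byte data message header + 16-byte auth tag


def outer_packet_size(tunnel_mtu: int, outer_ipv6: bool = False) -> int:
    """Size on the wire of a full-size tunnel packet after WireGuard encapsulation."""
    outer_ip = IPV6_HEADER if outer_ipv6 else IPV4_HEADER
    return tunnel_mtu + WIREGUARD_OVERHEAD + UDP_HEADER + outer_ip


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload per IPv4 packet for a given MTU (no TCP options)."""
    return mtu - IPV4_HEADER - TCP_HEADER


def icmp_payload_for_mtu(mtu: int) -> int:
    """ICMP echo payload size that exactly fills an IPv4 packet of size `mtu`."""
    return mtu - IPV4_HEADER - ICMP_HEADER


tunnel_mtu = 1400
print(outer_packet_size(tunnel_mtu))                   # IPv4 transport
print(outer_packet_size(tunnel_mtu, outer_ipv6=True))  # IPv6 transport
print(tcp_mss(tunnel_mtu))
print(icmp_payload_for_mtu(tunnel_mtu))
```

Under these assumptions a full 1400-byte tunnel packet stays below 1500 bytes on the wire for both IPv4 and IPv6 transport. The last value is the payload size to use for a don't-fragment ping (on Linux, `ping -M do -s <size>`) when probing the path through the tunnel.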

Here's my reasoning and testing so far:

As speed tests (1) and (3) work as expected, there's no inherent/general problem with networking, i.e. no issue on the Linux software bridge or the wireguard VPN tunnel (which isn't fast by itself, but constant in speed).

As speed test (5) works as expected, there's no inherent/general problem with win network access. That's consistent with users not reporting any issues with the application running there.

As seen from the win side, there's not much difference between host or app connecting, except for different IP subnets.

As the only difference seems to be in IP subnets, I thought that maybe some MAC/IP/port hashing on the switches leads to issues with the trunk links. Therefore I reduced all trunk links down to one physical link each. No change in behaviour.

Also, since win has onboard Broadcom adapters and there are reports of Hyper-V/Broadcom issues with regard to virtual machine queues, I disabled the Virtual Machine Queues feature on all adapters I could find the setting for (and rebooted the system).

In an attempt to further rule out any relation to the IP subnet of the VM, I tested with another VM in another subnet on the hosting provider side, with no success. Finally I switched from routing to NAT, still with no success.

At this point, the win system sees all tests coming from the same IP address, but still behaves differently depending on the actual source. This could indicate some issue/difference in the TCP stack of the app VM vs. host system, but both systems run the exact same OS and are on the same patch level (Oracle Linux 8.3, basically RHEL8, 5.4.17-2036.104.4.el8uek.x86_64).
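To rule out a configuration difference between the two Linux systems despite the identical kernel, the TCP-related sysctls can be dumped on both and compared. Below is a minimal sketch, assuming the standard `/proc/sys/net/ipv4` layout. The selection of settings is my own guess at what might matter, and the function names are hypothetical.

```python
from pathlib import Path

# A hand-picked selection of TCP settings; extend as needed.
TCP_SYSCTLS = (
    "tcp_congestion_control",
    "tcp_window_scaling",
    "tcp_timestamps",
    "tcp_sack",
    "tcp_mtu_probing",
    "tcp_rmem",
    "tcp_wmem",
)


def read_tcp_sysctls(root: str = "/proc/sys/net/ipv4") -> dict:
    """Read the selected sysctls; missing files are recorded as None."""
    values = {}
    for name in TCP_SYSCTLS:
        path = Path(root) / name
        if path.exists():
            # Collapse tabs/newlines so tcp_rmem/tcp_wmem triples compare cleanly.
            values[name] = " ".join(path.read_text().split())
        else:
            values[name] = None
    return values


def diff_settings(a: dict, b: dict) -> dict:
    """Return {name: (value_a, value_b)} for every setting that differs."""
    keys = sorted(set(a) | set(b))
    return {k: (a.get(k), b.get(k)) for k in keys if a.get(k) != b.get(k)}
```

The idea is to run `read_tcp_sysctls()` on both app and host, save the results (for example as JSON), and feed both into `diff_settings()`. An empty result would suggest the difference lies in the virtual NIC or its offload settings rather than in the TCP configuration.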

As a last test, I set up SSH local port forwarding between app and host, i.e. connected to win via the forwarded port on host, and got the desired speed. This is expected, as now the actual connection comes from host again.

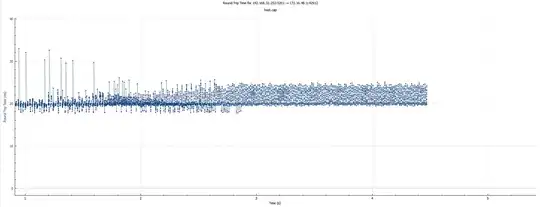

Finally, as seen on the local LAN side of gw, some graphs (blue is Bytes Out and green is Rcv Win):

TCP window size win --> host

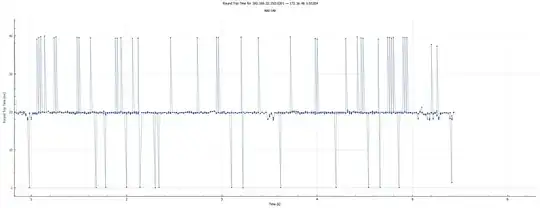

TCP window size win --> app

I'm not sure if that tells much, except that the 10x performance difference is also visible in the window size. There is also a strange scale-up after 3 seconds, but without any change in throughput.

TCP RTT win --> host

TCP RTT win --> app

Note the spikes in both directions in the last graph. Could this indicate some buffers (switch, Hyper-V, local networking stacks, etc.) being full? But then again, why does everything work when doing a git --> app test? Also, a ping test with various different sizes and targets (win, git, gw) from app didn't show any difference (RTT always around 20ms).
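To put the window and RTT graphs into relation: a single TCP flow cannot exceed roughly (data in flight) / RTT. The following sketch applies that rule of thumb with the ~20 ms RTT from the ping test. Here "window" means the effective amount of unacknowledged data, i.e. the smaller of the receive window and the sender's congestion window, so this is only an approximation.

```python
def max_throughput_mbit(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on single-flow TCP throughput for a given window and RTT."""
    return window_bytes * 8 / rtt_s / 1e6


def required_window_bytes(throughput_mbit: float, rtt_s: float) -> float:
    """Bandwidth-delay product: window needed to sustain a given throughput."""
    return throughput_mbit * 1e6 * rtt_s / 8


RTT = 0.020  # seconds, from the ping test

# Window needed for the expected ~80 MBit/s vs. the observed ~6 MBit/s.
print(required_window_bytes(80, RTT))
print(required_window_bytes(6, RTT))

# Ceiling for a 64 KiB window without window scaling.
print(max_throughput_mbit(65535, RTT))
```

With these numbers, the slow case corresponds to only about 15 kB in flight, whereas the expected speed needs about 200 kB. So whatever limits the app connection keeps the effective window roughly an order of magnitude too small, which would match the graphs.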

So again, any thoughts on what could be done/tested further are highly welcome!