I'm on Ubuntu 20.04 and am running KVM virtual machines locally, attached to a bridge interface on the host. The bridge and all VMs attached to it get their IP addresses via DHCP from a DSL router on the same network.

The bridge interface on the VM host looks like this:

    br0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 192.168.188.22 netmask 255.255.255.0 broadcast 192.168.188.255
            inet6 fe80::2172:1869:b4cb:ec84 prefixlen 64 scopeid 0x20<link>
            inet6 2a01:c22:8c21:4200:6dd0:e662:4f46:c591 prefixlen 64 scopeid 0x0<global>
            inet6 2a01:c22:8c21:4200:8d92:1ea5:3c93:3668 prefixlen 64 scopeid 0x0<global>
            ether 00:d8:61:9d:ad:c5 txqueuelen 1000 (Ethernet)
            RX packets 1512101 bytes 2026998740 (2.0 GB)
            RX errors 0 dropped 12289 overruns 0 frame 0
            TX packets 849612 bytes 1582945488 (1.5 GB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

I've enabled IP forwarding on the host and configured the VMs to use the host as the gateway.
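For reference, the setup described above could be reproduced roughly as follows. The exact commands I used are not the point here, so treat this as a sketch: the `run` helper and the `DRY_RUN` variable are purely illustrative, and `eth0` is the interface name seen inside the VM. By default the script only prints the commands instead of executing them.

```shell
#!/bin/sh
# Sketch only: prints the commands unless DRY_RUN=0 is set (root is needed then).
HOST_IP=192.168.188.22   # br0 address of the VM host
VM_IFACE=eth0            # interface name inside the VM

# Illustrative helper: echo the command in dry-run mode, otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# On the VM host: let the kernel forward IPv4 packets between interfaces.
host_setup() {
    run sysctl -w net.ipv4.ip_forward=1
}

# Inside each VM: send all non-local traffic to the VM host.
vm_setup() {
    run ip route replace default via "$HOST_IP" dev "$VM_IFACE"
}

host_setup
vm_setup
```

Note that `sysctl -w` does not survive a reboot; a persistent setting would normally go into a file under `/etc/sysctl.d/`.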

This is what the routes look like inside a VM:

    # route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.188.22  0.0.0.0         UG    0      0        0 eth0
    192.168.188.0   0.0.0.0         255.255.255.0   U     100    0        0 eth0

Routes on the VM host:

    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.188.1   0.0.0.0         UG    425    0        0 br0
    10.8.0.1        10.8.0.17       255.255.255.255 UGH   50     0        0 tun0
    10.8.0.17       0.0.0.0         255.255.255.255 UH    50     0        0 tun0
    172.28.52.0     10.8.0.17       255.255.255.0   UG    50     0        0 tun0
    192.168.4.0     10.8.0.17       255.255.255.0   UG    50     0        0 tun0
    192.168.5.0     10.8.0.17       255.255.255.0   UG    50     0        0 tun0
    192.168.10.0    10.8.0.17       255.255.255.0   UG    50     0        0 tun0
    192.168.50.0    10.8.0.17       255.255.255.0   UG    50     0        0 tun0
    192.168.100.0   10.8.0.17       255.255.255.0   UG    50     0        0 tun0
    192.168.188.0   0.0.0.0         255.255.255.0   U     425    0        0 br0
    192.168.188.1   0.0.0.0         255.255.255.255 UH    425    0        0 br0
    213.238.34.194  192.168.188.1   255.255.255.255 UGH   425    0        0 br0
    213.238.34.212  10.8.0.17       255.255.255.255 UGH   50     0        0 tun0
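To make explicit how I read the host table above, here is a small sketch of a longest-prefix lookup over a few of its entries. It is a simplification (the real kernel also takes metrics and policy rules into account), and the function names `ip_to_int` and `lookup_iface` are made up for this illustration. With these entries, a packet for 192.168.50.2 arriving at the host should be matched by the tun0 route rather than the default route on br0.

```shell
#!/bin/sh
# Sketch: longest-prefix match over a subset of the host's routes.
# Each entry is "destination netmask interface", copied from the host table.
ROUTES="0.0.0.0 0.0.0.0 br0
192.168.50.0 255.255.255.0 tun0
192.168.188.0 255.255.255.0 br0
10.8.0.17 255.255.255.255 tun0"

# Convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell so the IFS change stays local.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Print the interface of the most specific route that matches address $1.
lookup_iface() {
    addr=$(ip_to_int "$1")
    best_mask=-1
    best_iface=none
    while read -r dest mask iface; do
        d=$(ip_to_int "$dest")
        m=$(ip_to_int "$mask")
        # A route matches if the masked address equals its destination;
        # a numerically larger mask means a longer (more specific) prefix.
        if [ $(( $addr & $m )) -eq "$d" ] && [ "$m" -gt "$best_mask" ]; then
            best_mask=$m
            best_iface=$iface
        fi
    done <<EOF
$ROUTES
EOF
    echo "$best_iface"
}

lookup_iface 192.168.50.2     # a machine on one of the tunnelled networks
lookup_iface 192.168.188.1    # the DSL router on the local LAN
lookup_iface 8.8.8.8          # arbitrary public address, default route only
```

On the host itself, `ip route get 192.168.50.2` shows the route the kernel actually selects.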

Accessing IPs on the 192.168.188.0/24 network and the public internet from inside the virtual machines works fine, but I can't figure out how to route traffic from inside the VMs to any of the IPs/networks that are reachable through the "tun0" interface on the VM host itself.

/proc/sys/net/ipv4/ip_forward is set to "1", and I've manually flushed all firewall rules from all tables/chains (using iptables -F) to avoid any interference. What else do I need in order to, for example, run "ping 192.168.50.2" from inside one of the virtual machines?
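The checks mentioned above can be sketched as a small script. The helper name `report_forwarding` and the overridable `PROC_NET` variable are illustrative only; the paths under `/proc/sys/net/ipv4` are the standard per-interface forwarding switches, and reading them should not require root.

```shell
#!/bin/sh
# Sketch: report the global and per-interface IPv4 forwarding switches.
PROC_NET=${PROC_NET:-/proc/sys/net/ipv4}   # overridable, for illustration only

# Print "<label>: enabled|disabled|unknown" for one forwarding file.
report_forwarding() {
    label=$1
    file=$2
    if [ ! -r "$file" ]; then
        echo "$label: unknown"
    elif [ "$(cat "$file")" = "1" ]; then
        echo "$label: enabled"
    else
        echo "$label: disabled"
    fi
}

report_forwarding global "$PROC_NET/ip_forward"
for iface in br0 tun0; do
    report_forwarding "$iface" "$PROC_NET/conf/$iface/forwarding"
done

# Listing whatever firewall rules remain requires root, for example:
#   iptables -S; iptables -t nat -S; iptables -t mangle -S
```

Without a `-t` option, `iptables -F` only flushes the filter table, so listing the nat and mangle tables separately confirms that they are empty as well.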

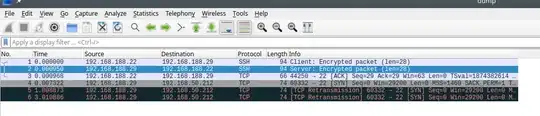

This is what I captured by running "tcpdump -i br0 host " on the VM host while trying to access 192.168.50.212 (one of the machines on the "tun0" network) from inside one of the VMs:

How can I get the VMs attached to the local "br0" interface to also reach the networks that are accessible only through the local "tun0" device?