I don't know if this stackexchange site is the most suited to my question, please flag this for mods to move the question elsewhere if it isn't.

Context & issue

I installed Proxmox on a physical machine (home server), chose ZFS mirror during the installation and created a big Ubuntu server VM taking nearly all resources.

Whenever I make heavy disk I/O in the VM (writing or reading large amounts of data), the RAM usage grows and after it reaches 100%, the VM crashes. When reading the output of htop, it's the orange portion of the RAM that grows, which I understand is the buffer/cache used by kernel and should be recoverable.

Setup

My current setup is the following:

- 24GB RAM

- 2 x 2TB HDD

- Proxmox installed on both drives, ZFS mirror chosen during the install.

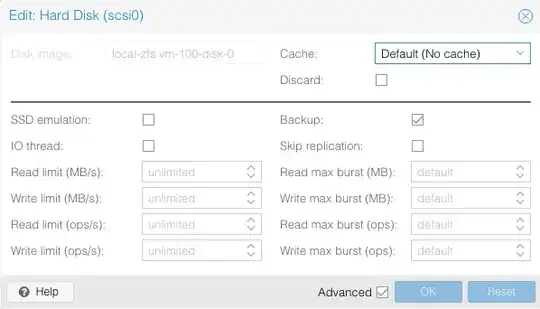

- Ubuntu 20.04 on a VM on Proxmox, taking 1.5TB of space and limited to 20GB of RAM. It's using LVM and ext4, the VirtIO SCSI controller and mostly default values from Proxmox.

Research

I didn't really find anything like this with my favorite search engine but maybe I just didn't use the right keywords

I read that poor disk performance on ZFS might be linked to an inappropriate ashift value. Mine seems to be 12 for disks with a sector size of 4096 bytes, which is ok from what I understand (2^12 = 4096), see code block below.

I tried reducing the RAM allocated to the VM to 16GB in the event that ZFS needed more to handle all this data flow but it just made it crash faster.

The zpool seems healthy as far as I can tell (see code block below).

Initially the Ubuntu install came with an 8GB swap file which I disabled, didn't help.

I'm pretty much out of ideas now. Feel free to point for obvious mistakes or oversight!

I guess my questions are: why does it crash ? and why does the RAM usage grow?

gaugendre@ubuntu:~$ free -h # the "buff/cache" grows

total used free shared buff/cache available

Mem: 19Gi 1.4Gi 4.7Gi 119Mi 13Gi 17Gi

Swap: 0B 0B 0B

root@pm:~# zpool get all | grep ashift

rpool ashift 12 local

root@pm:~# fdisk -l

Disk /dev/sda: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors

Disk model: WDC WD20EFRX-68E

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: D4515220-E091-40B3-B6CC-2FE98C69A5F2

Device Start End Sectors Size Type

/dev/sda1 34 2047 2014 1007K BIOS boot

/dev/sda2 2048 1050623 1048576 512M EFI System

/dev/sda3 1050624 3907029134 3905978511 1.8T Solaris /usr & Apple ZFS

Partition 1 does not start on physical sector boundary.

Disk /dev/sdb: 1.8 TiB, 2000398934016 bytes, 3907029168 sectors

Disk model: WDC WD20EFRX-68E

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: 1A09CBBB-F15C-47BD-A5E6-BC616AA46EB6

Device Start End Sectors Size Type

/dev/sdb1 34 2047 2014 1007K BIOS boot

/dev/sdb2 2048 1050623 1048576 512M EFI System

/dev/sdb3 1050624 3907029134 3905978511 1.8T Solaris /usr & Apple ZFS

Partition 1 does not start on physical sector boundary.

Disk /dev/zd0: 1.5 TiB, 1610612736000 bytes, 3145728000 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 8192 bytes

I/O size (minimum/optimal): 8192 bytes / 8192 bytes

Disklabel type: gpt

Disk identifier: 8F028008-A5B1-4D8A-BF51-825BCC73949F

Device Start End Sectors Size Type

/dev/zd0p1 2048 4095 2048 1M BIOS boot

/dev/zd0p2 4096 2101247 2097152 1G Linux filesystem

/dev/zd0p3 2101248 3145725951 3143624704 1.5T Linux filesystem

root@pm:~# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

rpool 1.81T 157G 1.66T - - 0% 8% 1.00x ONLINE -

root@pm:~# zpool status -v

pool: rpool

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M7KVECCZ-part3 ONLINE 0 0 0

ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M3FASL3F-part3 ONLINE 0 0 0

errors: No known data errors

Proxmox OOM kill logs

Feb 02 15:51:39 pm kernel: pve-firewall invoked oom-killer: gfp_mask=0x100cca(GFP_HIGHUSER_MOVABLE), order=0, oom_score_adj=0

Feb 02 15:51:39 pm kernel: CPU: 1 PID: 1496 Comm: pve-firewall Tainted: P O 5.4.73-1-pve #1

Feb 02 15:51:39 pm kernel: Hardware name: To Be Filled By O.E.M. To Be Filled By O.E.M./A320M Pro4-F, BIOS P2.20 07/27/2020

Feb 02 15:51:39 pm kernel: Call Trace:

Feb 02 15:51:39 pm kernel: dump_stack+0x6d/0x9a

Feb 02 15:51:39 pm kernel: dump_header+0x4f/0x1e1

Feb 02 15:51:39 pm kernel: oom_kill_process.cold.33+0xb/0x10

Feb 02 15:51:39 pm kernel: out_of_memory+0x1ad/0x490

Feb 02 15:51:39 pm kernel: __alloc_pages_slowpath+0xd40/0xe30

Feb 02 15:51:39 pm kernel: ? __wake_up_common_lock+0x8c/0xc0

Feb 02 15:51:39 pm kernel: __alloc_pages_nodemask+0x2df/0x330

Feb 02 15:51:39 pm kernel: alloc_pages_current+0x81/0xe0

Feb 02 15:51:39 pm kernel: __page_cache_alloc+0x6a/0xa0

Feb 02 15:51:39 pm kernel: pagecache_get_page+0xbe/0x2e0

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x34/0x70

Feb 02 15:51:39 pm kernel: filemap_fault+0x887/0xa70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x34/0x70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x40/0x70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x34/0x70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x40/0x70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x34/0x70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x40/0x70

Feb 02 15:51:39 pm kernel: ? __switch_to_asm+0x34/0x70

Feb 02 15:51:39 pm kernel: ? xas_load+0xc/0x80

Feb 02 15:51:39 pm kernel: ? xas_find+0x17e/0x1b0

Feb 02 15:51:39 pm kernel: ? filemap_map_pages+0x28d/0x3b0

Feb 02 15:51:39 pm kernel: __do_fault+0x3c/0x130

Feb 02 15:51:39 pm kernel: __handle_mm_fault+0xe75/0x12a0

Feb 02 15:51:39 pm kernel: handle_mm_fault+0xc9/0x1f0

Feb 02 15:51:39 pm kernel: __do_page_fault+0x233/0x4c0

Feb 02 15:51:39 pm kernel: do_page_fault+0x2c/0xe0

Feb 02 15:51:39 pm kernel: page_fault+0x34/0x40

Feb 02 15:51:39 pm kernel: RIP: 0033:0x55e4e4e79b18

Feb 02 15:51:39 pm kernel: Code: Bad RIP value.

Feb 02 15:51:39 pm kernel: RSP: 002b:00007ffdaad052a0 EFLAGS: 00010206

Feb 02 15:51:39 pm kernel: RAX: 0000000000000000 RBX: 000055e4e6577260 RCX: 00007f073b0286f4

Feb 02 15:51:39 pm kernel: RDX: 0000000000000000 RSI: 00007ffdaad05260 RDI: 00007ffdaad05260

Feb 02 15:51:39 pm kernel: RBP: 000055e4e657ca78 R08: 0000000000000e02 R09: 0000000000000602

Feb 02 15:51:39 pm kernel: R10: 000000000517e000 R11: 0000000000000246 R12: 000055e4e89ef7b0

Feb 02 15:51:39 pm kernel: R13: 000055e4e657ca70 R14: 0000000000000000 R15: 0000000000000000

Feb 02 15:51:39 pm kernel: Mem-Info:

Feb 02 15:51:39 pm kernel: active_anon:5184091 inactive_anon:16558 isolated_anon:0

active_file:84 inactive_file:0 isolated_file:0

unevictable:1330 dirty:0 writeback:10 unstable:0

slab_reclaimable:13066 slab_unreclaimable:144860

mapped:14964 shmem:17028 pagetables:11872 bounce:0

free:40335 free_pcp:762 free_cma:0

Feb 02 15:51:39 pm kernel: Node 0 active_anon:20736364kB inactive_anon:66232kB active_file:628kB inactive_file:0kB unevictable:5320kB isolated(anon):0kB isolated(file):0kB mapped:59856kB dirty:0kB writeback:40kB shmem:68112kB shmem_thp: 0kB shmem_pmdmapped: 0kB anon_thp: 7663616kB writeback_tmp:0kB unstable:0kB all_unreclaimable? no

Feb 02 15:51:39 pm kernel: Node 0 DMA free:15884kB min:44kB low:56kB high:68kB active_anon:0kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB writepending:0kB present:15996kB managed:15884kB mlocked:0kB kernel_stack:0kB pagetables:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB

Feb 02 15:51:39 pm kernel: lowmem_reserve[]: 0 1357 21897 21897 21897

Feb 02 15:51:39 pm kernel: Node 0 DMA32 free:86332kB min:4184kB low:5572kB high:6960kB active_anon:1333188kB inactive_anon:0kB active_file:0kB inactive_file:4kB unevictable:0kB writepending:0kB present:1497008kB managed:1431132kB mlocked:0kB kernel_stack:0kB pagetables:8kB bounce:0kB free_pcp:248kB local_pcp:0kB free_cma:0kB

Feb 02 15:51:39 pm kernel: lowmem_reserve[]: 0 0 20539 20539 20539

Feb 02 15:51:39 pm kernel: Node 0 Normal free:59628kB min:63348kB low:84380kB high:105412kB active_anon:19403176kB inactive_anon:66232kB active_file:0kB inactive_file:400kB unevictable:5320kB writepending:40kB present:21482752kB managed:21040620kB mlocked:5320kB kernel_stack:6304kB pagetables:47480kB bounce:0kB free_pcp:2980kB local_pcp:28kB free_cma:0kB

Feb 02 15:51:39 pm kernel: lowmem_reserve[]: 0 0 0 0 0

Feb 02 15:51:39 pm kernel: Node 0 DMA: 1*4kB (U) 1*8kB (U) 0*16kB 0*32kB 2*64kB (U) 1*128kB (U) 1*256kB (U) 0*512kB 1*1024kB (U) 1*2048kB (M) 3*4096kB (M) = 15884kB

Feb 02 15:51:39 pm kernel: Node 0 DMA32: 3*4kB (UM) 0*8kB 0*16kB 6*32kB (UM) 8*64kB (UM) 70*128kB (UM) 60*256kB (U) 42*512kB (UM) 39*1024kB (U) 0*2048kB 0*4096kB = 86476kB

Feb 02 15:51:39 pm kernel: Node 0 Normal: 5350*4kB (UME) 460*8kB (UME) 177*16kB (UME) 718*32kB (UME) 112*64kB (UM) 9*128kB (U) 4*256kB (UM) 1*512kB (U) 0*1024kB 0*2048kB 0*4096kB = 60744kB

Feb 02 15:51:39 pm kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=1048576kB

Feb 02 15:51:39 pm kernel: Node 0 hugepages_total=0 hugepages_free=0 hugepages_surp=0 hugepages_size=2048kB

Feb 02 15:51:39 pm kernel: 18053 total pagecache pages

Feb 02 15:51:39 pm kernel: 0 pages in swap cache

Feb 02 15:51:39 pm kernel: Swap cache stats: add 0, delete 0, find 0/0

Feb 02 15:51:39 pm kernel: Free swap = 0kB

Feb 02 15:51:39 pm kernel: Total swap = 0kB

Feb 02 15:51:39 pm kernel: 5748939 pages RAM

Feb 02 15:51:39 pm kernel: 0 pages HighMem/MovableOnly

Feb 02 15:51:39 pm kernel: 127030 pages reserved

Feb 02 15:51:39 pm kernel: 0 pages cma reserved

Feb 02 15:51:39 pm kernel: 0 pages hwpoisoned

Feb 02 15:51:39 pm kernel: Tasks state (memory values in pages):

Feb 02 15:51:39 pm kernel: [ pid ] uid tgid total_vm rss pgtables_bytes swapents oom_score_adj name

Feb 02 15:51:39 pm kernel: [ 683] 0 683 19753 9110 172032 0 0 systemd-journal

Feb 02 15:51:39 pm kernel: [ 755] 0 755 5806 650 69632 0 -1000 systemd-udevd

Feb 02 15:51:39 pm kernel: [ 1005] 106 1005 1705 385 57344 0 0 rpcbind

Feb 02 15:51:39 pm kernel: [ 1006] 100 1006 23270 573 86016 0 0 systemd-timesyn

Feb 02 15:51:39 pm kernel: [ 1020] 0 1020 4878 611 77824 0 0 systemd-logind

Feb 02 15:51:39 pm kernel: [ 1021] 0 1021 93118 287 86016 0 0 lxcfs

Feb 02 15:51:39 pm kernel: [ 1022] 0 1022 41689 511 81920 0 0 zed

Feb 02 15:51:39 pm kernel: [ 1023] 104 1023 2264 444 57344 0 -900 dbus-daemon

Feb 02 15:51:39 pm kernel: [ 1024] 0 1024 68958 296 77824 0 0 pve-lxc-syscall

Feb 02 15:51:39 pm kernel: [ 1025] 0 1025 1022 326 49152 0 0 qmeventd

Feb 02 15:51:39 pm kernel: [ 1026] 0 1026 56455 662 90112 0 0 rsyslogd

Feb 02 15:51:39 pm kernel: [ 1029] 0 1029 3064 656 61440 0 0 smartd

Feb 02 15:51:39 pm kernel: [ 1030] 0 1030 535 240 40960 0 -1000 watchdog-mux

Feb 02 15:51:39 pm kernel: [ 1071] 0 1071 1681 330 49152 0 0 ksmtuned

Feb 02 15:51:39 pm kernel: [ 1137] 0 1137 954 288 45056 0 0 lxc-monitord

Feb 02 15:51:39 pm kernel: [ 1182] 0 1182 1722 61 49152 0 0 iscsid

Feb 02 15:51:39 pm kernel: [ 1187] 0 1187 1848 1257 49152 0 -17 iscsid

Feb 02 15:51:39 pm kernel: [ 1212] 0 1212 1402 350 49152 0 0 agetty

Feb 02 15:51:39 pm kernel: [ 1230] 0 1230 3962 630 69632 0 -1000 sshd

Feb 02 15:51:39 pm kernel: [ 1391] 0 1391 218005 697 208896 0 0 rrdcached

Feb 02 15:51:39 pm kernel: [ 1461] 0 1461 10868 651 86016 0 0 master

Feb 02 15:51:39 pm kernel: [ 1463] 107 1463 10968 597 86016 0 0 qmgr

Feb 02 15:51:39 pm kernel: [ 1465] 0 1465 378386 12276 450560 0 0 pmxcfs

Feb 02 15:51:39 pm kernel: [ 1473] 0 1473 2125 565 53248 0 0 cron

Feb 02 15:51:39 pm kernel: [ 1496] 0 1496 75361 20384 307200 0 0 pve-firewall

Feb 02 15:51:39 pm kernel: [ 1500] 0 1500 568 187 40960 0 0 none

Feb 02 15:51:39 pm kernel: [ 1505] 0 1505 74951 19974 307200 0 0 pvestatd

Feb 02 15:51:39 pm kernel: [ 1541] 0 1541 88255 29812 405504 0 0 pvedaemon

Feb 02 15:51:39 pm kernel: [ 1555] 0 1555 83335 23049 364544 0 0 pve-ha-crm

Feb 02 15:51:39 pm kernel: [ 1632] 33 1632 88663 30378 434176 0 0 pveproxy

Feb 02 15:51:39 pm kernel: [ 1638] 33 1638 17578 12613 184320 0 0 spiceproxy

Feb 02 15:51:39 pm kernel: [ 1640] 0 1640 83246 22949 368640 0 0 pve-ha-lrm

Feb 02 15:51:39 pm kernel: [ 1483] 0 1483 5418704 5111805 41844736 0 0 kvm

Feb 02 15:51:39 pm kernel: [ 15884] 0 15884 90381 30381 430080 0 0 pvedaemon worke

Feb 02 15:51:39 pm kernel: [ 30602] 0 30602 90429 30408 434176 0 0 pvedaemon worke

Feb 02 15:51:39 pm kernel: [ 10126] 0 10126 90380 30339 434176 0 0 pvedaemon worke

Feb 02 15:51:39 pm kernel: [ 21058] 33 21058 17631 12591 176128 0 0 spiceproxy work

Feb 02 15:51:39 pm kernel: [ 21085] 0 21085 21543 371 69632 0 0 pvefw-logger

Feb 02 15:51:39 pm kernel: [ 21097] 33 21097 88702 30316 417792 0 0 pveproxy worker

Feb 02 15:51:39 pm kernel: [ 21098] 33 21098 88663 30272 417792 0 0 pveproxy worker

Feb 02 15:51:39 pm kernel: [ 21099] 33 21099 88702 30278 417792 0 0 pveproxy worker

Feb 02 15:51:39 pm kernel: [ 7252] 107 7252 10956 616 86016 0 0 pickup

Feb 02 15:51:39 pm kernel: [ 32252] 0 32252 1314 153 53248 0 0 sleep

Feb 02 15:51:39 pm kernel: [ 2329] 0 2329 5806 544 65536 0 0 systemd-udevd

Feb 02 15:51:39 pm kernel: [ 2330] 0 2330 5806 542 65536 0 0 systemd-udevd

Feb 02 15:51:39 pm kernel: [ 2331] 0 2331 5806 542 65536 0 0 systemd-udevd

Feb 02 15:51:39 pm kernel: [ 2332] 0 2332 5806 542 65536 0 0 systemd-udevd

Feb 02 15:51:39 pm kernel: [ 4195] 0 4195 5806 653 65536 0 0 systemd-udevd

Feb 02 15:51:39 pm kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/qemu.slice/100.scope,task=kvm,pid=1483,uid=0

Feb 02 15:51:39 pm kernel: Out of memory: Killed process 1483 (kvm) total-vm:21674816kB, anon-rss:20445684kB, file-rss:1536kB, shmem-rss:0kB, UID:0 pgtables:40864kB oom_score_adj:0

Feb 02 15:51:39 pm kernel: oom_reaper: reaped process 1483 (kvm), now anon-rss:0kB, file-rss:68kB, shmem-rss:0kB

Feb 02 15:51:40 pm kernel: fwbr100i0: port 2(tap100i0) entered disabled state

Feb 02 15:51:40 pm kernel: fwbr100i0: port 2(tap100i0) entered disabled state

Feb 02 15:51:40 pm systemd[1]: 100.scope: Succeeded.

Feb 02 15:51:43 pm qmeventd[1019]: Starting cleanup for 100

Feb 02 15:51:43 pm kernel: fwbr100i0: port 1(fwln100i0) entered disabled state

Feb 02 15:51:43 pm kernel: vmbr0: port 2(fwpr100p0) entered disabled state

Feb 02 15:51:43 pm kernel: device fwln100i0 left promiscuous mode

Feb 02 15:51:43 pm kernel: fwbr100i0: port 1(fwln100i0) entered disabled state

Feb 02 15:51:43 pm kernel: device fwpr100p0 left promiscuous mode

Feb 02 15:51:43 pm kernel: vmbr0: port 2(fwpr100p0) entered disabled state

Feb 02 15:51:44 pm qmeventd[1019]: Finished cleanup for 100