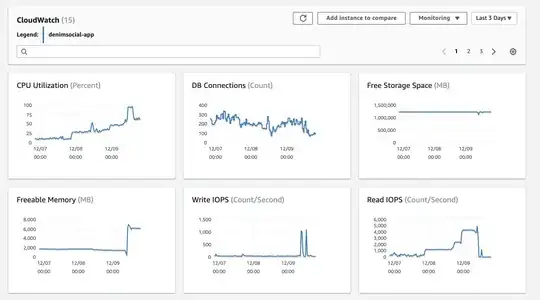

We have been hitting the upper limit of our provisioned IOPS for the last several days. We provisioned more IOPS a few times: 1250 -> 2500 -> 4500. At each step we quickly saw that we were using all the available IOPS, with reads and writes together maxing out the limit.

Finally, this morning we had another large customer onboard, and our CPU, memory, and IOPS all maxed out. I made the decision to provision a larger database instance type to keep the system running. We went from db.m5.xlarge to db.m5.2xlarge, and also raised provisioned IOPS to 8000 with the upgrade.

Now our CPU is getting hammered by comparison, and our IOPS are practically 0. The app seems responsive, but our task queues are quite slow.

No code has changed, just the database. What am I missing? Is RDS not reporting correctly?

You'll notice the jumps in read IOPS over the last 3 days; those correlate with the higher IOPS provisions.