I did some testing today with my autoscaling setup on Azure Kubernetes Service (AKS). I noticed that when a scale-up was triggered, it took a while for the next node to spin up, so the last pod had to wait a long time to be scheduled. I would like new nodes to be added once the cluster reaches a certain utilization threshold, while pods can still be scheduled on the already-running nodes in the meantime. Is that possible?

1 Answer

To answer the question of how to trigger autoscaling such that a pod does not have to wait for a new node, this article describes an elegant strategy called "Pause Pods":

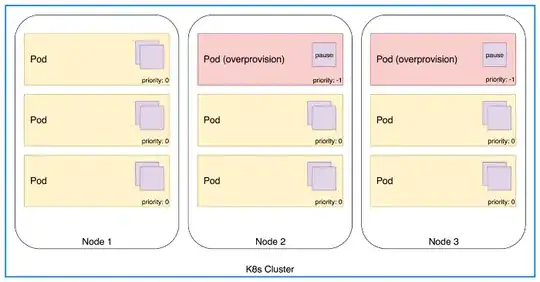

Pod Priority and Preemption are Kubernetes features that allow you to assign priorities to pods. In a nutshell this means that when the cluster is low on resources it can preempt (remove) lower-priority pods in order to make space for higher-priority pods waiting to be scheduled. With pod priority we can run some dummy pods solely for reserving extra space in the cluster which will be freed as soon as “real” pods need to run. The pods used for the reservation should ideally not be using any resources but just sit there and do nothing. You might have heard of pause containers which are already used in Kubernetes for a different purpose. One property of the pause container is that it is mostly just calling the pause syscall which means “sleep until a signal is received”.

To sum it up, we can run a bunch of pause pods in our cluster with the sole purpose of reserving space to trick the cluster-autoscaler into adding extra nodes prematurely. Since these pods have a lower priority than regular pods they will be evicted from the cluster as soon as resources become scarce. The pause pods will then go into pending state which in turn triggers the cluster-autoscaler to add capacity. Overall, this is a quite elegant way to always have a dynamic buffer in the cluster.
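As a rough illustration of the strategy quoted above, here is a minimal sketch that generates the two objects involved: a low-priority `PriorityClass` and a `Deployment` of pause pods that reserve capacity. The names (`overprovisioning`), the replica count, the resource requests, and the image tag `registry.k8s.io/pause:3.9` are assumptions chosen for the example, not values from the article. The priority of `-1` is also an assumption: it is below the default pod priority of 0, so regular pods can preempt the pause pods. The cluster-autoscaler ignores pending pods whose priority is below its expendable cutoff (as far as I know `-10` by default), so the value should stay above whatever cutoff your autoscaler uses.

```python
import json


def pause_pod_manifests(replicas=2, cpu="500m", memory="512Mi",
                        priority=-1, name="overprovisioning"):
    """Build a PriorityClass and a Deployment of pause pods as plain dicts.

    All default values are illustrative assumptions; size the requests so
    that the buffer matches the headroom you want to keep free.
    """
    priority_class = {
        "apiVersion": "scheduling.k8s.io/v1",
        "kind": "PriorityClass",
        "metadata": {"name": name},
        # Lower than the default pod priority (0), so real pods preempt these.
        "value": priority,
        "globalDefault": False,
        "description": "Priority class for pause pods that reserve spare capacity.",
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"run": name}},
            "template": {
                "metadata": {"labels": {"run": name}},
                "spec": {
                    "priorityClassName": name,
                    "containers": [{
                        "name": "reserve-resources",
                        # Pause image; the tag is an assumption.
                        "image": "registry.k8s.io/pause:3.9",
                        # The requests are what actually reserves space.
                        "resources": {
                            "requests": {"cpu": cpu, "memory": memory}
                        },
                    }],
                },
            },
        },
    }
    return [priority_class, deployment]


if __name__ == "__main__":
    # Wrap both objects in a v1 List so they can be applied in one step.
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": pause_pod_manifests()}
    print(json.dumps(manifest, indent=2))
```

Saved as, say, `pause_pods.py` (a hypothetical file name), the output can be piped to `kubectl apply -f -`. The total buffer is roughly `replicas` times the per-pod request, so two replicas at `500m` CPU each keep about one CPU core of headroom that real pods can claim immediately while the evicted pause pods wait for a new node.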
