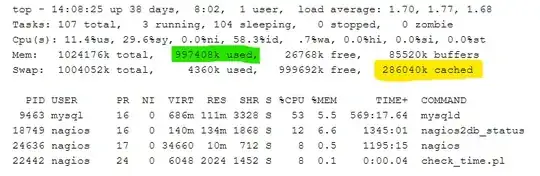

In the book I'm reading, *DevOps Troubleshooting*, it says that on Linux the last value on the Swap line is the memory used for file caches (yellow), so subtracting it from the used memory (green) gives you the RAM that is actually in use:

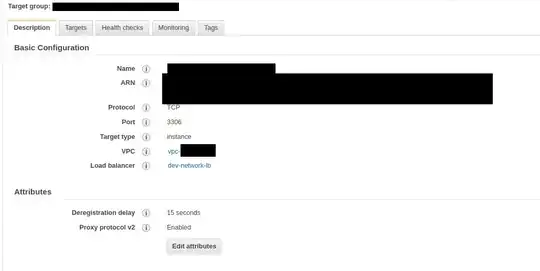
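To make the book's arithmetic concrete, here is a minimal Python sketch. It only restates the rule described above (Mem "used" minus the "cached" value at the end of the Swap line). The function name and the figures are hypothetical, in KiB, and are not taken from either screenshot.

```python
def actual_used_kib(mem_used_kib: int, swap_line_cached_kib: int) -> int:
    """The book's rule for the older top layout: RAM really used by processes
    is the Mem 'used' figure minus the 'cached' figure on the Swap line."""
    if swap_line_cached_kib > mem_used_kib:
        raise ValueError("cached should not exceed used in this layout")
    return mem_used_kib - swap_line_cached_kib


# Hypothetical values, not read from the screenshots.
print(actual_used_kib(3_900_000, 2_600_000))  # 1300000
```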

But when I run it on my machine, the output has a different format, with buffers and cache now combined on the same line:

Did the output of top change? Why is there a difference here?

I'm familiar with the concept of the Linux file cache. Is buff/cache the amount of memory being used for the file cache in the latter screenshot?
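One way to check this on a live system is to compare top's buff/cache against `/proc/meminfo`. The sketch below assumes a recent procps-ng, whose `free(1)` man page defines buff/cache as `Buffers` plus `Cached` plus `SReclaimable`. Older releases may not include `SReclaimable`, so treat that as an assumption to verify against your version's man page. The function names and the sample values are hypothetical. On a real machine you would pass `open("/proc/meminfo").read()` instead of the sample string.

```python
from typing import Dict

# Hypothetical /proc/meminfo excerpt; values are in kB.
SAMPLE = """MemTotal:        8000000 kB
MemFree:         1000000 kB
Buffers:          200000 kB
Cached:          2500000 kB
SReclaimable:     300000 kB
"""


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into a {field: value} mapping."""
    fields: Dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        fields[key.strip()] = int(rest.split()[0])  # drop the "kB" unit
    return fields


def buff_cache_kib(fields: Dict[str, int]) -> int:
    # Assumption: recent procps-ng definition of buff/cache.
    return fields["Buffers"] + fields["Cached"] + fields.get("SReclaimable", 0)


print(buff_cache_kib(parse_meminfo(SAMPLE)))  # 3000000
```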