As a disclaimer: I am not a storage guy, so ELI5 ;) I am looking at an ESXi host with direct-attached storage (SAS SSDs and HDDs in RAID1, on different datastores). System X, shown in the first graph, is on the HDD RAID; the other one (System Z) is on the SSDs.

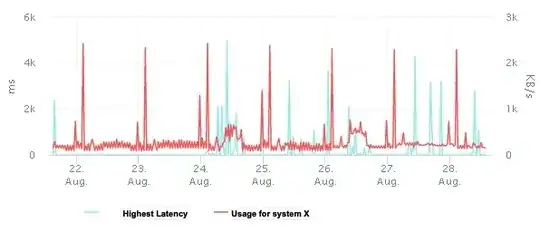

Latency graph from ESXi - System X

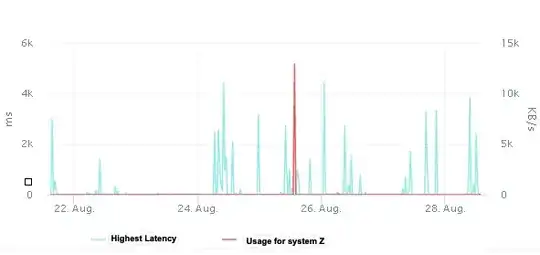

Latency graph from ESXi - System Z

Both systems use databases (alongside other stuff). System X (first graph) queries data from System Z (Postgres), imports part of it and displays it. As you can see, the latency is pretty high. I also see only low throughput on System X, and System X has frequent database locks.

Both systems have CPU and RAM galore; all I can see are disk performance issues.

Without any additional info: the latency seems crazy, am I right? My first advice was to separate the systems onto dedicated datastores (and thus dedicated underlying disks), as they both tend to have very high IOPS requirements.

Unfortunately I do not have many details yet, but I am looking for some good questions to ask. I plan to look into the filesystem and mount options and the disk provisioning (thin / thick), maybe run some tests with dd / hdparm / fio, and check whether write-back caching is enabled on the RAID controller. What else should I check?
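
For a quick first look inside the guest, before setting up proper fio jobs, I was thinking of something like the rough Python sketch below. It writes small blocks and calls fsync after each one, which is roughly the pattern of a database commit, and reports the latency spread. The function names, block size and write count are my own assumptions, and it is only a crude probe, not a replacement for fio.

```python
import os
import statistics
import tempfile
import time


def fsync_write_latencies(directory, count=200, block_size=4096):
    """Write `count` blocks, fsync after each one, return per-write latencies in ms."""
    payload = os.urandom(block_size)
    latencies_ms = []
    # Temporary probe file on the filesystem under test; removed afterwards.
    fd, path = tempfile.mkstemp(dir=directory, prefix="fsync_probe_")
    try:
        for _ in range(count):
            start = time.perf_counter()
            os.write(fd, payload)
            # Ask the OS to flush to the device, roughly what a DB commit does.
            # With write-back cache on the controller this may return early.
            os.fsync(fd)
            latencies_ms.append((time.perf_counter() - start) * 1000.0)
    finally:
        os.close(fd)
        os.remove(path)
    return latencies_ms


def summarize(latencies_ms):
    """Return count, average, median, approximate p99 and max of the latencies."""
    ordered = sorted(latencies_ms)
    p99_index = min(len(ordered) - 1, int(len(ordered) * 0.99))
    return {
        "count": len(ordered),
        "avg_ms": statistics.mean(ordered),
        "median_ms": statistics.median(ordered),
        "p99_ms": ordered[p99_index],
        "max_ms": ordered[-1],
    }
```

I would run it as `print(summarize(fsync_write_latencies("/path/on/datastore")))` (placeholder path) once on a filesystem backed by the HDD datastore and once on the SSD one, ideally during a quiet period and again under load, and compare the numbers with what ESXi reports.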

Thanks, MMF