We are currently hosted with a provider that lets us set up multiple virtual machines using KVM, where each virtual machine runs on its own physical box (i.e. one hypervisor, one VM with all the memory and CPU allocated to it). Recently we ran into some nasty problems we needed to diagnose (turned out to be stack overflows - lol). In the process we set up DataDog to monitor all our servers, and it helped us narrow down the cause and eventually fix it. We found it so useful that we left it all enabled. While learning the tools, we keep seeing slow response times during the day for our web sites. By enabling APM tracing we have been able to narrow it down to poor response times from our MySQL cluster. Sometimes MySQL connections take 900ms or longer to get created, and other times dead simple queries like setting the connection collation or the timezone take 600ms or more. These are queries that normally run in less than 800 microseconds.

To diagnose the problem we set up pings to multiple endpoints in our cluster, and we have two pings that regularly run slow (4-5s at times!) even though they do nothing but return a string (PHP/Apache version) or return some client IP information (.NET/IIS version). We set those up to see if we would see issues on Linux or IIS without anything else involved, and we do. Oddly, during the times we get these slowdowns the CPU on the web machines is very low, and the same is true on the MySQL cluster: those boxes generally sit around 5-6% CPU most of the time, including when the queries are running slow.

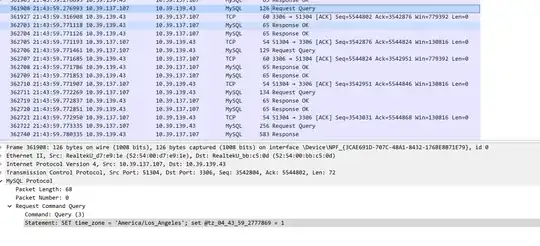

To try to work out if this was a networking issue, we set up captures using Wireshark on Windows and dumped the packets while we had some decoration in the queries so we could find them in the packet dumps easily (basically we set a MySQL variable in the query that is an encoded version of the current UTC timestamp in microseconds). Using that we were able to correctly match up long MySQL spans in DataDog APM with the packets in the TCP dumps. Looking at the Windows/IIS side, we could see that all the time was spent waiting for the result to come back over the wire from the MySQL server. So the time reported in DataDog for the MySQL query exactly matched up with the time in the packet dumps.
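
For anyone wanting to try the same trick, here is a minimal sketch of the tagging idea in Python. It is not our actual code: the variable name `@trace_tag`, the base64 encoding and the function names are all assumptions made up for illustration.

```python
import base64
import struct
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def make_trace_tag(now=None):
    """Encode a UTC timestamp (microsecond resolution) as a short ASCII token."""
    if now is None:
        now = datetime.now(timezone.utc)
    # Integer arithmetic avoids float rounding at microsecond precision.
    micros = (now - EPOCH) // timedelta(microseconds=1)
    raw = struct.pack(">Q", micros)  # 8 bytes, big-endian
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def decode_trace_tag(tag):
    """Recover the UTC timestamp from a token found in a packet dump."""
    padded = tag + "=" * (-len(tag) % 4)
    (micros,) = struct.unpack(">Q", base64.urlsafe_b64decode(padded))
    return EPOCH + timedelta(microseconds=micros)


def tagging_statement(tag):
    """Build the statement sent on the same connection just before the real query."""
    # @trace_tag is a hypothetical variable name. The URL-safe base64 alphabet
    # contains no quote characters, so plain interpolation is safe here.
    return f"SET @trace_tag = '{tag}'"
```

The token travels over the wire as plain ASCII (assuming the connection is not encrypted), so it can be searched for in the capture, for example with a `frame contains` display filter in Wireshark, and decoded back to the time the client issued the query.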

So as you can see from the two screenshots, they match up exactly. To determine if the networking issue happened on the MySQL side, we then ran the same capture again on the MySQL server's Linux machine and saw the exact same thing. MySQL got the request, and a huge number of milliseconds later it sent the reply. So the issue is clearly not networking, but something causing MySQL itself to slow down.

Now what is really odd is that it's not MySQL itself getting blocked up, because the particular box I ran those queries against is a read slave that was only serving read queries from one of our Windows virtual machines. So it did not have much load on it, and during the time of the queries the CPU load was probably 3% (the host has 16 physical cores across dual 8-core Xeon CPUs, and 32 vCores are allocated to the VM). So this is clearly not a load issue on the MySQL server. More importantly, from the TCP dumps it's clear that while the query we were interested in was taking a long time to execute, plenty of other queries from other connections came along and got processed with no delay.

Now to top it all off, we have also found in our logging that the MySQL slave will routinely get way behind, 30-40 seconds behind the master. We have seen cases where it got up to 110 seconds behind the master, which makes zero sense as the machine has low load on it, and it's on the same local private network as the master database and the web servers. Sometimes those delays in the slave occur around the same time the slowdowns happen, and sometimes they do not.

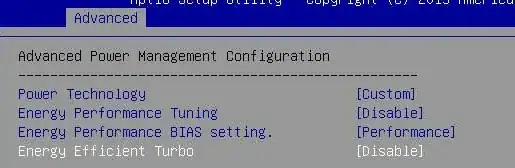
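
To put a number on "sometimes they line up and sometimes they do not", something like the sketch below could be run over the logs. It is only an illustration: the function name, the 30 second lag threshold and the 10 second window are assumptions, and the input is assumed to be epoch-second timestamps plus whatever lag value your logging records (for example `Seconds_Behind_Master`).

```python
from bisect import bisect_left


def slow_spans_near_lag(slow_span_times, lag_samples, lag_threshold_s=30, window_s=10):
    """Split slow spans by whether a replication-lag spike occurred nearby.

    slow_span_times: epoch seconds at which slow spans were observed
    lag_samples: (epoch_seconds, seconds_behind_master) pairs from logging
    """
    spike_times = sorted(t for t, lag in lag_samples if lag >= lag_threshold_s)
    near, isolated = [], []
    for t in slow_span_times:
        i = bisect_left(spike_times, t - window_s)
        # spike_times[i] is the first spike at or after (t - window_s)
        if i < len(spike_times) and spike_times[i] <= t + window_s:
            near.append(t)
        else:
            isolated.append(t)
    return near, isolated
```

If most slow spans end up in the `isolated` list, the replication lag is probably a second symptom of the same underlying stall rather than the cause of the slow queries.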

So now that we are fairly convinced this is not a networking issue, we are starting to think this is some kind of thread deadlocking issue in KVM itself. Especially because we see super odd slowdowns in all our virtual machines, some of which have nothing to do with MySQL (such as the static PHP hello file). Since we don't have any control over the KVM layer, we do not know what version it is running or how it is configured. But the more we look into this perplexing problem, the more the finger points at KVM as the root cause, and we have no idea how to resolve it.

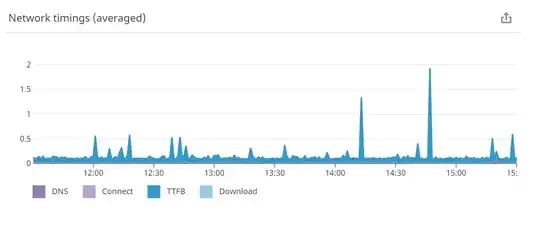

To illustrate the problem, here is a ping of a PHP page that just echoes 'hello' and does nothing else, with the ping times from three AWS servers. You can clearly see big spikes in there at times.

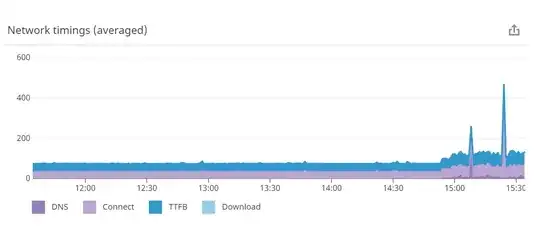

Now you might simply argue: but that is networking! Of course you might have glitches from AWS talking to that server during the day. True enough, but here is a ping during the EXACT same time period from the EXACT same AWS servers to a static page in Apache, measured in milliseconds this time (there is less to do than when PHP has to serve up even a simple page):

So as you can see it is not external networking either, because the static file ping was never slow. No issues at all. We actually set up that static file ping to run against a second instance of Apache on that box, to ensure it has zero load on it and gives us a baseline. At the end of the ping you can see stuff started going a bit nuts and the ping times are all over the place. That's because we had just enabled PHP in that instance and served up the same hello.php file from that second Apache instance to see what difference it would make. We did that primarily because the first instance is also serving real live traffic to our WordPress blogs and ad servers (low volume traffic, but it's not zero). So clearly once we add something to the mix that uses a lot more CPU, things start to go wonky.
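
If you want to compare the two pings with numbers rather than by eyeballing graphs, a rough sketch like this would do it. The function name and the 1000ms spike threshold are assumptions for illustration, and it expects at least two latency samples (in milliseconds) per probe.

```python
import statistics


def summarize_latency(samples_ms, spike_threshold_ms=1000.0):
    """Return median, 99th percentile, max, and spike count for one probe."""
    ordered = sorted(samples_ms)
    # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
    p99 = statistics.quantiles(ordered, n=100, method="inclusive")[98]
    return {
        "median_ms": statistics.median(ordered),
        "p99_ms": p99,
        "max_ms": ordered[-1],
        "spikes": sum(1 for s in ordered if s >= spike_threshold_ms),
    }
```

Running it once over the hello.php samples and once over the static file samples from the same time window should show similar medians but very different tails if the pattern in the graphs is real.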

So my question is, has anyone else ever experienced this kind of problem with KVM, and if so, how did you resolve it? We are on the verge of ditching this KVM solution and either migrating back to dedicated machines (which we ditched a decade ago), moving to a private VMware cloud, or moving to Google or Azure (both of which will cost us a lot more money). But I fail to see the point in moving to another cloud architecture like Google, Azure or a private VMware cloud if they might have similar issues.

Any suggestions?