TL;DR: Question in bold below

Here http://www.howto-expert.com/how-to-create-a-server-failover-solution/ is an (I assume) old blog post, explaining how to setup 2 server machines (one master and one slave), each located at different geographical locations.

The context is about self-hosting a website (+database) service, and make it such that, when master has failed the sanity check (e.g because machine is turned off, or internet connexion is out, or admin is doing updates, etc.), then slave takes over to serve the website(s) to visitors.

The software solution used in here is "DNS Made Easy" which seems to

- spin on a 3rd machine,

- associate the website IP to this 3rd machine (when one has to choose a domain name through a registrar),

- and seems to re-route the visitor to one of the 2 website hosts.

(I would eventually prefer as solution something like HAproxy+KeepAliveD, just because it's free.)

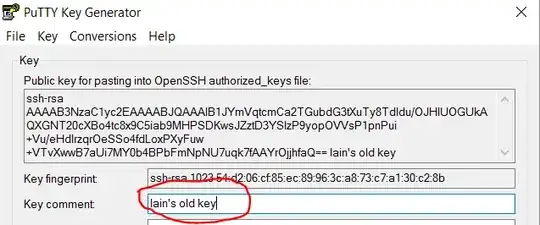

The important illustration from the URL above is this one :

But now, assuming I afforded hardware for a second slave machine precisely in case the master fails, then the investment would be useless if the 3rd machine (the failover monitor on the pic) crashes.

MAIN QUESTION : How to embed the failover monitoring into the 2 machines?

OR alternatively, how is it possible to do failover with only 2 physical machines?

(in order to get 2 points of failure instead of 1)

Questions it implies:

Why people always end up with a single point of failure (the isolated failover monitor) ?

Shall I use KVM to have "2 servers" within each machine (monitor1 + master in master, and monitor2 + slave in slave) or I can install all different services in a machine?

Is it possible to have 2 machines located away from each other that still share the same IP address ?