I have been dealing with this problem for about half a year (I had the luxury of time) and haven't managed to crack it, so I finally gave up and came here to ask others for help instead of relying only on Google (our VMware support ran out about 3 years ago and our executives chose not to renew it).

The problem

My problem is not the performance of the virtualisation or the VMs; that all works fine. I really got stabbed in the back when I needed to set up new backup software for the VMs. The hosts, storage arrays and backup servers are all equipped with 10GigE NICs and are connected to the same 10Gig switch. When I copy a VMDK from a host and its iSCSI-attached storage to the backup server, the speed is a steady 150Mbit/s (about 19MB/s). I have to back up about 2-5 TB each night, which is not possible at that speed. The goal is to increase the copy speed to at least 100MB/s (5TB in about 14 hours).
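To make the backup-window numbers concrete, here is a small back-of-the-envelope sketch. It assumes decimal units (1 TB = 10^6 MB), a perfectly steady rate and no protocol overhead; the function names are purely illustrative.

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert a rate in Mbit/s to MB/s (8 bits per byte)."""
    return mbit_per_s / 8


def hours_to_copy(size_tb: float, rate_mbyte_per_s: float) -> float:
    """Hours needed to move size_tb terabytes at a steady rate (decimal units)."""
    size_mb = size_tb * 1_000_000  # 1 TB = 10^6 MB
    return size_mb / rate_mbyte_per_s / 3600


def required_rate(size_tb: float, window_hours: float) -> float:
    """Minimum steady MB/s needed to move size_tb terabytes within window_hours."""
    return size_tb * 1_000_000 / (window_hours * 3600)


current = mbit_to_mbyte(150)  # observed per-copy rate
print(f"current rate: {current} MB/s")
print(f"2 TB at current rate: {hours_to_copy(2, current):.1f} h")
print(f"5 TB at current rate: {hours_to_copy(5, current):.1f} h")
print(f"rate for 5 TB in 14 h: {required_rate(5, 14):.1f} MB/s")
# current rate: 18.75 MB/s
# 2 TB at current rate: 29.6 h
# 5 TB at current rate: 74.1 h
# rate for 5 TB in 14 h: 99.2 MB/s
```

Even the smallest nightly volume takes well over a day at 150Mbit/s, so the 100MB/s target is the realistic minimum.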

Topology

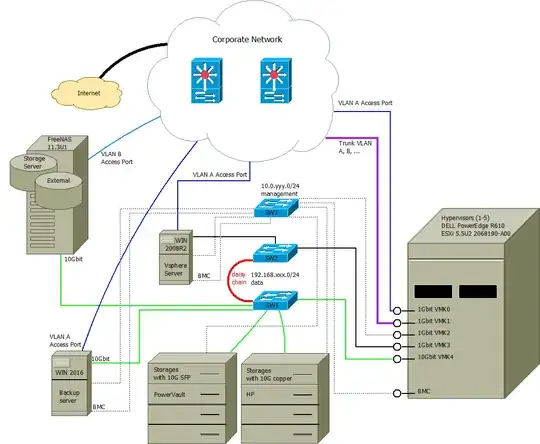

- Network X 192.168.xxx.0/24

- Network Y 10.0.yyy.0/24

- Corporate network (we do not manage it, we only use it), which includes various VLANs for physical devices and VMs.

- Network VLAN A

- Network VLAN B

Cluster topology

The 10Gig Dell switch (SW1) is really the heart of the cluster, since everything is connected to it with Cat6 cable. SW2 is daisy-chained to it and serves as the connection point for the redundant links from the ESXi hosts to network X. No VLANs other than VLAN 1 (default) are configured on any of these switches. Hosts and servers are all connected to VLAN A (or B) so that they are reachable from our offices and have access to the internet and the rest of the corporate network. The cluster's datastores are the Dell (SFP) and HP (copper) storage arrays, all connected by iSCSI to all five hosts. All ESXi hosts and servers also have a copper Cat5 link to SW3 on network Y, where all the BMCs and other management ports are connected. One of the backup servers has routing enabled to give network X internet access through VLAN A. vMotion is enabled on networks X and VLAN A. All 10Gig NICs of devices on network X have jumbo frames enabled and report 10Gb full-duplex.

The tests

I tested quite a few backup software packages, and since the test rig had only a 100BASE-T NIC (slower than the 150Mbit/s ceiling), I didn't notice any network performance problem back then. When we bought the software and I discovered that the speed wouldn't go beyond 150Mbit/s, I realised I needed to do some tweaking. What I tried follows. Each test's resulting speed was 150Mbit/s unless otherwise specified.

- Test 1: The intended setup. Backup servers connect over network X to a host and download all backups (in the form of snapshots) to local storage and/or NAS storage.

- Test 2: I created a direct link between one of the host's 10Gig ports and the backup server's 10Gig port and tried SCP, WinSCP, SSH and the backup software to download a VM snapshot from the Dell storage.

- Test 3: I created an NFS datastore on one of the backup servers and migrated a test VM to it (~500MB/s, 20GB, stable), then repeated the methods from Test 2.

- Test 4: I disconnected host ABC (network VLAN A) from the cluster, reconnected it as XYZ (network X), removed its connection to VLAN A and its 1Gig connection to X, and repeated Test 3. Migration: ~500MB/s, 20GB, stable.

- Test 5: I fiddled with virtual switch settings and bandwidth policy while repeating Tests 1, 3 and 4.

- Test 6: I ran 20 backup jobs simultaneously and each of them ran at 150Mbit/s. I then kept starting more jobs, and the speed of all of them began dropping at around 30-32 simultaneous jobs, so at least 550MB/s of aggregate throughput is available.
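The aggregate figure from the parallel-jobs test is simply the per-job rate multiplied by the number of jobs running before the per-job speed started to drop. A minimal sketch, assuming every job holds a steady 150Mbit/s up to that point; the helper names are hypothetical.

```python
import math


def aggregate_mbyte_per_s(jobs: int, per_job_mbit: float = 150) -> float:
    """Total MB/s for `jobs` parallel streams, each at per_job_mbit Mbit/s."""
    return jobs * per_job_mbit / 8


def jobs_needed(target_mbyte_per_s: float, per_job_mbit: float = 150) -> int:
    """Smallest number of parallel streams whose combined rate meets the target."""
    return math.ceil(target_mbyte_per_s / (per_job_mbit / 8))


for jobs in (20, 30, 32):
    print(f"{jobs} jobs: {aggregate_mbyte_per_s(jobs):.1f} MB/s")
print(f"streams for 100 MB/s: {jobs_needed(100)}")
# 20 jobs: 375.0 MB/s
# 30 jobs: 562.5 MB/s
# 32 jobs: 600.0 MB/s
# streams for 100 MB/s: 6
```

This arithmetic only holds while the per-stream cap is the limiting factor; the slowdown at 30-32 jobs suggests that the shared path saturates somewhere in the 560-600MB/s range.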

The infrastructure

- Five identical Dell PowerEdge R610s (dual Xeon X5660, 200+ GB RAM, 4x 1Gb LAN (Broadcom NetXtreme II BCM5709), 1x dual-port 10Gb LAN (Intel 82599), no internal storage)

- Three Dell PowerVault enclosures (10 TB each, 600GB 10k SAS HDDs)

- One HP MSA 2040 (10 TB, three 300GB SAS SSDs as cache, the rest 10k SAS HDDs)

- SW1 Dell PowerConnect 8024

- SW2 Cisco 2960G

- SW3 Cisco 2950

- Backup server Dell PowerEdge R530

- vSphere server Sun Fire (something old)

I really can't tell where the problem is, but my guess is ESXi. VMs on different hosts can reach 500MB/s between each other without problems, but the hosts themselves cannot.

I will really appreciate any response and will gladly clarify any unclear detail.

Update 1 (final)

We purchased a Veeam license, configured incremental backups and scheduled the jobs so they don't overlap much. This setup has virtually eliminated the problem, but the per-connection speed remains almost the same. The bottleneck has been identified as the source, and we can confidently trace the data flow from start to end. We dug through every network setting on every device or VM involved in the flow and found nothing. The only thing we can safely state is that the problem lies within the ESXi 5.5 host and its iSCSI-connected datastores.

This problem will remain a mystery, since we are moving off this environment and will repurpose it significantly. This question will therefore probably remain unanswered.