I am creating a Linux (CentOS 7) NFS datastore on SSDs for VMware ESXi.

I am using an HP DL360e with a P420i (1 GB cache) and 6 x SSDSC2BA200G3P 200 GB 6G SSD drives (HP part number 730053-B21).

Using fio, I see the array cap out at about 70k IOPS regardless of whether it is RAID 10 or RAID 0. Enabling HP SSD SmartCache made little difference. I also tuned the system with tuned-adm (I selected the throughput-performance profile).

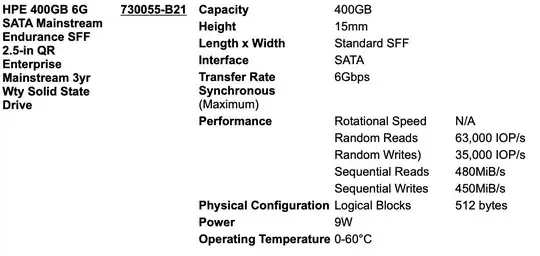

I think I am missing something, because I have another server with similar specs. Its only differences are the SSDs (4 x 691866-B21, HP 400GB 6G SATA Mainstream Endurance), a slightly faster CPU, and a P420 (1 GB flash-backed cache) instead of the P420i.

That server reaches up to 100k IOPS.

Both servers were benchmarked while idle with the following command:

```shell
fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test \
    --filename=random_read_write.fio --bs=4k --iodepth=64 --size=4G \
    --readwrite=randrw --rwmixread=75
```
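The command above runs a single fio job, so one process submits all of the I/O. One thing that may be worth ruling out is whether that single submitter (one CPU core) is the ceiling, which would also fit the server with the faster CPU scoring higher. Below is a sketch of the same workload as a fio job file split into several jobs. `numjobs=4` is an assumed starting value, not something from the original test, and note that it raises the total outstanding I/O to 4 x 64. `group_reporting` sums the jobs into one result.

```ini
; Same parameters as the command-line run above, split across several jobs.
[global]
ioengine=libaio
direct=1
randrepeat=1
gtod_reduce=1
bs=4k
iodepth=64
size=4G
readwrite=randrw
rwmixread=75
; report one aggregate result for all jobs instead of one per job
group_reporting

[test]
filename=random_read_write.fio
; assumption: 4 jobs; vary this (e.g. 1, 2, 4, 8) and compare total IOPS
numjobs=4
```

Save it under any name (for example the hypothetical `randrw.fio`) and run `fio randrw.fio`. If total IOPS climbs well past 70k as jobs are added, the limit was more likely the single job than the array.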

Any thoughts? Could the drives be the bottleneck? I don't think so, since the 6-drive RAID 0 gives exactly the same result.
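A rough per-drive calculation supports that reasoning. This is a sketch under stated assumptions: I/O is spread evenly across the drives, each front-end write costs one back-end write on RAID 0 and two (mirrored) on RAID 10, and the second server is assumed to be RAID 0 because its RAID level is not stated. The function name `per_drive_iops` is hypothetical.

```shell
#!/bin/sh
# Back-of-the-envelope back-end IOPS per drive for a striped array.
# Assumptions: even striping; write penalty 1 for RAID 0, 2 for RAID 10.

# Usage: per_drive_iops TOTAL_IOPS DRIVES READ_PCT WRITE_PENALTY
per_drive_iops() {
    total=$1 drives=$2 read_pct=$3 penalty=$4
    write_pct=$((100 - read_pct))
    # back-end ops = reads + penalty * writes (percent math keeps it integer)
    backend=$(( total * (read_pct + penalty * write_pct) / 100 ))
    echo $(( backend / drives ))
}

echo "P420i, 6 SSDs, RAID 0:  $(per_drive_iops 70000 6 75 1) IOPS per drive"
echo "P420i, 6 SSDs, RAID 10: $(per_drive_iops 70000 6 75 2) IOPS per drive"
# RAID level of the second server is not stated; RAID 0 assumed here
echo "P420,  4 SSDs, RAID 0:  $(per_drive_iops 100000 4 75 1) IOPS per drive"
```

This gives roughly 11.7k (RAID 0) or 14.6k (RAID 10) back-end IOPS per drive on the P420i box versus 25k per drive on the other server, so each SSD in the slower array is doing well under the per-drive load seen on the faster one.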

Thanks in advance.