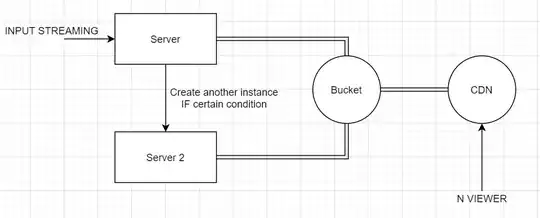

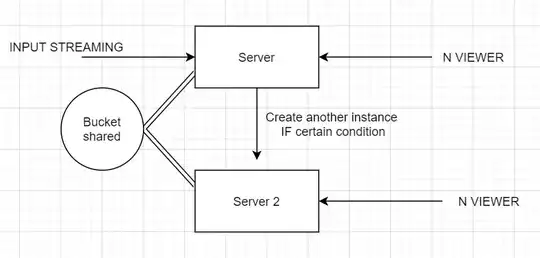

I'm building a scalable infrastructure for my nginx RTMP server. On the server I have nginx + arut-rtmp-module + ffmpeg. This is the scheme of the first architecture, inside a managed instance group.

The problem with this scheme is simple: the input stream arrives at only one server, so viewers routed to Server 2 cannot watch it.

EDIT2: In this case my browser works fine: it gets all the .ts files directly from the server and playback works! Obviously, as already said, this solution is not scalable.

So... I think we need something shared by all new instances.

I introduced a Google Cloud Storage bucket mounted with gcsfuse on each instance (still inside a managed instance group).

Problem in this scenario: while the server that receives the input stream is creating the .ts segments, each time a segment is created the bucket first gets a 0-byte .ts object. Only when the server finishes writing the .ts does the bucket get the complete file. So it's not really "shared"...

EDIT2: In this case my browser loads only the first one or two .ts segments, then it gets stuck reloading the m3u8 in a loop.
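To make the 0-byte observation easier to reproduce, here is a minimal sketch that lists the `.ts` files that are still empty in a directory. It only reads file sizes; the function name `find_empty_segments` and the mount path in the usage note are my own placeholders, not part of my real setup.

```python
from pathlib import Path


def find_empty_segments(hls_dir):
    """Return the names of .ts files in hls_dir that are currently 0 bytes."""
    return sorted(
        p.name
        for p in Path(hls_dir).glob("*.ts")
        if p.is_file() and p.stat().st_size == 0
    )
```

Calling `find_empty_segments("/mnt/hls")` (hypothetical mount point) on a second instance while the stream is running should show whether that instance sees the segment currently being written as an empty file.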

So, I've tested these solutions but they are not working. Am I doing something wrong here? Is a bucket not the right thing to use?

Thanks

P.S. I've added Cloud CDN in front of the bucket, so my application can get the .ts segments directly from the CDN. The problem is that after the second or third .ts, my application only gets the m3u8 and can't get the other .ts files (my CDN does have the .ts files!).

EDIT1: It's as if, after the first load of the m3u8, it takes the .ts files that are already listed... but afterwards it can't get the next .ts!

EDIT3: This is what my browser loads.

The m3u8 loaded in a loop is:

    #EXTM3U
    #EXT-X-VERSION:3
    #EXT-X-MEDIA-SEQUENCE:0
    #EXT-X-TARGETDURATION:10
    #EXT-X-DISCONTINUITY
    #EXTINF:4.167,
    0.ts
    #EXTINF:4.167,
    1.ts
    #EXTINF:6.666,
    2.ts
    #EXTINF:4.167,
    3.ts

The real m3u8 on the bucket is:

    #EXTM3U
    #EXT-X-VERSION:3
    #EXT-X-MEDIA-SEQUENCE:0
    #EXT-X-TARGETDURATION:10
    #EXT-X-DISCONTINUITY
    #EXTINF:4.167,
    0.ts
    #EXTINF:4.167,
    1.ts
    #EXTINF:6.666,
    2.ts
    #EXTINF:4.167,
    3.ts
    #EXTINF:6.133,
    4.ts
    #EXTINF:4.734,
    5.ts
    #EXTINF:10.016,
    6.ts
    #EXTINF:6.450,
    7.ts
    #EXTINF:8.317,
    8.ts
    #EXTINF:7.183,
    9.ts
    #EXTINF:5.850,
    10.ts
    #EXTINF:2.050,
    11.ts
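To compare the two playlists without reading them by eye, a small sketch like the following could be used. It treats every non-blank line that does not start with `#` as a segment URI, which holds for simple media playlists like the two above; the function names are just illustrative.

```python
def segment_uris(playlist_text):
    """Return the media segment URIs listed in an HLS media playlist.

    Tags and comments start with '#'; every other non-blank line is a URI.
    """
    return [
        line.strip()
        for line in playlist_text.splitlines()
        if line.strip() and not line.strip().startswith("#")
    ]


def missing_segments(stale_text, fresh_text):
    """Segments present in the fresh playlist but absent from the stale one."""
    seen = set(segment_uris(stale_text))
    return [uri for uri in segment_uris(fresh_text) if uri not in seen]
```

Fed the playlist my browser keeps reloading as `stale_text` and the one on the bucket as `fresh_text`, `missing_segments` returns `4.ts` through `11.ts`, i.e. exactly the segments the player never requests.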

EDIT3: Hmm... maybe it's a CDN cache problem? In that case, where can I edit the cache configuration?

EDIT4: This is the current scheme that gives me the problem of the m3u8 file not being updated correctly in my player

UPDATE:

I tried both setups concurrently (just changing the source my player points to):

- Pointing to the m3u8 directly on the server (where I have the bucket mounted with gcsfuse)

- Pointing to the m3u8 on the CDN

The first case works: the m3u8 is updated every time. In the second case the player loads whatever version of the m3u8 it gets first (for example, I opened it after 20 .ts segments had already been created), then reloads that same version of the file in a loop.

The stream, as I said, was the same; only the source of the m3u8 differed: directly from the bucket mounted on the server, or from the CDN (which sits on the same bucket).

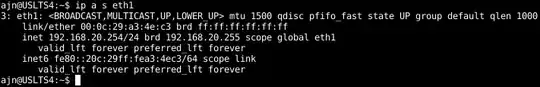

UPDATE2: I retried everything. If I download the m3u8 from my CDN, I get the stale file too. If I take the same file from the bucket, it's up to date! I tried pointing my player at storage.google, but from my website I get a CORS error. I tried changing the CORS settings from the console (with gsutil), but it didn't help. How can I prevent caching on the CDN? I've already set the headers to no-cache and no-store :/
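To check which caching headers each source actually returns for the m3u8, a sketch along these lines might help. It uses only the Python standard library; `fetch_headers` and `describe_caching` are names I made up, and any URL passed in would be a placeholder for the real CDN or bucket address. An `Age` header on a response generally means it was served from a cache.

```python
import urllib.request


def fetch_headers(url):
    """Send a HEAD request and return the response headers with lowercase keys."""
    req = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return {k.lower(): v for k, v in resp.headers.items()}


def describe_caching(headers):
    """Summarize the caching-related headers of one response."""
    cache_control = headers.get("cache-control", "")
    # Keep only the directive names, e.g. "max-age=3600" -> "max-age".
    directives = {
        d.strip().split("=")[0].lower()
        for d in cache_control.split(",")
        if d.strip()
    }
    return {
        "cache_control": cache_control,
        "has_no_cache_directive": bool(directives & {"no-store", "no-cache", "private"}),
        "age": headers.get("age"),  # None when the header is absent
    }
```

Running `describe_caching(fetch_headers(url))` once against the CDN URL and once against the bucket URL of the same m3u8 would show whether the no-cache and no-store values I set really appear on the response the CDN sees.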

Here is my Cloud CDN cache hit ratio...