I've looked for a while but can't seem to find an encoding/payloading setup that would let me simply stream losslessly from my host to my client (both are on a setup where bandwidth is not an issue).

I'm currently using these two pipelines to get by, but I'm not thrilled with the JPEG artifacts they introduce:

```cpp
cv::VideoWriter writer_h264(
    "appsrc ! videoconvert ! "
    "x264enc pass=quant quantizer=0 tune=zerolatency "
    "speed-preset=superfast byte-stream=true cabac=false ! "
    "rtph264pay ! udpsink host=127.0.0.1 port=5000",
    0,                  // fourcc
    30,                 // fps
    cv::Size(200, 200),
    false);             // isColor

// View with:
// gst-launch-1.0 -v udpsrc port=5000 ! "application/x-rtp, media=(string)video, clock-rate=(int)90000, encoding-name=(string)H264, payload=(int)96" ! rtph264depay ! h264parse ! avdec_h264 ! videoconvert ! autovideosink

cv::VideoWriter writer_jpeg(
    "appsrc ! "
    "videoconvert ! video/x-raw,format=YUY2 ! jpegenc ! rtpjpegpay ! udpsink host=127.0.0.1 port=5001",
    0,                  // fourcc
    30,                 // fps
    cv::Size(200, 200),
    false);             // isColor

// View with:
// gst-launch-1.0.exe udpsrc port=5001 ! application/x-rtp,media=video,encoding-name=JPEG ! rtpjpegdepay ! jpegdec ! videoconvert ! autovideosink
```

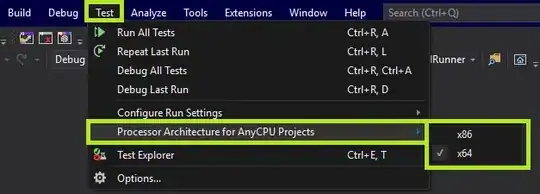

Our appsrc source is a simple CV_8UC1 grayscale bitmap, and I'd prefer it to reach the client in its original form (it's used for both display and analysis). The PNG encoder/decoder seems like an obvious choice, except that there is no PNG RTP payloader. The two pipelines above produce identical results, which tells me that it's the jpegenc/rtpjpegpay that are likely introducing the problem. You can see this in the attached image (the first image is the original; the latter two are from the clients).
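To confirm whether a pipeline is actually lossless, rather than judging by eye, one option is to compare a received frame with the original pixel by pixel. A minimal sketch, assuming both frames are available as continuous row-major 8-bit buffers (for example, copied out of a continuous CV_8UC1 `cv::Mat`); `FrameDiff` and `compareGray8` are hypothetical names, not OpenCV API:

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <stdexcept>
#include <vector>

// Hypothetical result type: how a received frame differs from the original.
struct FrameDiff {
    std::size_t differingPixels = 0;  // number of pixels whose value changed
    int maxAbsError = 0;              // largest |original - received| over all pixels
};

// Compares two 8-bit single-channel frames stored row-major, i.e. the layout of a
// continuous CV_8UC1 cv::Mat. Both fields are zero only for a bit-exact copy.
FrameDiff compareGray8(const std::vector<std::uint8_t>& original,
                       const std::vector<std::uint8_t>& received) {
    if (original.size() != received.size()) {
        throw std::invalid_argument("frames must have the same number of pixels");
    }
    FrameDiff diff;
    for (std::size_t i = 0; i < original.size(); ++i) {
        // Promote to int before subtracting so the difference can be negative.
        int err = std::abs(static_cast<int>(original[i]) - static_cast<int>(received[i]));
        if (err != 0) {
            ++diff.differingPixels;
            if (err > diff.maxAbsError) diff.maxAbsError = err;
        }
    }
    return diff;
}
```

Zero differing pixels means the transport was bit-exact; any nonzero `maxAbsError` points at a lossy stage. With OpenCV at hand, `cv::norm(original, received, cv::NORM_INF)` should report the same maximum error directly.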

UPDATE:

Based on @Florian's advice below, I tried the raw-video RTP elements (rtpvrawpay/rtpvrawdepay) with these pipelines:

```shell
# Sender
GST_DEBUG=3 /d/gstreamer-x/1.0/msvc_x86_64/bin/gst-launch-1.0 -v multifilesrc location="frame%02d.png" loop=true index=10 caps="image/png,framerate=\(fraction\)30/1" ! pngdec ! videoconvert ! rtpvrawpay ! udpsink host="127.0.0.1" port="5000"

# Receiver
./gst-launch-1.0 udpsrc port=5000 caps="application/x-rtp,media=video,clock-rate=90000,encoding-name=RAW,sampling=BGR,depth=(string)8,width=(string)200,height=(string)200,colorimetry=SMPTE240M" ! rtpvrawdepay ! videoconvert ! queue ! autovideosink
```

(I derived the client caps from the sender's debug output above.) The result seems better, although there is visible antialiasing (smoothing) in the output. Perhaps this is just something autovideosink does?
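One way to keep the client caps consistent with the sender, and to move the raw-video sender back into the OpenCV code, is to generate both strings from the frame size. A sketch under these assumptions: the helper names are hypothetical; the `video/x-raw,format=BGR` conversion is an assumption based on RFC 4175 raw video (which rtpvrawpay implements) having no grayscale sampling mode; and every caps field except width/height is copied from the caps derived above:

```cpp
#include <cstdint>
#include <string>

// Hypothetical helper: an appsrc-fed counterpart of the rtpvrawpay sender above,
// meant as the pipeline string for cv::VideoWriter. The CV_8UC1 frames are
// converted to BGR (assumed necessary, see lead-in), matching sampling=BGR below.
std::string rawRtpSenderPipeline(const std::string& host, int port) {
    return "appsrc ! videoconvert ! video/x-raw,format=BGR ! rtpvrawpay ! "
           "udpsink host=" + host + " port=" + std::to_string(port);
}

// Hypothetical helper: builds the udpsrc caps from the frame size so the client
// cannot drift out of sync with the sender. Fields other than width/height
// (including colorimetry=SMPTE240M) are copied from the caps in the question and
// may need adjusting if the sender's debug output differs on another setup.
std::string rawRtpReceiverCaps(int width, int height) {
    return "application/x-rtp,media=video,clock-rate=90000,encoding-name=RAW,"
           "sampling=BGR,depth=(string)8,"
           "width=(string)" + std::to_string(width) +
           ",height=(string)" + std::to_string(height) +
           ",colorimetry=SMPTE240M";
}

// Uncompressed payload rate in bytes per second (RTP/UDP/IP headers not included).
std::uint64_t rawPayloadBytesPerSecond(int width, int height, int bytesPerPixel, int fps) {
    return static_cast<std::uint64_t>(width) * height * bytesPerPixel * fps;
}
```

For the 200x200 frames at 30 fps used here, `rawPayloadBytesPerSecond(200, 200, 3, 30)` is 3,600,000 bytes/s (about 28.8 Mbit/s) with BGR sampling, three times what the single-channel data alone would need. The sender string could then be handed to OpenCV as `cv::VideoWriter writer(rawRtpSenderPipeline("127.0.0.1", 5000), cv::CAP_GSTREAMER, 0, 30, cv::Size(200, 200), false);`.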