I'm creating a data factory pipeline to copy data from blob storage to table storage. The table may or may not exist in table storage and needs to be created if it doesn't exist. The Authentication method for my table storage linked service must be a SAS token (the value of which I'm grabbing from Key Vault).

Everything about my linked service configuration and pipeline works fine, except that the pipeline fails if the table sink doesn't exist. I've tried a similar configuration using Account Key authentication that works, but I'm looking for a way to do this with SAS token authentication.

Current Configuration

This is my Linked Service configuration for the table storage account:

{

"name": "Table Storage Account",

"type": "Microsoft.DataFactory/factories/linkedservices",

"properties": {

"parameters": {

"StorageAccountName": {

"type": "string"

},

"TableName": {

"type": "string"

}

},

"annotations": [],

"type": "AzureTableStorage",

"typeProperties": {

"sasUri": "https://@{linkedService().StorageAccountName}.table.core.windows.net/@{linkedService().TableName}",

"sasToken": {

"type": "AzureKeyVaultSecret",

"store": {

"referenceName": "Key Vault",

"type": "LinkedServiceReference"

},

"secretName": {

"value": "@{linkedService().StorageAccountName}-sas",

"type": "Expression"

}

}

}

}

}

These are the SAS Token settings:

sv=2021-12-02&ss=t&srt=sco&sp=rwlacu&se=2023-05-02T03:00:00Z&st=2023-04-19T16:09:39Z&spr=https&sig=[REDACTED]

This is the Data Set configuration used by the Copy Data activity:

{

"name": "StorageTable",

"properties": {

"description": "Dataset for the azure table account.",

"linkedServiceName": {

"referenceName": "Table Storage Account",

"type": "LinkedServiceReference",

"parameters": {

"StorageAccountName": {

"value": "@dataset().StorageAccountName",

"type": "Expression"

},

"TableName": {

"value": "@dataset().TableName",

"type": "Expression"

}

}

},

"parameters": {

"StorageAccountName": {

"type": "string"

},

"TableName": {

"type": "string"

}

},

"annotations": [],

"type": "AzureTable",

"schema": [],

"typeProperties": {

"tableName": {

"value": "@dataset().TableName",

"type": "Expression"

}

}

},

"type": "Microsoft.DataFactory/factories/datasets"

}

This is the sink configuration for the Copy Data activity (though nothing seems relevant here):

"sink": {

"type": "AzureTableSink",

"azureTableInsertType": "replace",

"azureTablePartitionKeyName": {

"value": "PartitionKey",

"type": "Expression"

},

"azureTableRowKeyName": {

"value": "RowKey",

"type": "Expression"

},

"writeBatchSize": 10000

}

With this configuration, all connections can be validated successfully in the portal, but ADF won't create a table if it doesn't exist.

Example Error Message

ErrorCode=FailedStorageOperation,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=A storage operation failed with the following error '0:The table specified does not exist. RequestId:0c4fc844-d002-0001-0d87-738fd1000000 Time:2023-04-20T12:58:38.1032528Z'.,Source=,''Type=Microsoft.WindowsAzure.Storage.StorageException,Message=0:The table specified does not exist. RequestId:0c4fc844-d002-0001-0d87-738fd1000000 Time:2023-04-20T12:58:38.1032528Z,Source=Microsoft.WindowsAzure.Storage,StorageExtendedMessage=0:The table specified does not exist. RequestId:0c4fc844-d002-0001-0d87-738fd1000000 Time:2023-04-20T12:58:38.1032528Z,,'

Other Attempts

Update SAS URI

I changed the "sasUri" to https://@{linkedService().StorageAccountName}.table.core.windows.net (removing the table name), hoping that with the base account URI, data factory would figure out which REST URL paths to use depending on the operation.

This change broke the connection validation in the portal (which I ignored for testing purposes), but the pipelines still worked fine (probably because the Table Name was still provided in the Data Set). Unfortunately, it still did not create tables that do not exist.

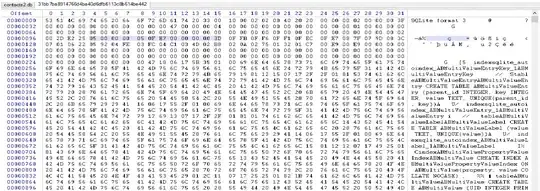

Generate and copy the

Generate and copy the

Then add these parameters to respective linked service properties and table.

Then add these parameters to respective linked service properties and table.

Then passed values to dataset properties storage account name and table name as

Then passed values to dataset properties storage account name and table name as