I tried to reproduce the same in my environment. I created a virtual network gateway, a virtual network, and a local network gateway, like below:

In the virtual network, I added a gateway subnet like below:

I created the local network gateway:

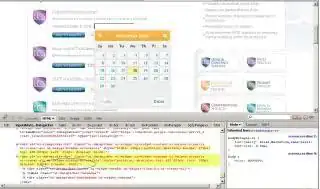

On-premises, configure the Routing and Remote Access role: in Tools, select Custom configuration -> VPN access, LAN routing -> Finish.

Under Network Interfaces, select New Demand-dial Interface. For the VPN type, select IKEv2, and on the Destination Address screen provide the public IP of the virtual network gateway.

Now, try to create a connection like below:

Now, I have created an AKS cluster with a pod like below:

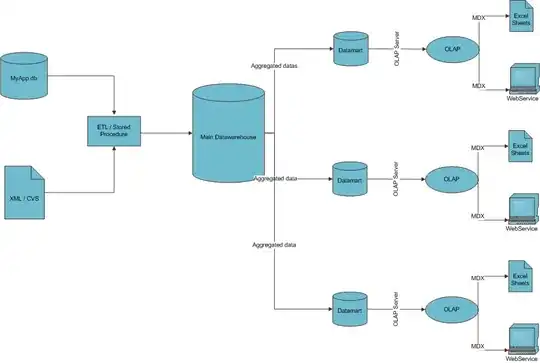

To communicate with the pod, make sure to use Azure Container Networking Interface (CNI). With Azure CNI, every pod gets an IP address from the subnet and can be accessed directly, so it can communicate directly with other pods and services.

You need to plan the IP addressing for the AKS nodes based on the maximum number of pods each node can support. Advanced network features and scenarios such as virtual nodes or network policies (either Azure or Calico) are supported with Azure CNI.
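As a rough aid for that planning, here is a minimal sketch of a subnet sizing check. It assumes the commonly documented Azure CNI rule of thumb, `(N + 1) + ((N + 1) * max pods per node)`, where the extra node covers upgrades. It also assumes the default of 30 pods per node and that Azure reserves 5 addresses in each subnet. The function names and the example CIDR are hypothetical, so adjust them to your environment.

```python
import ipaddress

# Assumption: Azure reserves 5 addresses in every subnet.
AZURE_RESERVED_IPS = 5


def required_ips(node_count, max_pods_per_node=30):
    """IPs needed by an Azure CNI cluster, keeping one spare node for upgrades."""
    nodes = node_count + 1  # one additional node is created during an upgrade
    return nodes + nodes * max_pods_per_node


def subnet_fits(cidr, node_count, max_pods_per_node=30):
    """Return True when the subnet has enough usable addresses for the cluster."""
    subnet = ipaddress.ip_network(cidr)
    usable = subnet.num_addresses - AZURE_RESERVED_IPS
    return usable >= required_ips(node_count, max_pods_per_node)


if __name__ == "__main__":
    print(required_ips(50))                  # 51 + 51 * 30
    print(subnet_fits("10.240.0.0/21", 50))  # hypothetical AKS subnet
```

With these assumptions, a 50-node cluster needs 1,581 addresses, so a /21 subnet is large enough and a /22 is not.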

When using Azure CNI, every pod is assigned a VNet-routable private IP from the subnet, so the gateway should be able to reach the pods directly.
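Before testing from on-premises, the addressing can be sanity-checked with a small sketch like the one below. It only verifies two things: the pod IP really belongs to the AKS subnet, and that subnet does not overlap the on-premises prefixes configured on the local network gateway, since overlapping ranges cannot be routed across a site-to-site VPN. It does not prove reachability, because NSGs, firewalls, or network policies can still block traffic. The function name and all addresses are hypothetical.

```python
import ipaddress


def pod_routable_over_vpn(pod_ip, aks_subnet, onprem_prefixes):
    """Check that the pod IP is in the AKS subnet and no on-prem prefix overlaps it."""
    pod = ipaddress.ip_address(pod_ip)
    subnet = ipaddress.ip_network(aks_subnet)
    onprem = [ipaddress.ip_network(p) for p in onprem_prefixes]

    # A pod IP outside the VNet subnet (for example an overlay address)
    # is not directly routable from the gateway.
    if pod not in subnet:
        return False

    # Overlapping address spaces on both sides of the tunnel break routing.
    return not any(subnet.overlaps(net) for net in onprem)


if __name__ == "__main__":
    # Hypothetical values: replace with your pod IP, AKS subnet, and on-prem ranges.
    print(pod_routable_over_vpn("10.240.0.35", "10.240.0.0/16", ["192.168.0.0/24"]))
```

The pod IP to check can be read from `kubectl get pods -o wide`.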

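- You can use AKS advanced features such as virtual nodes or network policies (Azure Network Policy or Calico). A network policy controls which traffic is allowed between pods within a cluster. For example, the following policy allows ingress to pods labeled `app: backend` only from pods labeled `app: frontend`: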

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: backend-policy
spec:
  podSelector:
    matchLabels:
      app: backend
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend
```
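To make the effect of this policy concrete, here is a simplified sketch of how its ingress rule is evaluated. It is not the real Kubernetes implementation: it handles a single policy, only `matchLabels` pod selectors, and a single namespace. The `POLICY` dictionary mirrors the `spec` of the manifest above, and the helper names are hypothetical.

```python
# Mirrors the spec of backend-policy shown above.
POLICY = {
    "podSelector": {"matchLabels": {"app": "backend"}},
    "ingress": [
        {"from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}]},
    ],
}


def matches(selector, labels):
    """A matchLabels selector matches when every wanted key/value pair is present."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


def ingress_allowed(policy, src_labels, dst_labels):
    """Decide whether a source pod may reach a destination pod under one policy."""
    # Pods not selected by the policy are unaffected by it.
    if not matches(policy["podSelector"], dst_labels):
        return True
    for rule in policy.get("ingress", []):
        peers = rule.get("from")
        if not peers:
            return True  # a rule without "from" allows every source
        for peer in peers:
            if "podSelector" in peer and matches(peer["podSelector"], src_labels):
                return True
    return False


if __name__ == "__main__":
    print(ingress_allowed(POLICY, {"app": "frontend"}, {"app": "backend"}))
    print(ingress_allowed(POLICY, {"app": "other"}, {"app": "backend"}))
```

Note that this policy only admits pods labeled `app: frontend`. If on-premises clients coming over the VPN must reach the backend pods directly, the policy would likely need an additional `ipBlock` peer for the on-premises address range.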

For more detail, refer to these links:

Azure configure-kubenet - GitHub

Network connectivity and security in Azure Kubernetes Service | Microsoft