I'm using Azure Synapse Link for Dataverse to synchronize 13 tables from a Dynamics instance to Azure Synapse, but I'm seeing far more transactions than expected in the storage account metrics and it's leading to excessive charges. It seems related to the snapshot/partition process, but I'm not 100% sure. Has anyone run across this before and know how to fix it?

Background / Other Info

- I first set up this link on Feb 22. When I did that, the tables initially synchronized fairly quickly, but when I went to query them, I'd get the error:

  > A transport-level error has occurred when receiving results from the server. (provider: TCP Provider, error: 0 - The specified network name is no longer available.)

- I tried deleting the link and recreating it. That did not help.

- I tried synchronizing only the data, without connecting to an Azure Synapse workspace. That helped, but I need the workspace database.

- At different times during testing, I would get the error

Database 'dataverse_xxx_xxx' on server 'xxx-ondemand' is not currently available. Please retry the connection later. If the problem persists, contact customer support, and provide them the session tracing ID of '{XXXXXXXX-XXX-XXXX-XXXX-XXXXXXXXXXX}'.When this happened, the built-in serverless pool would showTemporarily Unavailablefor some time, but would eventually come back. - I was not able to query any of the metadata tables until all of the snapshot/partition tables were created and this took multiple hours, which is a behavior I did not see in testing. Further, the lake database in Synapse would show all of the

_partitiontables, but the same database in SSMS would only show a subset of them. - If I hook the storage account up to a Log Analytics workspace, I can't find the

AuthenticationErrortransactions, but that could be my lack of knowledge in that space. - Transactions by API Name shows a lot of

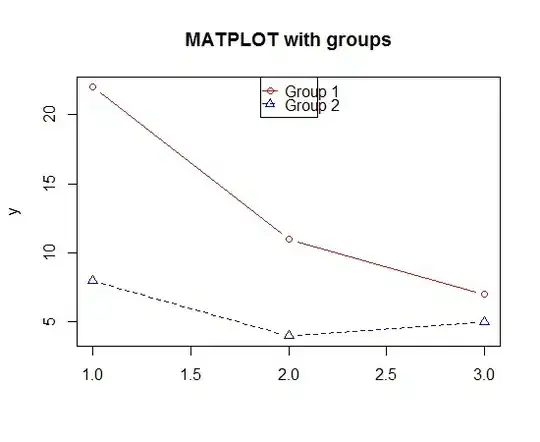

ListFilesystemDir,GetFilesystemProperties, andUnknown. A similarly configured workspace only shows transaction peaks every hour forGetBlobPropertiesandListBlobs. - The counts and shape of the Transaction Errors and Transactions by API Name graphs track each other.

- The Synapse workspace was deployed using an ARM template and should match our dev and test environments.

- I initially let the process run for several days, thinking that things would settle down, but after 4 days they still had not.

Update - 2023-02-28

I hooked up the storage account to a Log Analytics workspace today and confirmed that the issue seems to be related to the snapshot process. Every Snapshot directory is being queried once every 45 seconds. My query returns 747 URIs; at 80 requests per URI per hour (one every 45 seconds), that means 59,760 transactions every hour. The same behavior exists for `ListFilesystemDir`, `GetFilesystemProperties`, and `Unknown` (I couldn't find `Unknown` in the logs), so multiplying by 3 gives 179,280 transactions every hour. I'm not seeing any transactions with an `AuthenticationError`, which seems strange. Everything has a status text of `Success`.
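For anyone wanting to sanity-check the numbers, the estimate above is simple arithmetic. This is a minimal sketch: `hourly_transactions` is a hypothetical helper, and it assumes every snapshot URI is polled at the same fixed interval and that each of the three operation types is issued once per poll, as described in the update.

```python
def hourly_transactions(uri_count: int, poll_interval_s: int, operations_per_poll: int = 1) -> int:
    """Estimate storage transactions per hour for a fixed polling pattern.

    Hypothetical helper: assumes each URI is polled once every `poll_interval_s`
    seconds and that each poll issues `operations_per_poll` storage operations.
    Returns the floor of the exact hourly total.
    """
    return uri_count * operations_per_poll * 3600 // poll_interval_s


# Figures from the 2023-02-28 update: 747 snapshot URIs, one request every 45 seconds.
per_operation = hourly_transactions(747, 45)
# Three operation types: ListFilesystemDir, GetFilesystemProperties, Unknown.
all_operations = hourly_transactions(747, 45, operations_per_poll=3)
per_day = all_operations * 24

print(f"{per_operation:,} per hour for one operation type")    # 59,760
print(f"{all_operations:,} per hour across three operations")  # 179,280
print(f"{per_day:,} per day at that rate")                     # 4,302,720
```

At that rate the storage account would see roughly 4.3 million of these transactions per day, which is consistent with the charges being noticeable.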

Update - 2023-03-02

- I set up a new Synapse workspace using the same ARM template that was used to deploy the workspace with the issue. The new Synapse workspace is exhibiting the same behavior.

- The behavior exists regardless of which Dataverse environment is linked.

Update - 2023-03-09

- The transactions have settled down on the test Synapse workspaces that I set up on 2023-03-02, but the issue remains for my production workspace. Both test workspaces showed a decrease in transactions on 2023-03-05 between 9 AM and 9 PM. The test workspaces are empty except for the Dataverse lake database.

Update - 2023-03-13

Transactions in my production workspace returned to normal levels on March 9, shortly before I spoke to Microsoft support. They suspect it was related to a Synapse Link for Dataverse issue that was resolved around the same time, but they aren't 100% sure. I sent additional log information to help troubleshoot.