I want to build a sample based on the age of each serial number, with a condition on its failure status. I am interested in 3-day windows per serial number. Healthy serial numbers with less than 3 days of history should be excluded, but failed serial numbers should be included even if they are less than (or exactly) 3 days old.

For example, C failed on January 3rd, so I need January 1st and 2nd for serial C in my new sample. D failed on January 4th, so I need January 3rd, 2nd, and 1st for D. For A and B, I need January 5th, 4th, and 3rd, i.e. 3 days in total. I don't need E and F, because they are healthy and have fewer than 3 days of observations.

In summary, I need the 3 days before the actual failure for failed serial numbers, and the 3 most recent days of observations for healthy serial numbers.
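To pin the rule down, here is a minimal plain-Python sketch of the selection applied to toy data that mirrors serials A to F. It assumes one row per serial per day, a `failure` flag equal to 1 on the failure day, and that the failure day itself is excluded (matching the C and D examples). The names `rows`, `days`, `select_sample`, and the year 2021 are hypothetical placeholders, not taken from the real data.

```python
from collections import defaultdict
from datetime import date

N_DAYS = 3  # window length from the question


def days(first, last):
    """January dates first..last of a placeholder year (hypothetical)."""
    return [date(2021, 1, k) for k in range(first, last + 1)]


# Hypothetical toy data mirroring serials A-F: (serial, date, failure),
# where failure == 1 marks the day the serial failed.
rows = (
    [("A", d, 0) for d in days(1, 5)]
    + [("B", d, 0) for d in days(1, 5)]
    + [("C", d, int(d.day == 3)) for d in days(1, 3)]
    + [("D", d, int(d.day == 4)) for d in days(1, 4)]
    + [("E", d, 0) for d in days(4, 5)]
    + [("F", d, 0) for d in days(5, 5)]
)


def select_sample(rows, n_days=N_DAYS):
    """Return (serial, date) pairs to keep; assumes one row per serial per day."""
    by_serial = defaultdict(list)
    for serial, day, failure in rows:
        by_serial[serial].append((day, failure))

    sample = []
    for serial, obs in by_serial.items():
        obs.sort()  # oldest observation first
        dates = [day for day, _ in obs]
        fail_dates = [day for day, failure in obs if failure == 1]
        if fail_dates:
            # Failed serial: up to n_days observations strictly before the
            # first failure; fewer than n_days is acceptable (serial C).
            before = [day for day in dates if day < min(fail_dates)]
            keep = before[-n_days:]
        elif len(dates) >= n_days:
            # Healthy serial with enough history: its n_days most recent days.
            keep = dates[-n_days:]
        else:
            # Healthy serial younger than n_days (E, F): drop it.
            keep = []
        sample.extend((serial, day) for day in keep)
    return sample


for serial, day in sorted(select_sample(rows)):
    print(serial, day.isoformat())
```

"Most recent" is taken relative to each serial's own last observation; if it should instead be relative to the latest date in the whole dataset, the healthy branch would need to filter on that global date.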

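```python
from pyspark import SparkFiles
from pyspark.sql import SparkSession

# Reuse the active session (e.g. in a notebook) or start a new one
spark = SparkSession.builder.getOrCreate()

url = "https://gist.githubusercontent.com/JishanAhmed2019/6625009b71ade22493c256e77e1fdaf3/raw/8b51625b76a06f7d5c76b81a116ded8f9f790820/FailureSample.csv"

# Distribute the file to the cluster, then read it back by its file name
spark.sparkContext.addFile(url)
df = spark.read.csv(SparkFiles.get("FailureSample.csv"), header=True, sep="\t")
```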

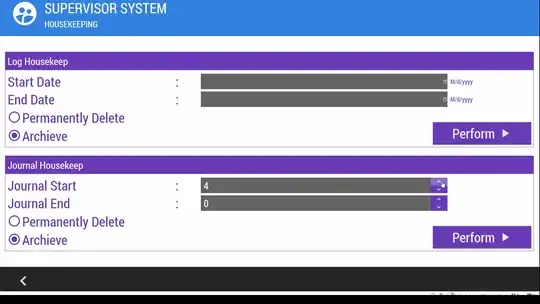

Current format:

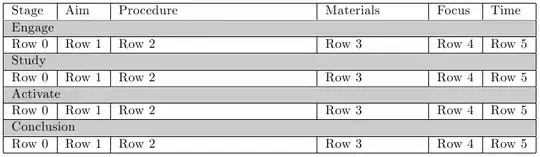

Expected sample: