Background info

There is nothing unusual in this PDF compared to any other.

As in any PDF, the text is written in whatever order the authoring software chose. For example, the first line of the PDF body (港区内認可保育園等一覧, "List of licensed nursery schools etc. in Minato City") is the 1262nd block of text, added long after the table was started. To hear the written order you can use Read Aloud, which also lets you verify character and language recognition, but unless the PDF was correctly tagged it will likewise jump from text block to block.

So internally the text is rarely tabular; the first 8 lines are:

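1 認可保育園 (1 Licensed nursery schools)

0歳 1歳 2歳3歳4歳5歳 計 (age 0, 1, 2, 3, 4, 5, total)

短時間 標準時間 (short hours, standard hours)

001010 区立 (ward-run)

3か月 (3 months)

3455-

4669 (together with the line above, apparently one phone number split in two)

芝5-18-1-101 (an address in Shiba)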

Thus you need text extractors that work in a grid-like manner, or that convert the text layout into row-by-row output.
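A minimal sketch of the row-by-row idea, assuming you already have each text block's left edge, top edge (in PDF points, growing downward) and text from whatever extractor you use. `Block`, `blocks_to_rows` and the 3-point `y_tolerance` are hypothetical choices for illustration, not part of any particular tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    x: float     # left edge in PDF points (assumed input format)
    y: float     # top edge in PDF points, growing downward (assumed)
    text: str


def blocks_to_rows(blocks: List[Block], y_tolerance: float = 3.0) -> List[str]:
    """Group blocks into rows by their top edge, then read each row left to right."""
    rows = []  # each entry: (y of the row's first block, blocks in that row)
    for b in sorted(blocks, key=lambda blk: (blk.y, blk.x)):
        if rows and abs(b.y - rows[-1][0]) <= y_tolerance:
            rows[-1][1].append(b)      # close enough vertically: same row
        else:
            rows.append((b.y, [b]))    # start a new row
    return [" ".join(blk.text for blk in sorted(row, key=lambda blk: blk.x))
            for _, row in rows]
```

Because the blocks are re-sorted by position, the order in which the author wrote them no longer matters; the tolerance is the knob that decides how ragged a row may be.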

This is where every extractor has to guess how to output such a jumbled, dense layout, and in general ALL of them will struggle with this page.

Hence it's best to use a good generic solution. It will still need data cleaning, but at least you will have something to work with.

If you only need one zone of the page, it is best to set a boundary around the area of interest so that extraneous text is never parsed.
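A minimal sketch of that filtering step, assuming each block comes with a bounding box `(x0, top, x1, bottom)` in PDF points plus its text; `crop_blocks` is a hypothetical helper. It keeps only blocks lying wholly inside the zone, so a block that straddles the boundary is dropped rather than cut.

```python
from typing import Iterable, List, Tuple

BBox = Tuple[float, float, float, float]  # (x0, top, x1, bottom) in PDF points (assumed)


def crop_blocks(blocks: Iterable[Tuple[BBox, str]], zone: BBox) -> List[str]:
    """Return the text of blocks whose whole box lies inside the zone of interest."""
    zx0, ztop, zx1, zbottom = zone
    kept = []
    for (x0, top, x1, bottom), text in blocks:
        # Fully-inside test: partially overlapping blocks are excluded
        if x0 >= zx0 and x1 <= zx1 and top >= ztop and bottom <= zbottom:
            kept.append(text)
    return kept
```

Tabula's area selection and pdftotext's `-x`, `-y`, `-W` and `-H` crop options apply the same idea at extraction time, which is usually the simpler route.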

Your "standalone Tabula tool" output is very good, but it could possibly be improved by using pdftotext -layout and adjusting some of its options to produce a more regular order.
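A sketch of driving pdftotext (from poppler-utils) from Python, assuming it is on your PATH; `pdftotext_layout_cmd` and `extract_page` are hypothetical helpers. `-enc UTF-8` requests UTF-8 output explicitly, `-f`/`-l` restrict extraction to one page, and the optional `-fixed` value (a character pitch in points that forces physical layout) is the kind of option worth experimenting with. Options differ between builds, so check `pdftotext -h` on yours.

```python
import shutil
import subprocess
from typing import List, Optional


def pdftotext_layout_cmd(pdf_path: str, page: int, fixed: Optional[float] = None) -> List[str]:
    """Build a pdftotext command that keeps the physical layout of one page."""
    cmd = ["pdftotext", "-layout", "-enc", "UTF-8", "-f", str(page), "-l", str(page)]
    if fixed is not None:
        cmd += ["-fixed", str(fixed)]   # assumed character pitch in points
    cmd += [pdf_path, "-"]              # "-" sends the text to stdout instead of a .txt file
    return cmd


def extract_page(pdf_path: str, page: int, fixed: Optional[float] = None) -> str:
    """Run pdftotext and decode its output as UTF-8 (matching -enc UTF-8)."""
    if shutil.which("pdftotext") is None:
        raise RuntimeError("pdftotext (poppler-utils) not found on PATH")
    result = subprocess.run(pdftotext_layout_cmd(pdf_path, page, fixed),
                            capture_output=True, check=True)
    return result.stdout.decode("utf-8")
```

Comparing `extract_page(path, 1)` against a few `fixed` values side by side is a quick way to see which setting gives the most regular columns for this page.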

Your Question

Why is there a difference in the encoding of the output?

The Answer

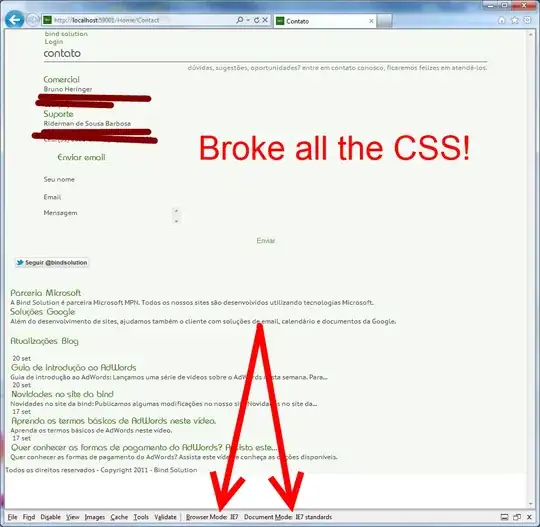

The output from a PDF is not its internal coding. The desired text output is UTF-8, but a PDF does not store text as UTF-8 or Unicode; it simply stores numbers that are looked up in a font's character map. If that map is poor, everything comes out as gibberish. In this case, however, the map is good, so where does the gibberish come from? It arises because the output stage is not using UTF-8, and console output is rarely Unicode.
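To make the two stages concrete, here is a toy sketch. `TOY_CMAP` stands in for a font's ToUnicode map (the codes are invented, not taken from this PDF), and `as_seen_by_console` simulates a console decoding the extractor's UTF-8 bytes with its own code page; cp1252 is used only as an example of a non-Unicode code page.

```python
# Toy stand-in for a font's ToUnicode map (hypothetical codes, not from the real PDF)
TOY_CMAP = {0x01: "認", 0x02: "可", 0x03: "保", 0x04: "育", 0x05: "園"}


def codes_to_text(codes):
    """Stage 1: map stored character codes to Unicode; a missing entry becomes U+FFFD."""
    return "".join(TOY_CMAP.get(c, "\ufffd") for c in codes)


def as_seen_by_console(text, console_encoding):
    """Stage 2: the extractor writes UTF-8 bytes; the console decodes them its own way."""
    return text.encode("utf-8").decode(console_encoding, errors="replace")
```

With a good map, stage 1 yields the correct characters; the garbling only appears in stage 2, when the console's code page is not UTF-8.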

You correctly show that the console needs to be set to Unicode mode; then the output should match (except for the density problem).
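On Windows the console can be switched with `chcp 65001`. From Python, a sketch of the same fix is to reconfigure the output stream to UTF-8 (via `io.TextIOWrapper.reconfigure`, Python 3.7+), or to bypass the console altogether by writing a UTF-8 file. `force_utf8` and `save_text` are hypothetical helper names.

```python
import io


def force_utf8(stream):
    """Switch a text stream such as sys.stdout to UTF-8 when it supports reconfigure()."""
    if isinstance(stream, io.TextIOWrapper):
        # reconfigure() flushes pending output before changing the encoding
        stream.reconfigure(encoding="utf-8")
    return stream


def save_text(path, text):
    """Write extracted text with an explicit encoding, avoiding the console code page."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(text)
```

Typical use is `force_utf8(sys.stdout)` at the top of a script before printing. The terminal font must also contain the Japanese glyphs, otherwise correct text can still display as boxes.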

The density issue would be easier to handle if the page were first preprocessed into a flowing format such as HTML, or by using a different language.

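If you take the HTML route, one option is poppler's `pdftotext -bbox`, which writes XHTML with one `<word>` element per word carrying `xMin`, `yMin`, `xMax` and `yMax` attributes. A minimal sketch of reading that back in page order, assuming that output shape; `parse_bbox_words` is a hypothetical helper built on the standard-library ElementTree parser.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def parse_bbox_words(xhtml: str) -> List[Tuple[float, float, str]]:
    """Return (xMin, yMin, text) for every <word>, sorted top-to-bottom then left-to-right."""
    root = ET.fromstring(xhtml)
    words = []
    for el in root.iter():
        # With a namespace declared, tags look like '{http://www.w3.org/1999/xhtml}word'
        if el.tag.rsplit("}", 1)[-1] == "word":
            words.append((float(el.get("xMin")), float(el.get("yMin")), el.text or ""))
    return sorted(words, key=lambda w: (w[1], w[0]))
```

Sorting by `(yMin, xMin)` alone still separates words whose tops differ by a fraction of a point, so in practice you would group nearby `yMin` values into rows before ordering by x.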