You can use the following code to convert JSON data to newline-delimited JSON (`NEWLINE_DELIMITED_JSON`) and load it into BigQuery.

```python
import json

from google.cloud import bigquery

client = bigquery.Client(project="project-id")

dataset_id = "dataset-id"
table_id = "table-id"

list_dict = [
    {
        "name": "jack",
        "id": "101",
        "info": [
            {
                "place": "india"
            }
        ],
    }
]

# Write one JSON object per line (newline-delimited JSON).
with open("sample-json-data.json", "w") as jsonwrite:
    for item in list_dict:
        jsonwrite.write(json.dumps(item) + "\n")

# Client.dataset() is deprecated in google-cloud-bigquery;
# build the table reference from a DatasetReference instead.
table_ref = bigquery.DatasetReference(client.project, dataset_id).table(table_id)

job_config = bigquery.LoadJobConfig()
job_config.source_format = bigquery.SourceFormat.NEWLINE_DELIMITED_JSON
job_config.autodetect = True  # Let BigQuery infer the schema from the data.

with open("sample-json-data.json", "rb") as source_file:
    job = client.load_table_from_file(
        source_file,
        table_ref,
        location="US",  # Must match the destination dataset location.
        job_config=job_config,
    )  # API request

job.result()  # Waits for the load job to complete.

print("Loaded {} rows into {}:{}.".format(job.output_rows, dataset_id, table_id))
```

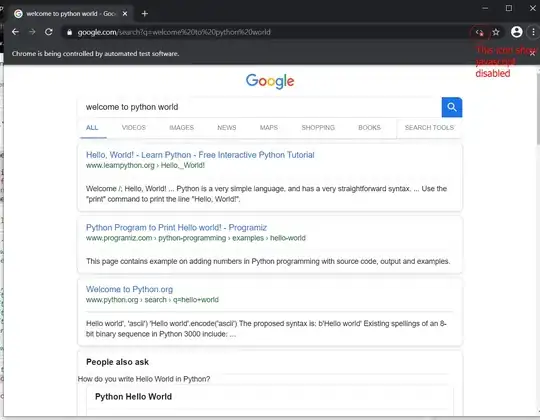

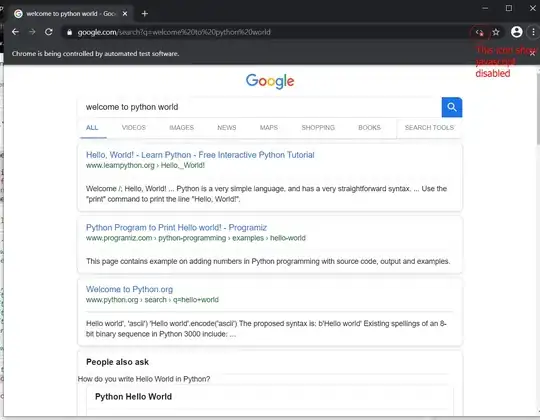

Output:

Your JSON data has to be in newline-delimited format: each record is a complete JSON object on a single line, with no enclosing array. For example:

```json
{"id":"1","first_name":"John","last_name":"Doe","dob":"1968-01-22","addresses":[{"status":"current","address":"123 First Avenue","city":"Seattle","state":"WA","zip":"11111","numberOfYears":"1"}]}
```
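If your data is already in a regular JSON file that holds an array of objects, a small conversion step can produce this format, and a quick check can catch lines that are not a single object before you start a load job. This is a minimal sketch assuming the input file contains one top-level JSON array; the function names `json_array_to_ndjson` and `check_ndjson` are illustrative, not part of any library.

```python
import json


def json_array_to_ndjson(src_path, dst_path):
    """Rewrite a file holding a JSON array as newline-delimited JSON.

    Assumes the source file contains a single top-level array of objects.
    Returns the number of records written.
    """
    with open(src_path) as src:
        records = json.load(src)
    if not isinstance(records, list):
        raise ValueError("expected a top-level JSON array")
    with open(dst_path, "w") as dst:
        for record in records:
            # json.dumps without indent keeps each object on one line.
            dst.write(json.dumps(record) + "\n")
    return len(records)


def check_ndjson(path):
    """Return 1-based line numbers that are not exactly one JSON object.

    This is a conservative check: blank lines and non-object values
    (arrays, strings, numbers) are flagged too.
    """
    bad_lines = []
    with open(path) as f:
        for lineno, line in enumerate(f, start=1):
            try:
                value = json.loads(line)
            except json.JSONDecodeError:
                bad_lines.append(lineno)
                continue
            if not isinstance(value, dict):
                bad_lines.append(lineno)
    return bad_lines
```

For example, `json_array_to_ndjson("data.json", "sample-json-data.json")` writes a file the load code can read, and an empty list from `check_ndjson("sample-json-data.json")` means every line parsed as one object. A pretty-printed (indented) JSON file fails the check, because each record spans several lines.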

You can also adapt this code to specify the BigQuery schema explicitly and to load the JSON data file from a GCS bucket.
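A rough sketch of that variant, assuming the sample record from the first example (`name`, `id`, and a repeated `info` record with `place`) and treating every leaf field as `STRING`; the field types, the function names, and the bucket/table names in the usage line are assumptions to adapt. The schema is kept in BigQuery's JSON schema-file format and read back with `Client.schema_from_json`, and the load uses `Client.load_table_from_uri` with `autodetect` left off.

```python
import json

# BigQuery JSON schema for the sample record (name, id, info[].place).
# The STRING types are assumptions; adjust them to your data.
SCHEMA = [
    {"name": "name", "type": "STRING", "mode": "NULLABLE"},
    {"name": "id", "type": "STRING", "mode": "NULLABLE"},
    {
        "name": "info",
        "type": "RECORD",
        "mode": "REPEATED",  # "info" is a JSON array of objects
        "fields": [{"name": "place", "type": "STRING", "mode": "NULLABLE"}],
    },
]


def write_schema(path):
    """Save SCHEMA as a JSON schema file."""
    with open(path, "w") as f:
        json.dump(SCHEMA, f, indent=2)


def load_ndjson_from_gcs(gcs_uri, table, schema_path, location="US"):
    """Load an NDJSON file from Cloud Storage into `table` with an explicit schema.

    `table` is a "project.dataset.table" string. Needs the
    google-cloud-bigquery package and credentials; it is imported here so
    the schema helpers above work without it.
    """
    from google.cloud import bigquery

    client = bigquery.Client()
    job_config = bigquery.LoadJobConfig(
        source_format=bigquery.SourceFormat.NEWLINE_DELIMITED_JSON,
        schema=client.schema_from_json(schema_path),  # explicit schema, no autodetect
    )
    job = client.load_table_from_uri(
        gcs_uri, table, location=location, job_config=job_config
    )
    job.result()  # Waits for the load job; raises if the job failed.
    return job.output_rows
```

After uploading the file (for example with `gsutil cp sample-json-data.json gs://your-bucket/`), you would call `write_schema("schema.json")` and then `load_ndjson_from_gcs("gs://your-bucket/sample-json-data.json", "project-id.dataset-id.table-id", "schema.json")`, where `your-bucket` is a placeholder.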